Unveiling new ideas in prompt engineering, particularly chain of thinking and its variations.

REVEAL Dataset

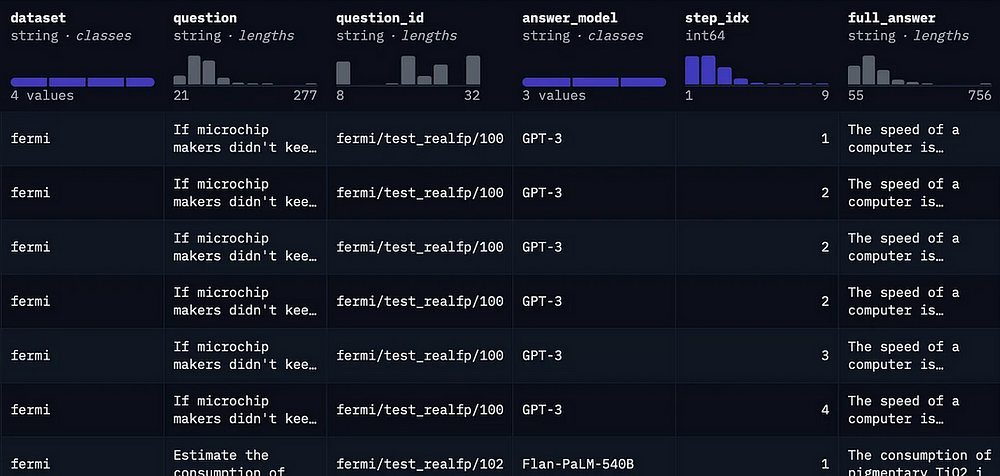

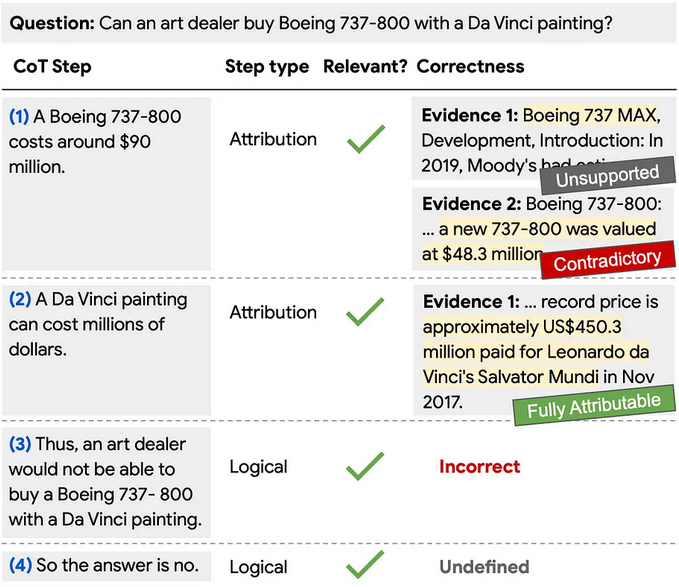

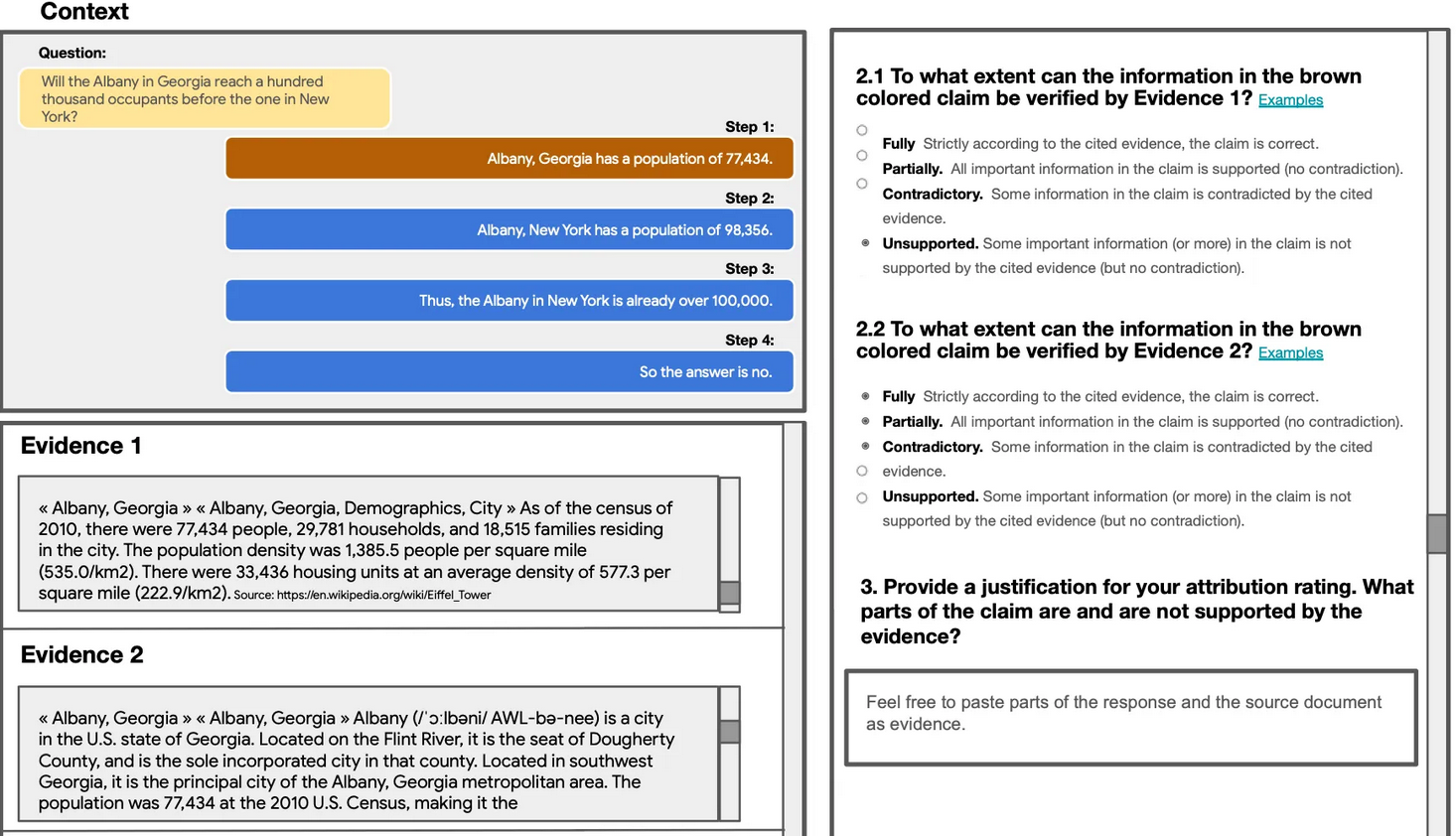

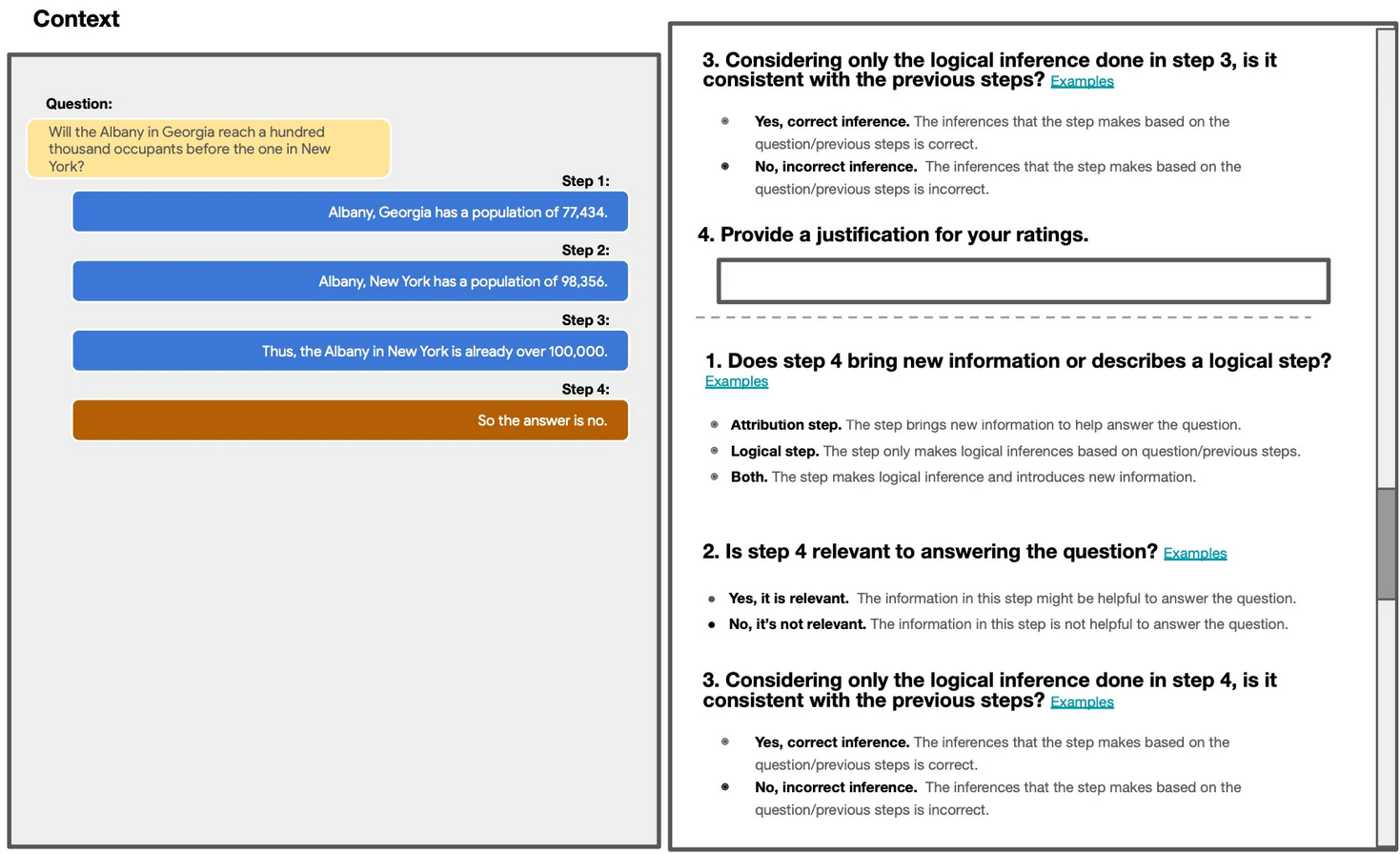

There have been numerous studies conducted on the topic of automated verification of LLM interactions. These methods evaluate LLM output and reasoning procedures in order to assess and enhance answer accuracy.[1]Google Research discovered that there are no fine-grained step-level datasets available for evaluating the chain of thought.[1]As a result, the REVEAL dataset is introduced, which is essentially a dataset for evaluating automatic verifiers of sophisticated CoT for open-domain question answering.[1]

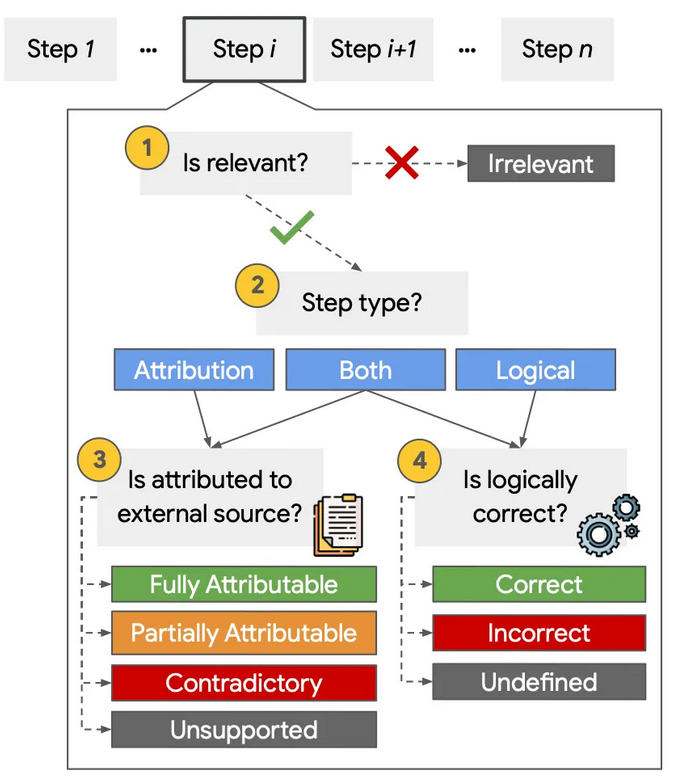

REVEAL includes detailed labels for relevance, attribution to evidence passages, and the logical correctness of each reasoning step in a language model's solution, spanning a wide range of datasets and cutting-edge language models.[1]

The obtained dataset serves as a benchmark for assessing the performance of automatic LM reasoning verification systems.[1]

Challenges

The study largely focuses on analyzing verifiers that examine evidence attribution to a specific source, rather than fact-checkers that retrieve evidence directly. As a result, REVEAL can only evaluate verifiers who act on specific given evidence.[1]However, it is important to note that the dataset's goal is to collect a varied range of situations in order to evaluate verifiers, rather than to examine the CoT itself using a "ideal" retriever. The labels in the dataset are well-defined for the particular evidence passages employed.[1]

Practical Examples

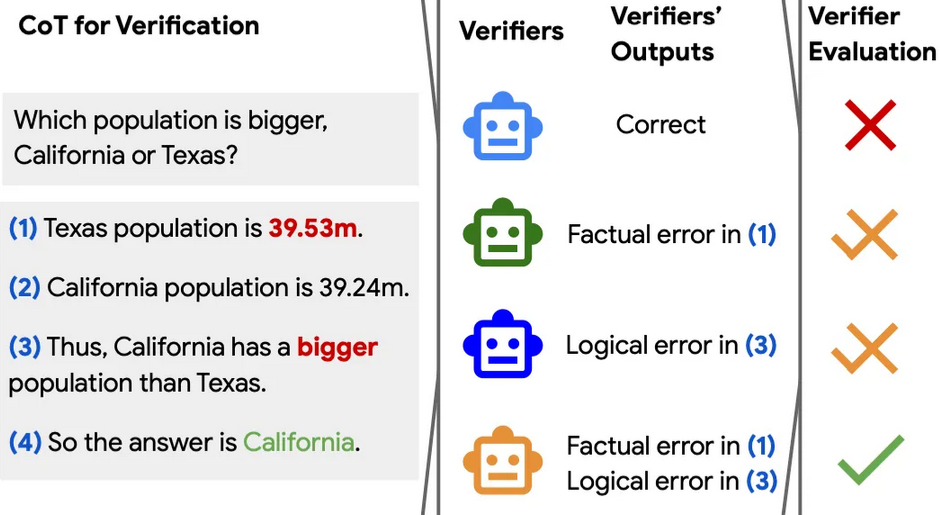

REVEAL is a standard for assessing reasoning chains in Chain-of-Thought format. REVEAL determines whether a reasoning chain provides a proper justification for the final response. It is vital to note that the answer may be correct even if the reasoning is erroneous.[1]

Annotation

Annotation was done using a complicated GUI that relied greatly on the annotator's opinion.[1]The annotation task is divided into two parts; one focuses on logic annotation (including relevance, step type, and logical accuracy scores). In conjunction with another challenge focusing on attribution annotations (including relevance and step-evidence attribution).[1]

Chain-of-Thought Alternatives

Concise Chain-of-Thought (CCoT) Prompting

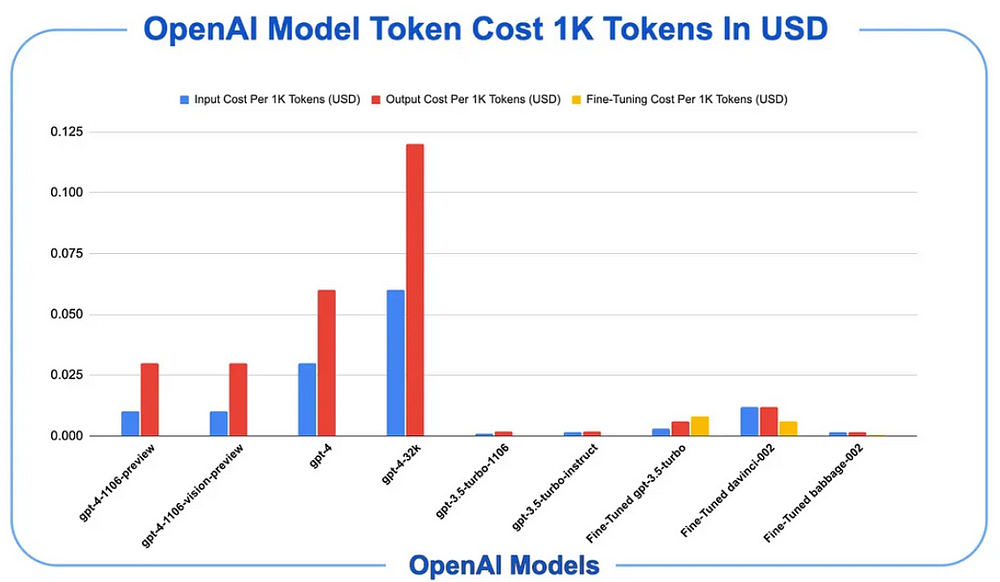

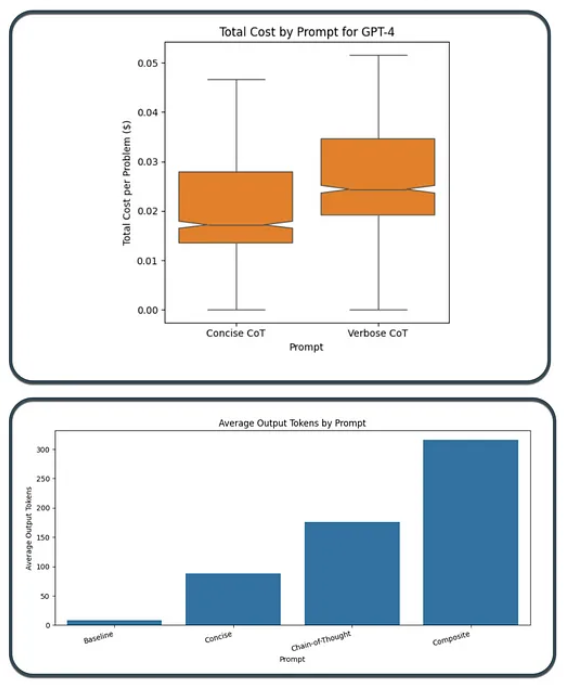

Traditional CoT requires more output tokens, but CCoT prompting is a prompt-engineering technique targeted at decreasing LLM answer verbosity and inference times.[2]For multiple-choice Question-and-Answer, CCoT reduces response length by 48.70%. As a result, CCoT reduces output token costs and provides more concise replies.[2]

The study also discovered that problem-solving performance remained consistent between the two methodologies, CoT and CCoT. For math tasks, CCoT has a performance penalty of 27.69%. Overall, CCoT reduces token costs by an average of 22.67%.[2]

Inference latency is also an issue, which can be mitigated to some extent by making answers shorter. This can be accomplished with no degradation in performance; the study discovers that CCoT has no performance penalty in this aspect.[2]

Limitations

The investigation only used GPT LLMs; it would be interesting to compare the performance of open-source and less competent LLMs. The study only used a single CoT and CCoT prompt. As a result, different combinations of CoT and CCoT cues may yield different outcomes.[2]Given the difference in prompt performance across activities, it appears that implementing user intent triage can be effective. User input is classified for use in orchestrating several LLMs, selecting the best relevant prompting approach, and so forth.[2]

Random Chain-Of-Thought Prompting

Given the majority of LLM-related studies published in recent months, a few themes have emerged. These trends represent a general consensus on which research is determining how effective generative apps are. [3]

1. Complexity

Complexity is being created in the processing, preparation, and delivery of inference data to the LLM. The overall strategy of breaking down a task into sub-tasks is becoming the de facto standard for conducting prompt engineering procedures such as self-explanation, planning, and other data-related tasks.[3]Applications built on LLMs make advantage of rapid pipelines, agents, chaining, and other features.[3]

2. LLM-Based Tasks

Some of the data and other outcome-related activities are delegated to the LLMs. This is a type of LLM orchestration in which actions that were previously monitored by humans or controlled through other processes are delegated to LLMs to handle.[3]For example, in situations when data is scarce, LLMs can be used to generate synthetic data, explanations, task decomposition, and planning. Contrastive chains and other features.[3]

3. In-Context Learning (ICL)

A new study contends that Emergent Abilities are not hidden or unpublished model capabilities waiting to be discovered, but rather new approaches to In-Context Learning that are being developed.[3]To successfully exploit in-context learning, highly contextual reference information must be supplied to the LLM during inference.[3]

4. Human Feedback

Recent research have begun to stress the need of a data-centric strategy that includes human-inspected and tagged data. Good data practices will become increasingly important, particularly as fine-tuning becomes more available.[3]

5. Inspectability & Observability

LLM-based implementations can be broadly classified into two types: gradient and nongradient.[3]A LLM can receive data in two stages: during model training (gradient) and at inference time (gradient-free).[3]

Model training constructs, modifies, and adapts the underlying ML model. This model is also known as a model frozen in time with a specific time stamp. This is typically an opaque process with little inspectability and observability.[3]Non-gradient techniques are preferred because of their transparency and observability.[3]

Inference occurs when the LLM is questioned and the model generates a response. This is also known as a gradient-free strategy because the underlying model is not trained or modified.[3]

Recent research and investigations have discovered that supplying context during inference is critical, and several ways are being used to give highly contextual reference data with the cue to nullify hallucination. This is also known as quick injection.[3]

Context and conversational structure can be given using RAG, prompt pipelines, Autonomous Agents, Prompt Chaining, and prompt engineering methods.[3]

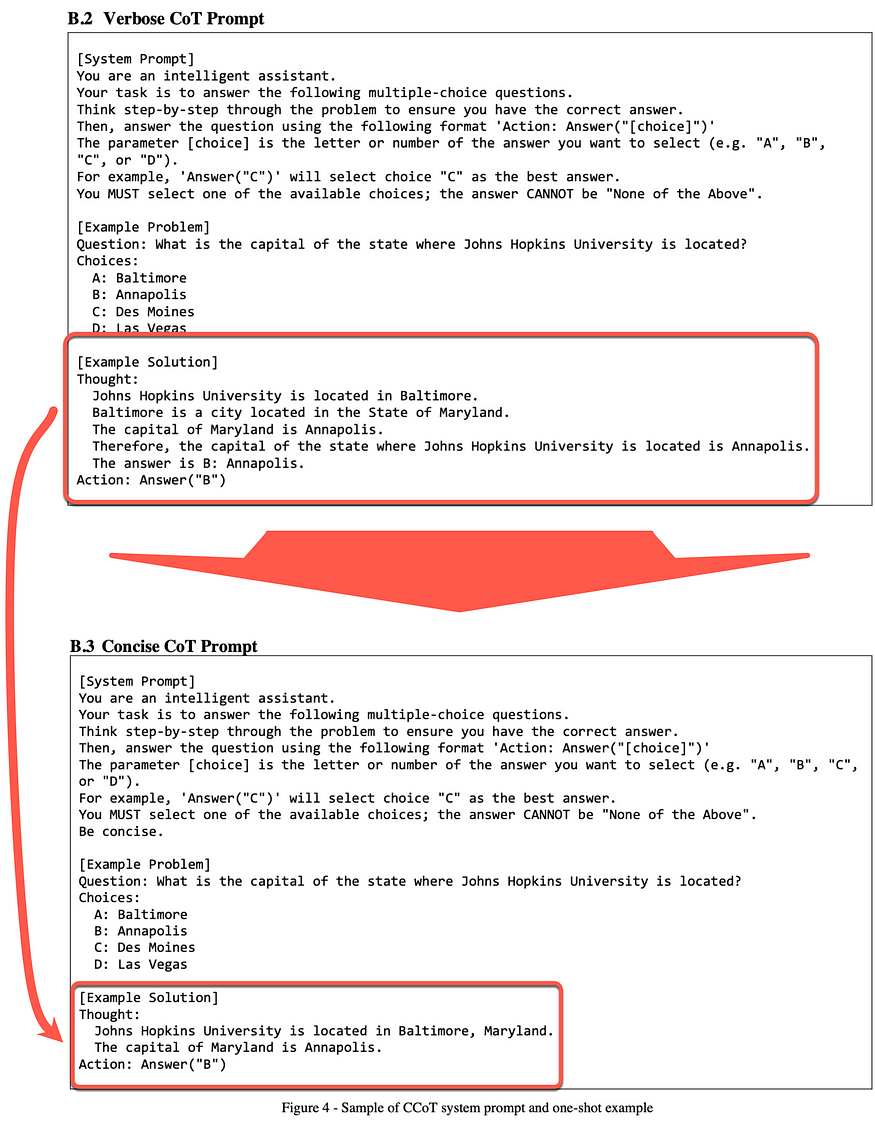

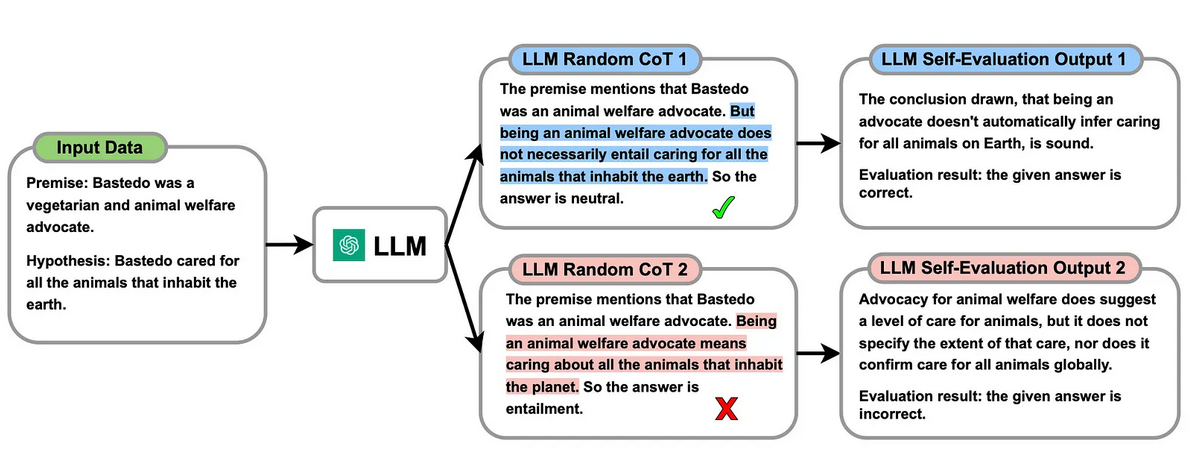

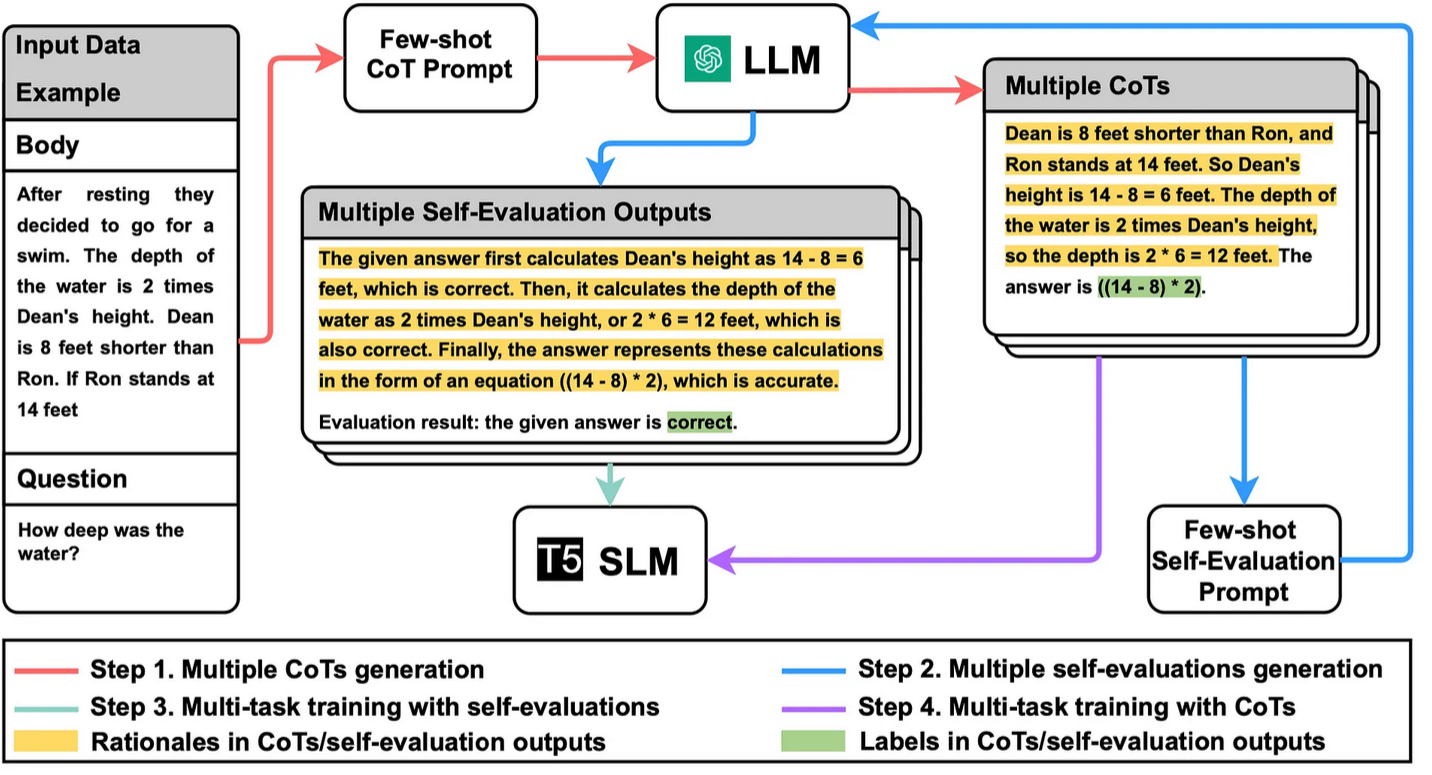

Random CoT

In Random CoT, the LLM generates multiple CoT responses as well as self-evaluations. These responses are generated using natural language during inference.According to the study, LLM human-like self-evaluation allows the LLM to assess the accuracy of the generated CoT reasoning and determine what is correct or incorrect.[3]

How Random CoT Prompting Work?

Step 1

The LLM produces many CoTs. An unlabeled dataset is utilized to create a CoT prompt template outlining how the task should be approached.[3]The prompt template includes instances of few-shot CoT. Following the CoT technique, each example consists of a triplet that includes example input, pseudo labels, and a user-provided explanation for the classifications.[3]

Step 2

Obtain several self-evaluation outputs from the LLM.[3]

Step 3

Train the SLM with several self-evaluation outputs, allowing it to discern between right and wrong.[3]

Step 4

Train the SLM with several CoTs to provide broad reasoning capabilities. After successfully implementing the self-evaluation capability, the attention switches to leveraging the numerous CoTs gained from the LLM, with the goal of training the SLM to internalise diverse reasoning chains.[3]This two-pronged training approach guarantees that the SLM is not simply parroting the capabilities, but is instead entrenched in introspective self-evaluation and a deep comprehension of various thinking, reflecting the LLM's superior cognitive faculties.[3]

The training technique for SLMs begins with distilling self-evaluation capability to set the groundwork for lowering the influence of errors in CoTs on SLMs, followed by incorporating complete reasoning capability through diversified CoT distillation.[3]

Distillation

This study demonstrates an important procedure known as distillation.[3]LLM distillation encompasses a variety of strategies that use one or more large models to guide a smaller model through a specified job. In this study, it is referred to as an SLM (Small Language Model).[3]

This method can be applied to less capable, smaller, cheaper, or less capable models. The model's NLG prowess and fundamental logic are utilized, but there is no knowledge-intensive NLP (KI-NLP) component.[3]

Again, data preparation is critical for this strategy; pseudo-labels created by the LLM can be used to guide smaller models, but human-annotated labels, or at least human supervision, are preferred.[3]

Beyond Chain-of-Thought LLM Reasoning

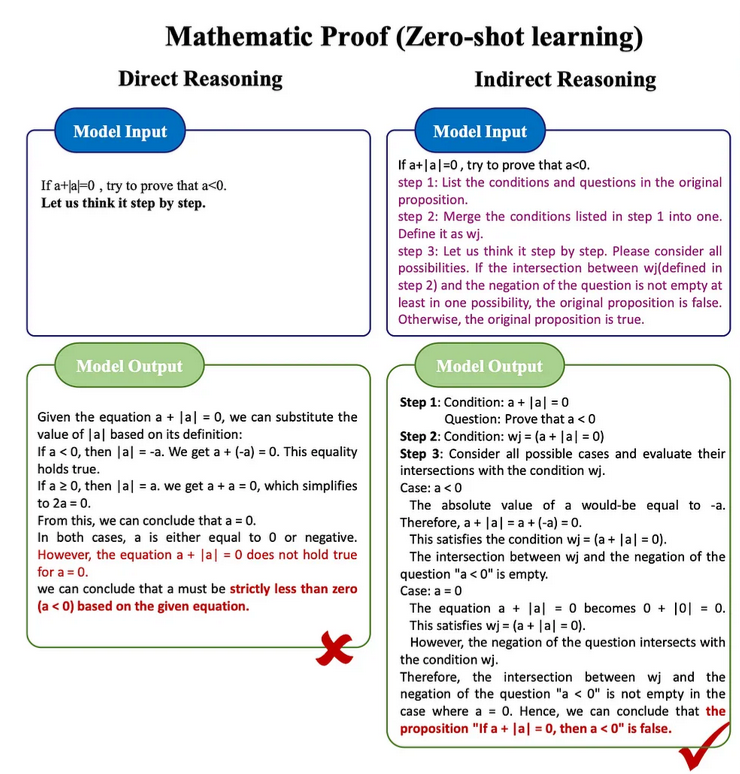

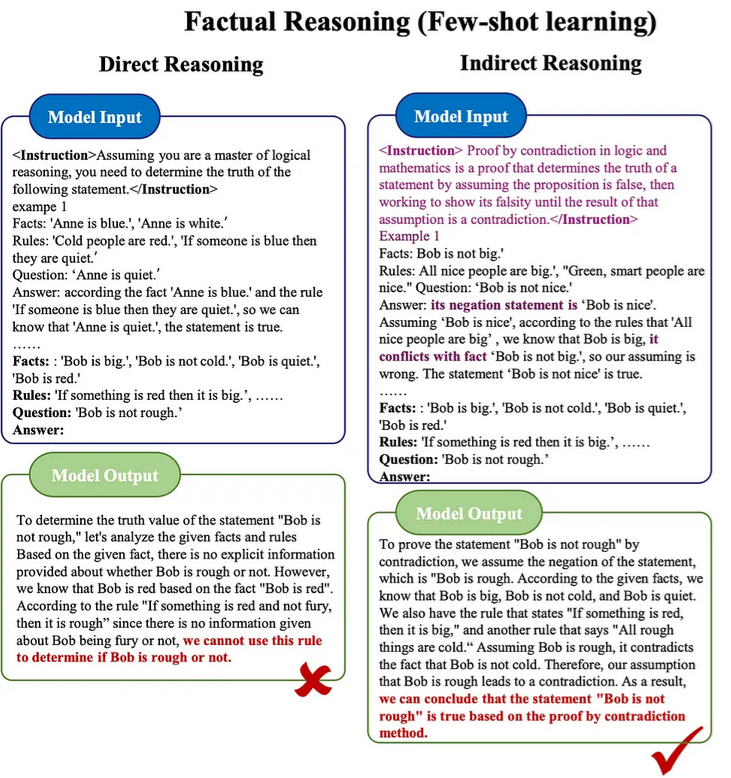

Recent research has addressed the need to improve the reasoning skills of Large Language Models (LLMs) beyond Direct Reasoning (DR) frameworks such as Chain-of-Thought and Self-Consistency, which may struggle with real-world problems that need Indirect Reasoning (IR).[4]

The paper offered an IR technique that uses the logic of contradictions for tasks such as factual reasoning and mathematical demonstration. The process entails enhancing data and rules with contrapositive logical equivalence and creating prompt templates for IR based on proof by contradiction.[4]

LLMs excel in language comprehension, content development, conversation management, and logical reasoning[4].

- Improved accuracy in factual reasoning and mathematical proof by 27.33% and 31.43%, respectively, compared to traditional DR approaches.

- Combining IR and DR approaches improves performance while showcasing the effectiveness of the proposed strategy.

Indirect Reasoning Prompt Structure

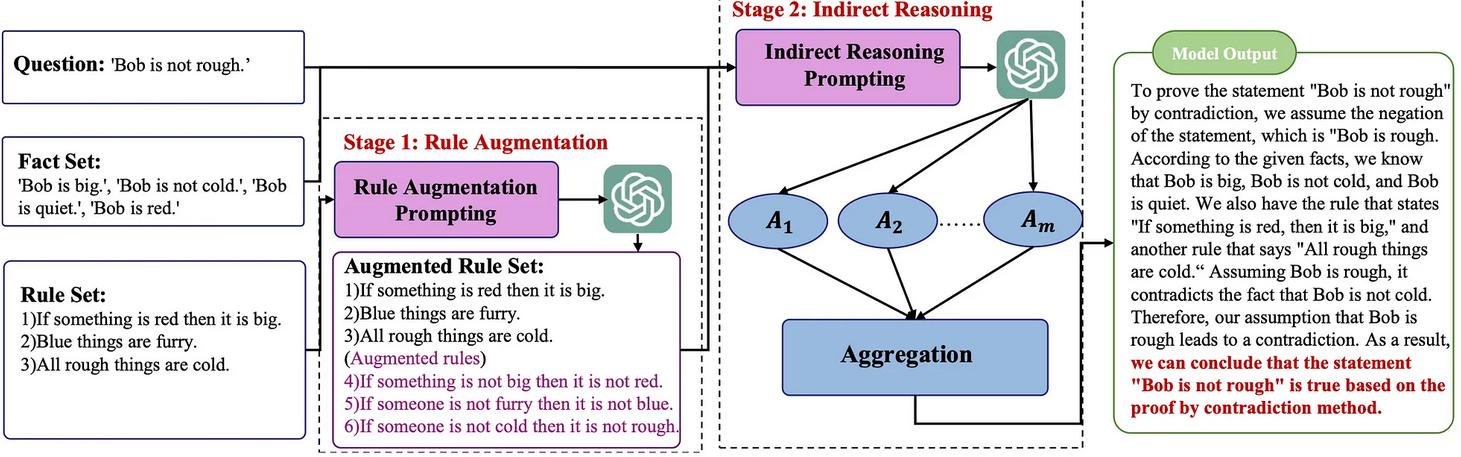

Rule Augmentation

LLMs frequently struggle to grasp complex regulations, which limits their capacity to apply them efficiently.[4]Consider the following[4].

Fact: Bob doesn't drive to work.

Rule: If the weather is good, Bob drives to work

Humans can use the equivalence of contrapositives to conclude that the rule is equivalent to: If Bob does not drive to work, the weather is not good, thus humans can deduce.[4]This permits humans to assume, based on the rule, that the weather is not good.[4]

LLMs may find this reasoning technique difficult; therefore, to remedy this issue, the study proposes adding the contrapositive of rules to the rule set.[4]

As a result, few-shot learning is being used in conjunction with in-context learning.[4]

Prompt template:

# <Instruction>The contrapositive is equivalent to the original rule,

and now we need to convert the following rules into their contrapositives.

</Instruction>

# Example 1

# Rule: [rule1]

# Contrapositive: [contrapositive1]

...

# Rules: [rules]

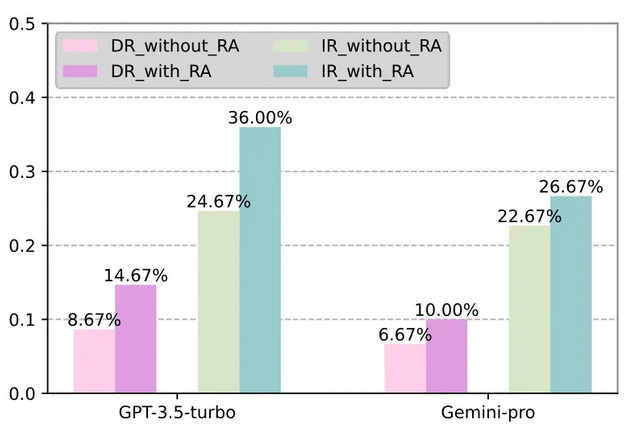

# Contrapositives:Performance

It is clear that both models shown below have significantly improved performance; however, the jump in improvement comes from the GPT.3.5 turbo on the IR/RA scenario.[4]

Chain Prompting Alternatives

Chain-of-Symbol Prompting (CoS)

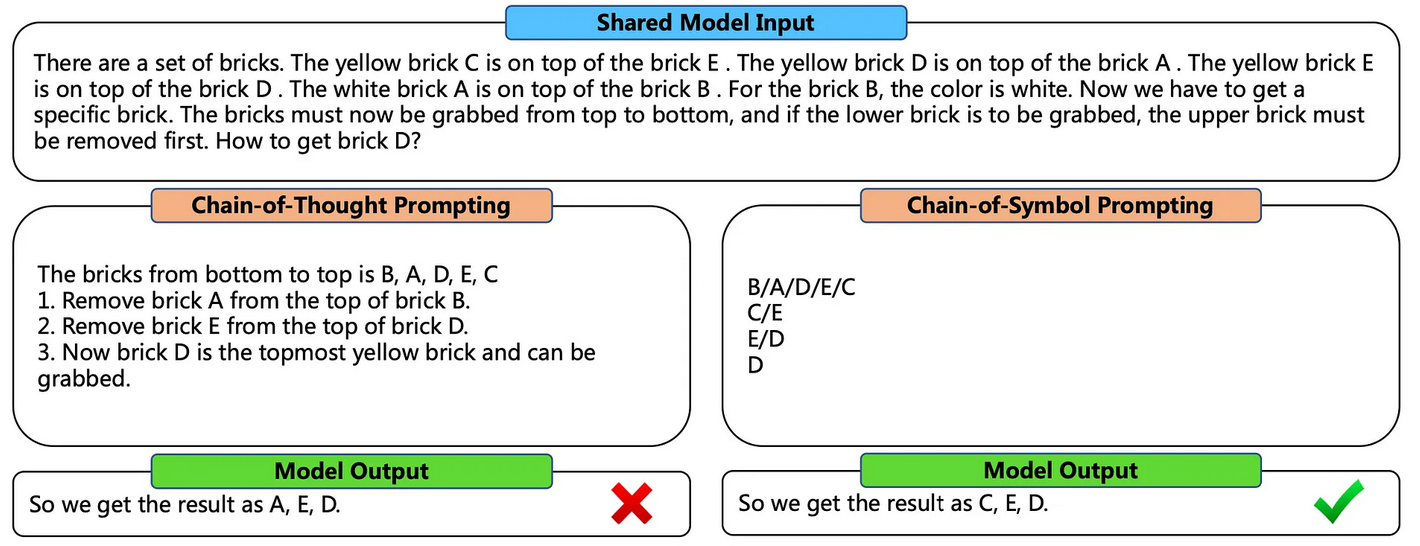

Conventional Chain-of-Thought prompting is helpful for LLMs in general, but its performance in geographical contexts has mainly gone unexplored.[5]

This study looks into the performance of LLMs for difficult spatial thinking and planning tasks, employing natural language to simulate virtual spatial settings.[5]

LLMs have difficulties in processing spatial relationships in textual prompts, raising the question of whether natural language is the best representation for complicated spatial contexts.[5]

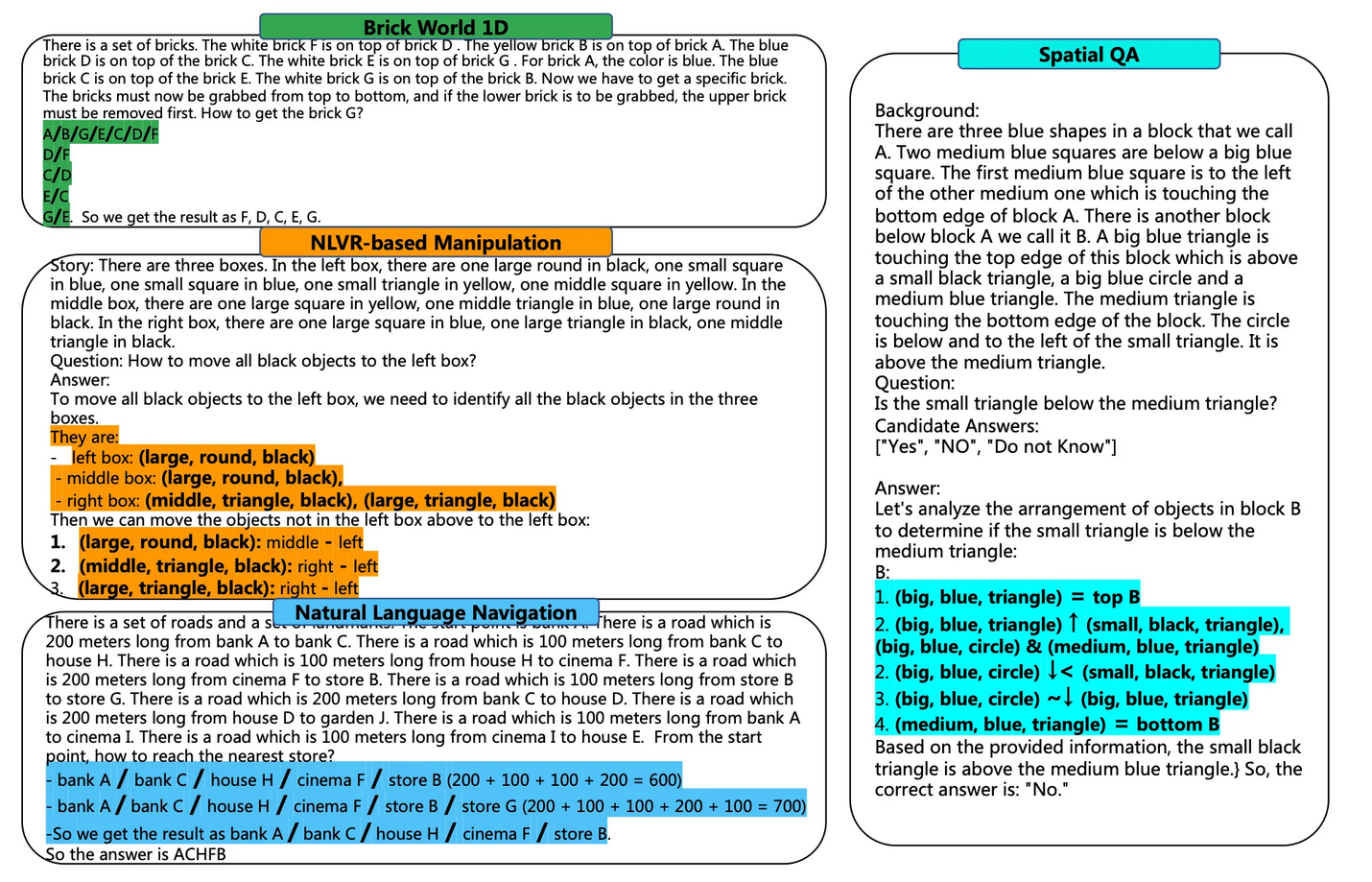

Chain-of-symbol prompting (CoS) refers to spatial links between condensed symbols during chained intermediate thought phases. CoS is simple to use and does not require further LLM training.[5]

CoS beats Chain-of-Thought (CoT) Prompting in natural language across three spatial planning tasks and an existing spatial quality assurance benchmark. CoS improves accuracy by up to 60.8% (from 31.8% to 92.6%), while also reducing the amount of tokens utilized in prompts.[5]

Considerations

Converting spatial tasks into symbolic representations can add complexity and computing overhead to the process.[5]To some extent, describing a virtual geographical world for the LLM to travel is a form of symbolic reasoning in LLMs.[5]

Combining symbolic thinking and spatial relationships is a powerful combination in which symbolic descriptions are linked to spatial representations.[5]

The usefulness of this technique is clear; nonetheless, the difficulty is to generate good CoS prompts at scale, automatically, without operator involvement or prompt scripting.[5]

CoS

It has been demonstrated that LLMs have outstanding sequential textual reasoning capacity during inference, which results in a considerable improvement in their performance when confronted with reasoning questions described in natural languages.[5]This phenomena is clearly shown by the Chain of Thought (CoT) approach, which gave rise to the phenomenon known as Chain-of-X.[5]

The graphic below compares Chain-of-Thought (CoT) with Chain-of-Symbol (CoS), demonstrating how LLMs may handle complex spatial planning tasks with better performance and token utilization.[5]

- Automatically prompt the LLM to generate a CoT demonstration using a zero-shot approach.

- If the resulting CoT demonstration contains any problems, correct them.

- Replace the spatial relationships stated in natural languages with random symbols in CoT, keeping only objects and symbols and removing all other descriptions.

Advantages of CoS

CoS, as opposed to CoT, allows for more succinct and simplified operations.[5]CoS's structure facilitates quick analysis by human annotators.[5]

CoS gives a more accurate representation of spatial issues than plain language, making it easier for LLMs to learn.[5]

CoS lowers the number of input and output tokens.[5]

The graphic below contains prompt engineering examples for Brick World, NLVR-based Manipulation (Natural Language Visual Representation), and Natural Language Navigation. Chains of symbols are highlighted.[5]

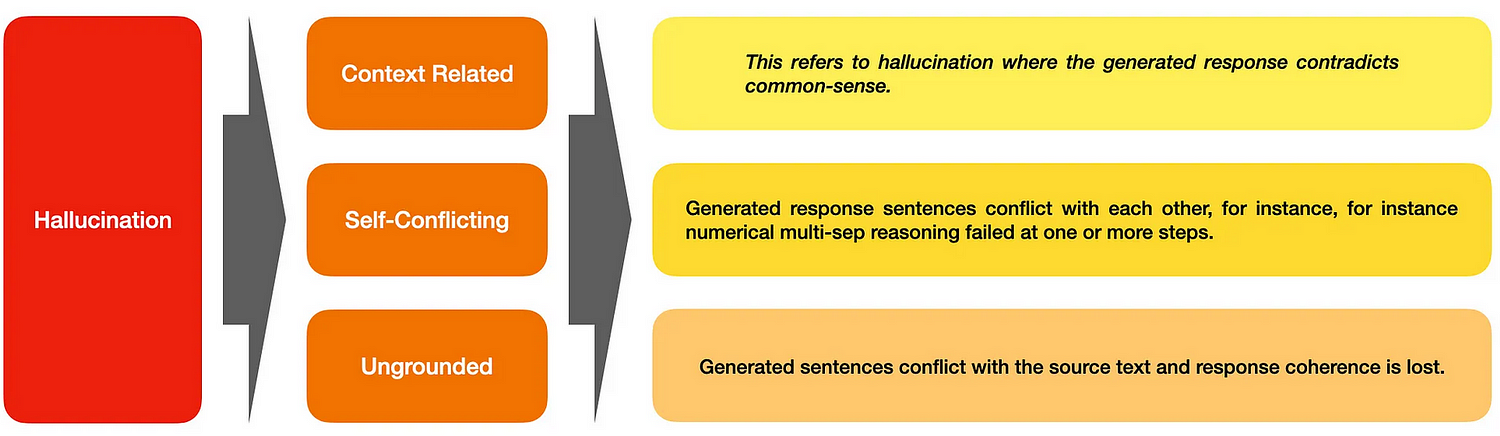

Chain Of Natural Language Inference (CoNLI)

It is critical for LLM text-to-text generation to produce factually consistent results in regard to the source material.[6]The study finds several elements that create hallucinations. These include: [6]

- Long input context,

- Irrelevant context distraction, and

- Complicated reasoning.

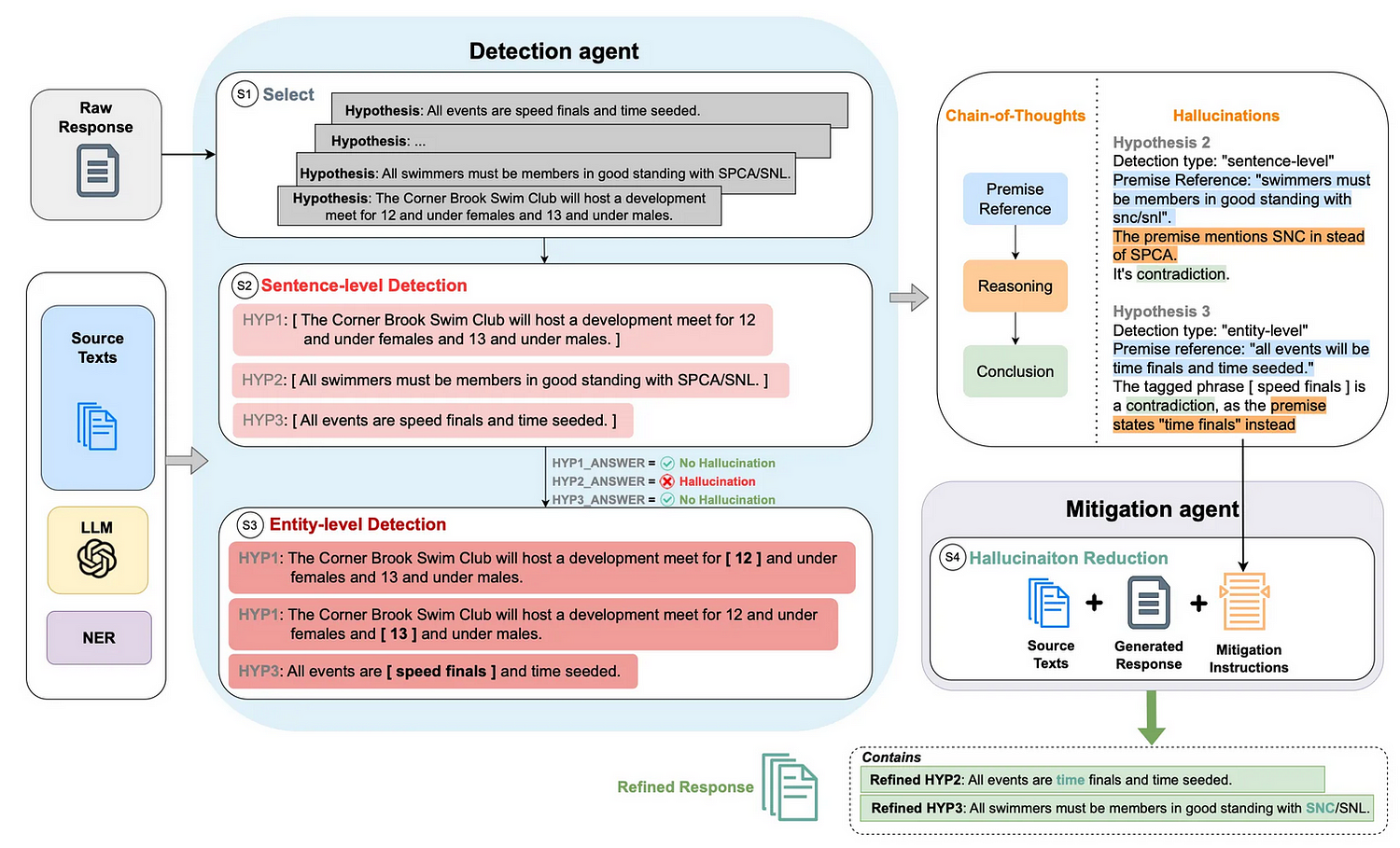

One of the goals of CoNLI was to rely solely on LLMs with no fine-tuning or domain-specific prompt engineering. CoNLI is defined as a simple plug-and-play system that may be used to detect and reduce hallucinations while performing competitively in a variety of settings.[6]

Ecosystem Constraints

It should be noted that one of the study's goals was to reduce hallucinations in cases where the maker did not have complete control over the LLM model or could not use additional external knowledge. It is vital to highlight that the study's foundation technique is based on four principles: contextual retrieval corpus, self-criticism at inference, multi-agent debate, and decoding.[6]Consider the figure below, which depicts the proposed CoNLI framework with a real-world example. [6]

If no hallucinations are discovered, the system will proceed to detailed entity-level detection. Detection reasonings will serve as mitigating directions.[6]

This is the second study in a short amount of time that uses sentence- and entity-level detection to ensure generated text coherence and veracity.[6]

Entity detection is an effective strategy since it covers a wide variety of conventional named entities and may be performed out of the box.[6]

Considerations

Some important considerations from the study[6]:

- CoNLI was evaluated on text abstractive summarization and grounded question-answering using both synthetically generated and manually annotated data. [6]

- The study focused on text-to-text datasets, which are commonly used for real-time and offline processing of unstructured data and interactions.[6]

- Astute prompt engineering techniques can eliminate hallucinations.[6]

- However, grounding with a contextual corpus remains highly effective.[6]

- I like how NLU is making a comeback to verify the LLM output. The framework conducts an NLU pass on the resulting text to validate its truthfulness.[6]

Active Prompting with Chain Prompting

There are emergent abilities being discovered in Large Language Models (LLMs), which are essentially alternative ways to exploit in-context learning (ICL) within LLMs. LLMs thrive at ICL, and recent research has demonstrated that with excellent task-specific prompt engineering, LLMs may provide high-quality results.[7]Question and answer activities using example-based prompting and CoT reasoning are especially effective. A recent study expressed worry that these prompt examples may be too inflexible and fixed for various activities.[7]

Active Prompting uses a methodology that has gained favor in recent studies and developments. This process consists of the following elements[7]:

- Humans annotated and reviewed the data.

- Using one or more LLMs for intermediate tasks before the final inference.

- Adding flexibility to the rapid engineering process necessitates the introduction of complexity and some form of framework for managing the process.

The Four Steps Of Active Prompting

- Uncertainty Estimation

The LLM is queried a predetermined amount of times to provide potential answers to a set of training questions. These answer and question sets are constructed in a decomposed manner using intermediary processes. An uncertainty calculator is utilized based on the results of an uncertainty metric.[7]

2. Selection

The most uncertain queries are chosen for human review and annotation once they have been ranked by uncertainty.[7]

3. Annotation

Human annotators are employed to annotate the chosen ambiguous questions.

3. Final Inference

The freshly annotated exemplars are used to make final inferences for each question.[7]

Considerations

- When using this strategy, the inference cost and token use will increase because an LLM is used to calculate uncertainty.[7]

- This work demonstrates the relevance of a data-centric approach to applied AI via an RLHF strategy, in which training data is generated through a human-supervised process that is AI-accelerated.[7]

- AI can speed up human annotation by reducing noise and producing clear training data.[7]

- This strategy is leading us to a more data-focused method of working, including data discovery, design, and development.[7]

- As this type of application grows, a data productivity studio or latent space will be necessary to speed the data discovery, design, and development processes.[7]

Contribution

The contribution of the study is threefold:

- A careful procedure of selecting the most valuable and relevant information sets (questions and answers) for annotation. This is done while decreasing human data-related workloads using an AI-accelerated procedure.[7]

- The development of a reliable collection of uncertainty metrics.[7]

- On a variety of reasoning tasks, the suggested technique outperforms competing baseline models by a significant margin.[7]

Baselines

It is interesting to notice that this strategy has four methods serving as its primary baselines:

- Chain-of-thought (CoT) (Wei et al., 2022b): This method uses normal chain-of-thought prompting and provides four to eight human-written examples that represent a series of intermediate reasoning processes.[7]

- Self-Consistency (SC): An improved variant of CoT that differs from greedy decoding. Instead, it takes a sample of reasoning routes and chooses the most common response.[7]

- Auto-CoT (Zhang et al., 2022b) is a strategy that uses zero-shot prompting to cluster exemplars and provide rationales.[7]

- Random-CoT: Random-CoT serves as a baseline for Active-Prompt and uses the same annotation technique. The sole distinction is that it uses a random selection mechanism for questions from the training data during annotation instead of the specified uncertainty measures.[7]

More On Chain Prompting

A number of recent publications have demonstrated that, when given user instructions, Large Language Models (LLMs) can decompose difficult issues into a series of intermediate steps.[7]This fundamental notion was initially established in 2022 through the concept of Chain-Of-Thought (CoT) prompting. The main assumption of CoT prompting is to imitate human problem-solving strategies, in which we break down complex tasks into smaller steps.[7]

The LLM then handles each sub-problem with focused attention, limiting the likelihood of missing important facts or making incorrect assumptions. The division of tasks makes the LLM's operations less opaque, introducing transparency.[7]

More Chain-Of-Thought-like methodologies have been introduced in the following areas:

Auto-Cot, Program-of-Thoughts, MultiModal-CoT, Tree-of-Thought, Graph-of-Thought, Algorithm-of-Thoughts…cbarkinozer.blogspot.com

Prompt Pipelines

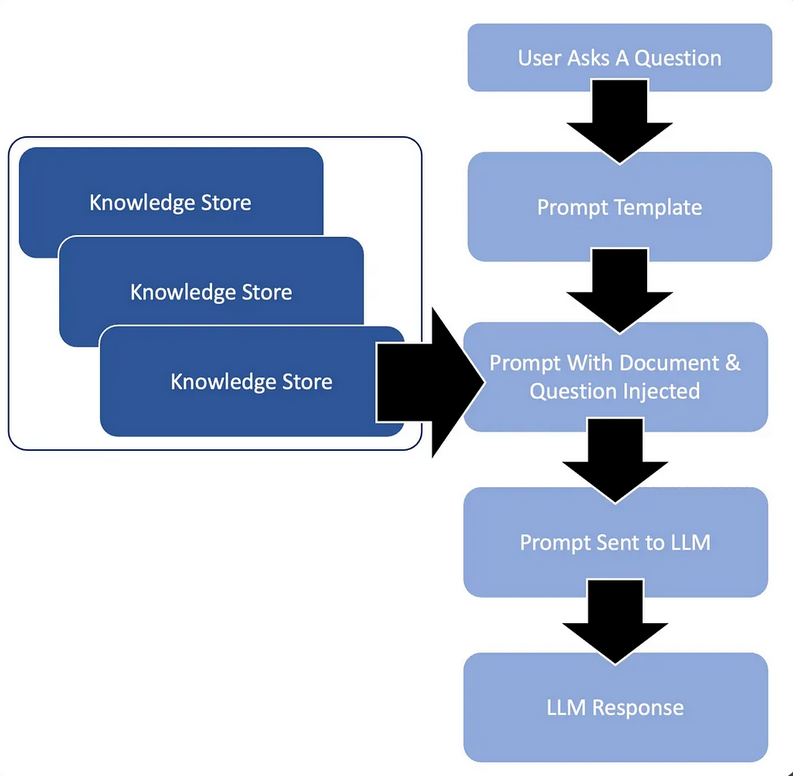

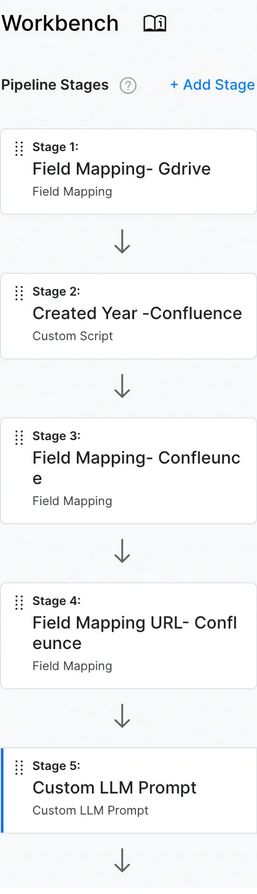

Autonomous agents and prompt chaining typically include interaction between the user and the generative AI application. As a result, a dialogue between the interface/application and the user occurs, with several dialog turns.[8]

A prompt pipeline is a series of stages or events that are started or triggered in response to a specified event or API call.[8]

Considering the image above, the purpose of a prompt pipeline GUI is to provide a user-friendly interface for establishing a sequence of events. This method of constructing prompt pipelines allows components to be easily reused.[8]

Prompt Pipelines augment prompt templates by automatically adding contextual reference material into each prompt.[8]

Entity Extraction uses natural language processing (NLP) techniques to detect named entities in the source field.[8]

Semantic Meaning Analysis is a technique for understanding and interpreting the meaning and structure of words, signs, and sentences.[8]

Custom Scripts allows you to create scripts to do any field mapping task, such as deleting or renaming fields.[8]

The majority of prompt pipelines begin with a user request. The request is sent to a specific prompt template, which is populated with highly contextual information for inference.[8]

Haystack’s Programable Prompt Pipelines

The leap from prompt chaining to autonomous agents is too large. This hole is filled by intelligent, programable prompt pipelines.[9]And, as I've mentioned several times recently, the difficulty with traditional chatbot architectures and frameworks is that you need to structure the user's unstructured conversational input. The structured data generated by the chatbot and returned to the user must then be unstructured again in the next abstraction layer.[9]

Input Data

OpenAI organized input data designed for chatbots or multi-turn dialogs. As a result, the chat mode has taken precedence over other modes such as edit and completion. Both of these are due for depreciation soon.[9]Another example of structure being offered is the new OpenAI Assistant capability, which takes a more conversational approach.[9]

The chat mode input includes a description of the chatbot's tone, function, ambit, and other characteristics. The user and system messages are cleanly divided for each dialog turn.[9]

Output Data

The output data from the OpenAI Assistant function call is in JSON format. The structure of the JSON document is defined, as is the description of each field within it. As a result, the LLM extracts intents, entities, and strings from the user input and assigns them to different fields in the preset JSON document.[9]

The JSON creation functionality is also fascinating, however I notice a vulnerability in the JSON format. In this implementation, the output data is generated in the form of JSON, but its structure is not consistent or unaltered. A JSON document is designed to provide a uniform output of data that can be consumed by downstream systems such as APIs. So modifying the JSON structure negates the purpose of creating JSON output in the first place. Seeding is the most popular workaround for keeping JSON static.[9]

Vulnerability

When introducing structure such as JSON output, there are various considerations, including the requirement for control over how the JSON is constructed and the quality of the JSON generated.[9]Makers want to be able to create a JSON structure and then manage how and when data is segmented and assigned to it. The challenge is to include checks and validation procedures along the way, as well as to bring a level of intelligence and complexity to manage the implementation process. As a result, Haystack's implementation serves as an excellent model for future Generative AI applications.[9]

The Short Comings Of OpenAI and JSON Function Calling

The name of Function Calling is actually somewhat deceptive. If you select function calling as the OpenAI LLM output, the LLMs do not really call a function, and there is no integration with a function. The LLM simply outputs a JSON document.[9]As previously stated in this post, not only is it impossible to fully manage the structure of a JSON document, but you may want to have many JSON documents, decision nodes, or some layer of intelligence within the application.[9]

As a result, the level of simplicity portrayed in OpenAI is ideal for getting users started and developing a grasp of the fundamental principles.[9]

HayStack’s Solution

Prompt chaining is primarily sequence and dialog-turn based. And the leap to Autonomous Agents is too large, in the sense that autonomous agents go through numerous internal sequences before arriving at a final answer. So there are cost, delay, and inspectability/observability issues.[9]For Generative AI Apps (Gen-Apps) implementations, different prompt pipelines can be exposed as APIs that can be invoked along the prompt chain. This concept added flexibility to Prompt Chaining, resulting in a bridge between chaining and agents.[9]

Prompt template example[9]:

from haystack.components.builders import PromptBuilder

prompt_template = """

Create a JSON object from the information present in this passage: {{passage}}.

Only use information that is present in the passage. Follow this JSON schema, but only return the actual instances without any additional schema definition:

{{schema}}

Make sure your response is a dict and not a list.

{% if invalid_replies and error_message %}

You already created the following output in a previous attempt: {{invalid_replies}}

However, this doesn't comply with the format requirements from above and triggered this Python exception: {{error_message}}

Correct the output and try again. Just return the corrected output without any extra explanations.

{% endif %}

"""

prompt_builder = PromptBuilder(template=prompt_template)This example for Haystack shows how an application’s interaction with a LLM needs to be inspectable, observable, scaleable, while consisting of a framework to which complexity can be added.[9]

There has been much focus on prompt engineering techniques which improves LLM performance by leveraging the In-Context Learning (ICL) which includes Retrieval Augmented Generation (RAG) approaches.[9]

Least To Most Prompting

Least To Most Prompting for Large Language Models (LLMs) allows them to handle complicated reasoning.[10]

When the problem the LLM must answer is more difficult than the examples provided, you can use least to most prompting rather than chain of thought prompting.[10]

To do least to most prompting[10]:

- Decompose a complex problem into a series of simpler sub-problems.

- And subsequently solving for each of these sub-questions.

The answers to earlier subproblems make it easier to solve each one. Thus, least to most prompting is a technique that employs a gradual sequence of suggestions to reach a final conclusion.[10]

Least-to-most prompting can be used with other prompting methods, such as chain-of-thought and self-consistency. For certain activities, the two stages of least-to-most prompting can be combined to provide a single-pass prompt..[https://arxiv.org/pdf/2205.10625.pdf]

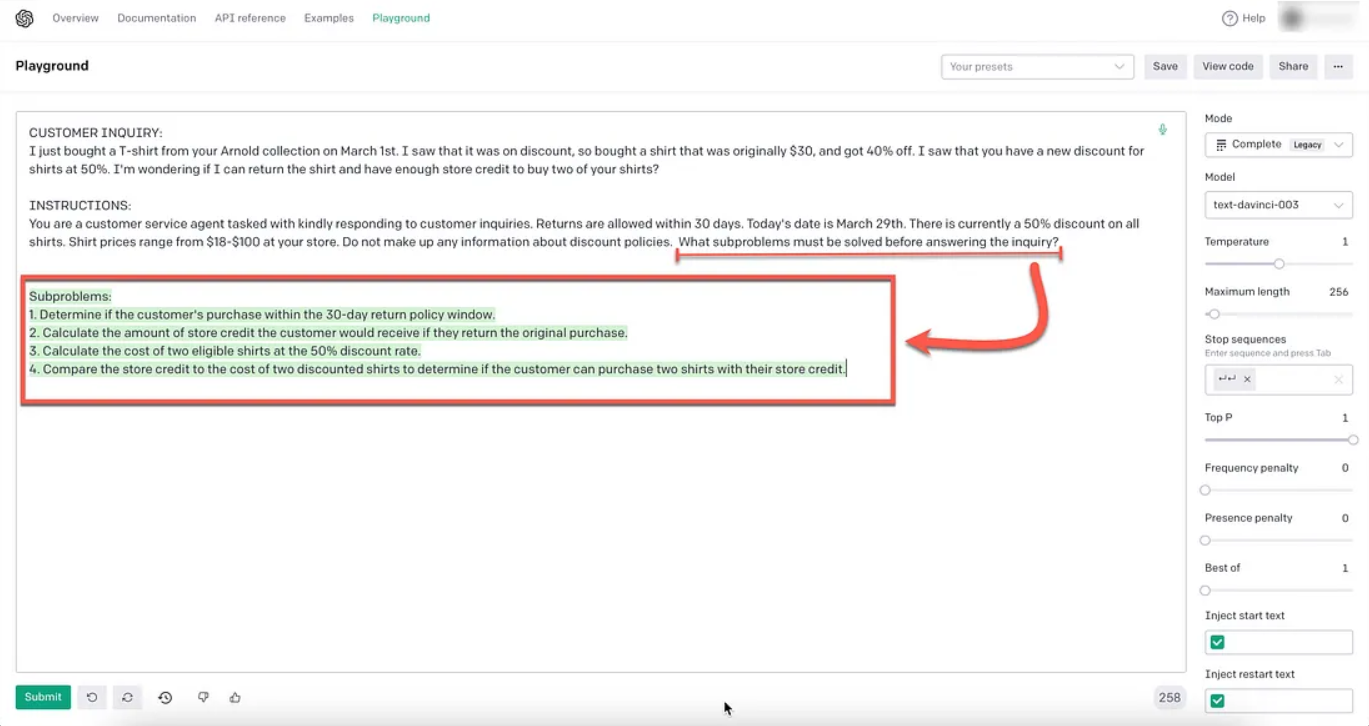

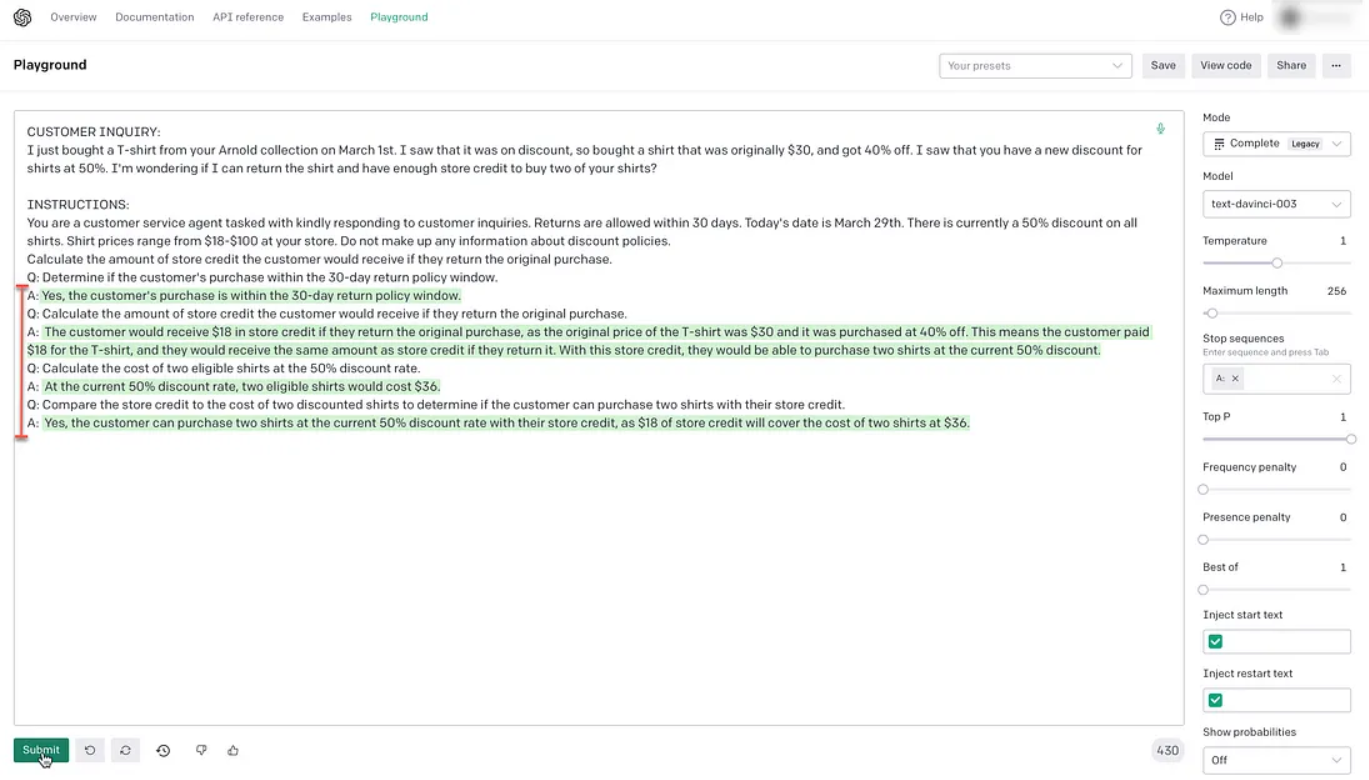

The following returns a false answer.[10]:

CUSTOMER INQUIRY:

I just bought a T-shirt from your Arnold collection on March 1st.

I saw that it was on discount, so bought a shirt that was originally $30,

and got 40% off. I saw that you have a new discount for shirts at 50%.

I'm wondering if I can return the shirt and have enough store credit to

buy two of your shirts?

INSTRUCTIONS:

You are a customer service agent tasked with kindly responding to

customer inquiries. Returns are allowed within 30 days.

Today's date is March 29th. There is currently a 50% discount on all

shirts. Shirt prices range from $18-$100 at your store. Do not make up any

information about discount policies.

Determine if the customer is within the 30-day return window.The first step is to ask the model the following question.[10]:

What subproblems must be solved before answering the inquiry?

As a result, the LLM generates four subproblems that must be answered before the instruction can be finished.[10]

The prior prompts are included for reference and serve as a few-shot training tool. The scenario presented to the LLM is unclear, yet the LLM performs an excellent job of following the procedure and arriving at the correct solution.[10]

Three caveats to add to this are:

- These three prompting approaches can be achieved using an autonomous agent. Here is an article about the Self-Ask technique, which makes use of a LangChain Agent. The usage of an Agent provides a high level of efficiency and autonomy.[10]

- The goal of these approaches is the following: if the LLM does not have a direct solution, it can utilize reasoning and deduction to use existing information to obtain the proper conclusion.[10]

- The Least-to-Most approaches connect these subtasks together. However, chaining is vulnerable to cascading, also known as Prompt Drift. The idea that an error or inaccuracy in the LLM gets passed from prompt to prompt in the chain.[10]

Supervised Chain-Of-Thought Reasoning Mitigates LLM Hallucination

Implementing natural language reasoning enhanced Large Language Model (LLM) outcomes greatly, resulting in less halicunation. [11]

Detecting & Mitigating Hallucination

There are three methods to mitigating hallucination[11]:

- Contextual References:

Even for Natural Language Models (LLMs), a contextual reference is required to improve accuracy and avoid false resolves. Even a modest bit of contextual information embedded in a prompt can greatly improve the accuracy of automated queries. [11]

2. Generative Prompt Pipeline:

Prompt Pipelines augment prompt templates by automatically adding contextual reference material into each prompt. [11]

3. Natural Language Reasoning:

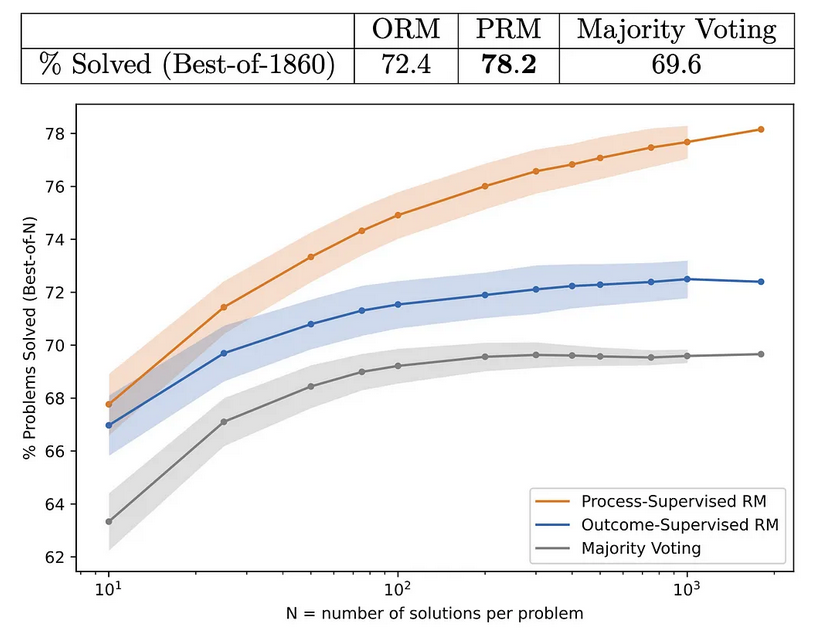

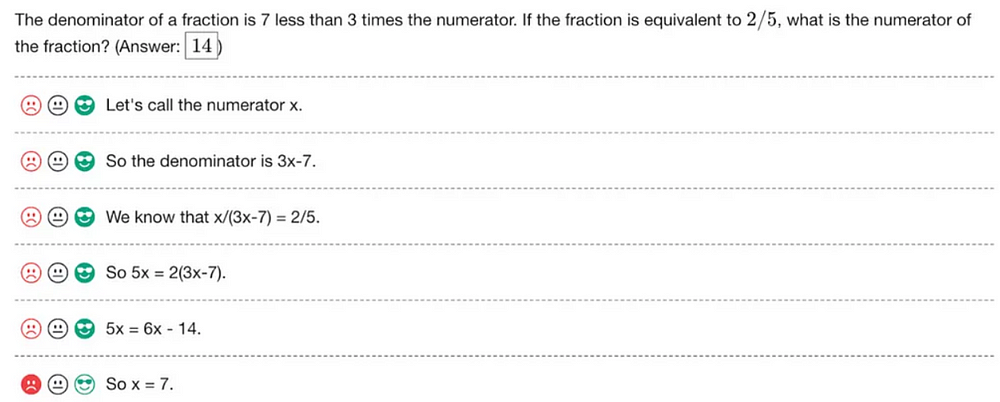

For starters, chain-of-thought reasoning enhances the overall performance and accuracy of LLMs. [11]The graph below shows that process monitoring significantly improves accuracy when compared to outcome supervision. Process supervision also helps the model adhere to a chain-of-thought reasoning pattern, resulting in interpretable reasoning. [11]

OpenAI discovered that there are no straightforward ways to automate process supervision. As a result, they depended on human data labellers to carry out process monitoring, specifically labeling the accuracy of each step in model-generated solutions.[11]

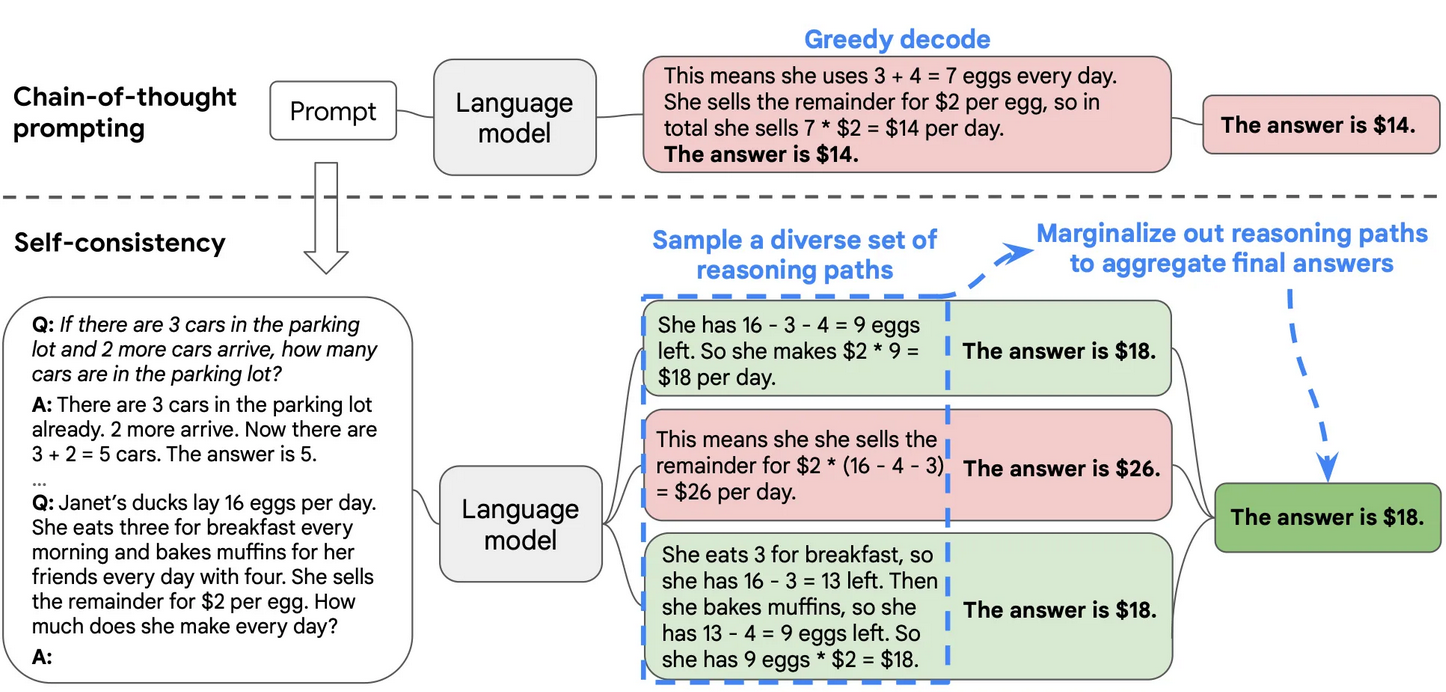

Self-consistency for Chain Prompting

The assumption of self-consistency is based on the non-deterministic nature of LLMs, which generally create multiple reasoning arguments when confronted with a problem.[12]The study describes Self-Consistency Prompting as a straightforward and effective method for improving accuracy in a variety of arithmetic and common-sense reasoning tasks across four big language models of varied dimensions. The research shows clear accuracy gains; however, the process of selecting multiple sets of reasoning paths and marginalizing aberrations in reasoning to arrive at an aggregated final answer will necessitate the use of logic and some amount of data processing.[12]

1. Prompt a linguistic model with chain-of-thought (CoT) prompting.

2. To generate a wide range of reasoning paths, replace the "greedy decode" in CoT prompting with sampling from the decoder of the language model; and

3. Marginalize the reasoning paths and aggregate by selecting the most consistent response from the final answer set.

Chain-Of-Verification (CoVe)

A recent study identified a new strategy (CoVe) to overcome LLM hallucination using a novel use of rapid engineering.[13]

“We find that independent verification questions tend to provide more accurate facts than those in the original long-form answer, and hence improve the correctness of the overall response.”- [https://arxiv.org/abs/2309.11495]

The Chain-of-Verification (CoVe) technique thus accomplishes four main steps:- Generate Baseline Response: For a given query, use the LLM to generate the response.[13]

- Plan Verifications: Given the query and baseline response, create a list of verification questions that may be used to self-analyze whether there are any errors in the original response.[13] During verifications, answer each question in turn and compare it to the original response to ensure consistency and accuracy.[13]

- Final Verified answer: Using the detected discrepancies (if any), create a revised answer that incorporates the verification results.[13]

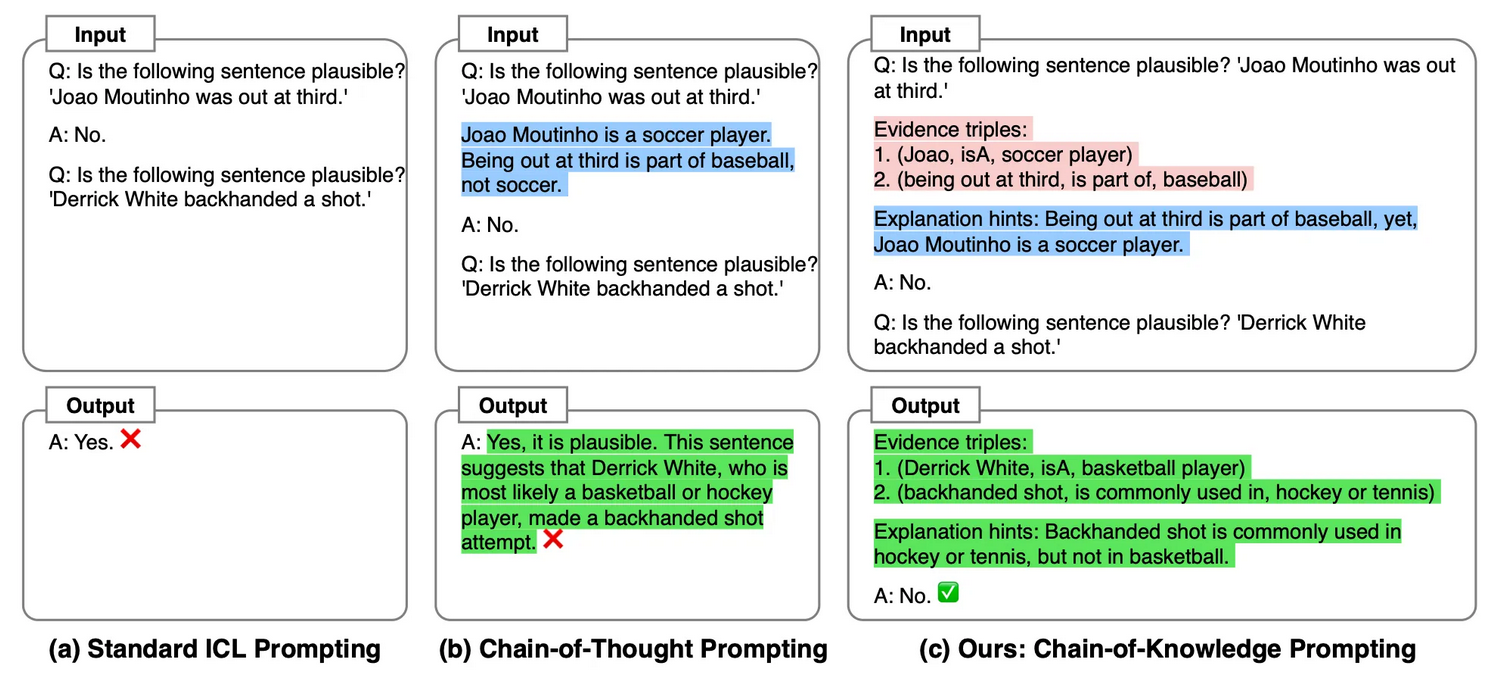

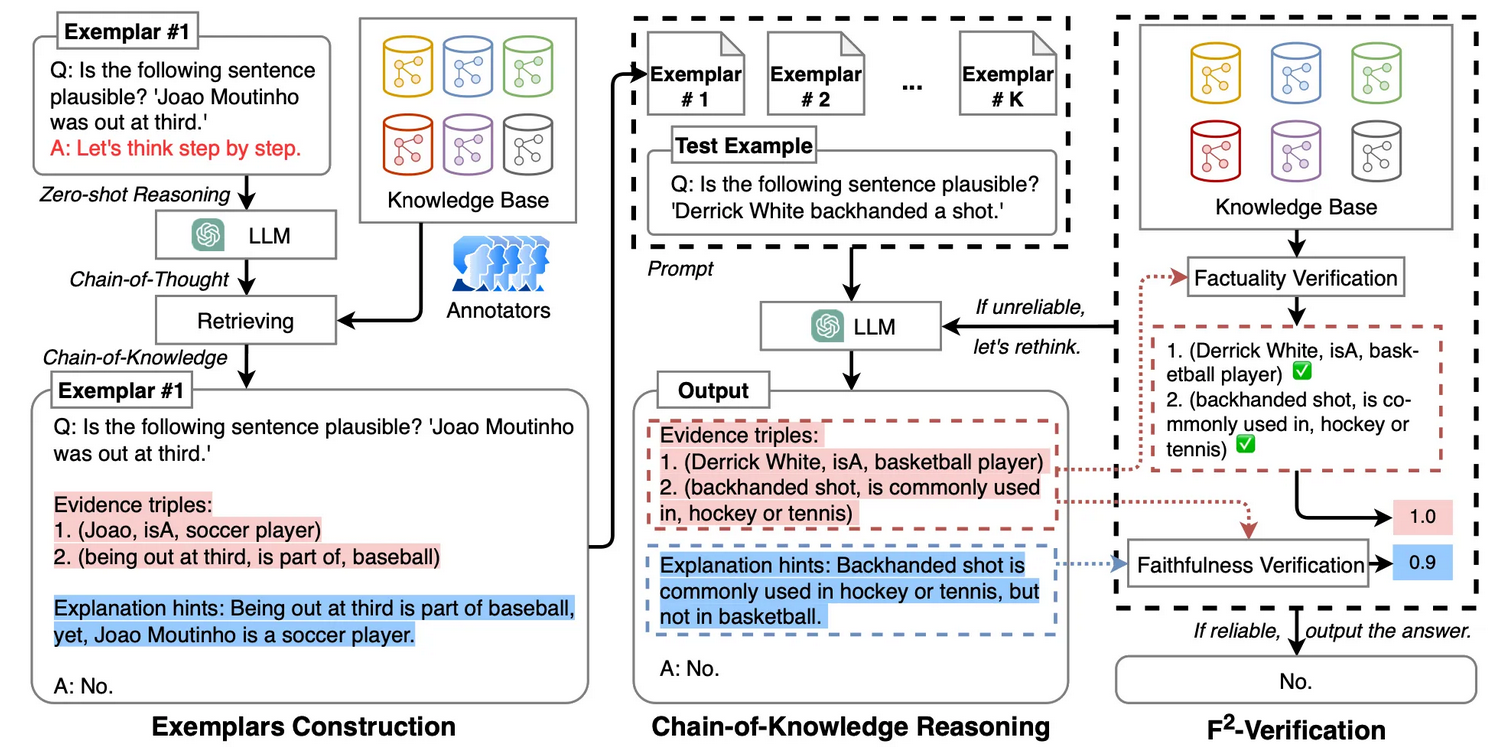

Chain-of-Knowledge Prompting

Chain-Of-Knowledge (CoK) is a new prompting strategy that is ideal for difficult reasoning problems. The difficulty with CoK prompting is that it requires sophistication when used in a production context.[14]Creating a working example of CoK is simple. The main issue of CoK prompting is accurately creating the Evidence Triples or Facts (CoK-ET).[14]

The study proposes a retrieval tool with access to a knowledge base. As a result, CoK can be viewed as an enabler for RAG implementations.[14]

Another problem is obtaining annotated CoK-ET that more accurately expresses the textual logic.[14]CoK is another study that demonstrates the necessity of In-Context Learning (ICL) of LLMs for inference.[14]

A recent study contends that Emergent Abilities are not hidden or unpublished model capabilities waiting to be discovered, but rather new approaches to In-Context Learning that are being developed.[14]

CoK is dependent on the quality of the data retrieved, as well as the importance of human annotation.[14]

There is also tremendous potential for expediting human-in-the-loop instruction adjustment with an AI-Accelerated data-centric studio.[14]

The CoK technique also incorporates a two-step verification process, which comprises factual and fidelity verification.[14]

How to do Chain-of-Knowledge Prompting

CoK-ET is a list of structure facts that contains the overall reasoning evidence and serves as a link between the question and the answer. CoK-EH is the explanation for this evidence.[14]

CoK can be viewed as a framework for creating correct and well-formed prompts for inference. As shown in the image above, CoK can be readily played with in a prompt playground by manually crafting prompts.[14]

It is clear that this strategy is only effective when a very simple prompt is required.[14]

Considerations with Chain-of-Knowledge Prompting

Inference time and token count can have a severe impact on both cost and user experience.[14]This approach is less opaque than gradient-based approaches, and transparency exists throughout the process, allowing for easy inspection at any stage of the process, as well as insights into what data was input and produced.[14]

Elements of CoK can be utilized without completing the entire strategy.[14]

CoK can also enable businesses to use smaller models due to the high degree of contextually relevant data.[14]

LLM Generated Self-Explanations

Recent research has discovered that, even when an LLM is educated on specific data, it fails to detect the relationship between previous LLM taught knowledge and the context at inference.[15]Hence the significance of what some term the Chain of X approach. When the LLM is asked to break a problem into a chain of thinking, it performs better at solving the task and recalling previously trained knowledge. LLMs can answer a question and explain how the conclusion was reached. When prompted, the LLM can disassemble their answer.[15]

The paper addressed two approaches of LLMs explaining their answer:

- Making a prediction and then explaining it.[15]

- Generating an explanation and using it to make a prediction.[15]

LLM-Generated Self-Explanations

The research discusses the systematic investigation of LLM-generated self-explanation in the context of sentiment analysis. This study focuses on unique prompting tactics and strategies.[15]

OpenAI review example[15]:

from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(

model="gpt-3.5-turbo",

messages=[

{

"role": "system",

"content": "You are a creative and intelligent movie review analyst, whose purpose is to aid in sentiment analysis of movie reviews. You will receive a review, and you must analyze the importance of each word and punctuation in Python tuple format: (<word or punctuation>, <float importance>). Each word or punctuation is separated by a space. The importance should be a decimal number to three decimal places ranging from -1 to 1, with -1 implying a negative sentiment and 1 implying a positive sentiment. Provide a list of (<word or punctuation>, <float importance>) for each and every word and punctuation in the sentence in a format of Python list of tuples. Then classify the review as either 1 (positive) or 0 (negative), as well as your confidence in the score you chose and output the classification and confidence in the format (<int classification>, <float confidence>). The confidence should be a decimal number between 0 and 1, with 0 being the lowest confidence and 1 being the highest confidence.\nIt does not matter whether or not the sentence makes sense. Do your best given the sentence.\nThe movie review will be encapsulated within <review> tags. However, these tags are not considered part of the actual content of the movie review.\nExample output:\n[(<word or punctuation>, <float importance>), (<word or punctuation>, <float importance>), ... ] (<int classification>, <float confidence>)"

},

{

"role": "user",

"content": "<review> Offers that rare combination of entertainment and education . <review>"

},

{

"role": "assistant",

"content": "[('Offers', 0.500), ('that', 0.000), ('rare', 0.300), ('combination', 0.300), ('of', 0.000), ('entertainment', 0.800), ('and', 0.000), ('education', 0.500), ('.', 0.000)] (1, 0.800)"

}

],

temperature=1,

max_tokens=256,

top_p=1,

frequency_penalty=0,

presence_penalty=0

)Prediction To Explanation (making a prediction and then explaining it)

The prompt below shows an example of the P-E configuration. Notice how the forecast comes first, followed by an explanation.[15]

from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(

model="gpt-3.5-turbo",

messages=[

{

"role": "system",

"content": "You are a creative and intelligent movie review analyst, whose purpose is to aid in sentiment analysis of movie reviews. A review will be provided to you, and you must classify the review as either 1 (positive) or 0 (negative), as well as your confidence in the score you chose. The confidence should be a decimal number between 0 and 1, with 0 being the lowest confidence and 1 being the highest confidence. Output this in the Python tuple format (<int classification>, <float confidence>).\nThen, analyze how important every single word and punctuation token in the review was to your classification. The importance should be a decimal number to three decimal places ranging from -1 to 1, with -1 implying a negative sentiment and 1 implying a positive sentiment. Provide a list of (<word or punctuation>, <float importance>) for each and every word and punctuation token in the sentence in a format of Python list of tuples. Each word or punctuation is separated by a space.\nIt does not matter whether or not the sentence makes sense. Do your best given the sentence.\nThe movie review will be encapsulated within <review> tags. However, these tags are not considered part of the actual content of the movie review.\nExample output:\n(<int classification>, <float confidence>)\n[(<word or punctuation>, <float importance>), (<word or punctuation>, <float importance>), ... ]"

},

{

"role": "user",

"content": "<review> A film that takes you inside the rhythms of its subject : You experience it as you watch . <review>"

},

{

"role": "assistant",

"content": "(1, 0.8)\n[('A', 0.2), ('film', 0.3), ('that', 0.2), ('takes', 0.2), ('you', 0.4), ('inside', 0.2), ('the', 0.1), ('rhythms', 0.2), ('of', 0.2), ('its', 0.1), ('subject', 0.1), (':', 0.1), ('You', 0.4), ('experience', 0.3), ('it', 0.2), ('as', 0.2), ('watch', 0.2), ('.', 0.1)]"

}

],

temperature=1,

max_tokens=256,

top_p=1,

frequency_penalty=0,

presence_penalty=0

)For anyone interested, here are the results obtained using Meta's llama7b-v2-chat.[15]

The prompt input[15]:

System: You are a creative and intelligent movie review analyst, whose purpose is to aid in sentiment analysis of movie reviews. A review will be provided to you, and you must classify the review as either 1 (positive) or 0 (negative), as well as your confidence in the score you chose. The confidence should be a decimal number between 0 and 1, with 0 being the lowest confidence and 1 being the highest confidence. Output this in the Python tuple format (<int classification>, <float confidence>).

Then, analyze how important every single word and punctuation token in the review was to your classification. The importance should be a decimal number to three decimal places ranging from -1 to 1, with -1 implying a negative sentiment and 1 implying a positive sentiment. Provide a list of (<word or punctuation>, <float importance>) for each and every word and punctuation token in the sentence in a format of Python list of tuples. Each word or punctuation is separated by a space.

It does not matter whether or not the sentence makes sense. Do your best given the sentence.

The movie review will be encapsulated within <review> tags. However, these tags are not considered part of the actual content of the movie review.

Example output:

(<int classification>, <float confidence>)

[(<word or punctuation>, <float importance>), (<word or punctuation>, <float importance>), ... ]

User: <review> A film that takes you inside the rhythms of its subject : You experience it as you watch . <review>

Assistant:And the LLMs response[15]:

Understood! I'm ready to analyze the movie review you provided. Here are the results:

(<int classification>, <float confidence>) = (0, 0.8) - Classification: Negative

Here are the importance scores for each word and punctuation token in the review:

[(<word or punctuation>, <float importance>), (the, -0.6), (film, 0.4), (that, -0.5), (takes, 0.3), (you, -0.2), (inside, 0.1), (the, -0.6), (rhythms, 0.3)]

Explanation:

The word "film" has a low importance score of -0.6 because it does not convey much sentiment towards either positive or negative emotions in the context of the review.

The word "that" has a low importance score of -0.5 because it is a transitional word that does not significantly impact the overall sentiment of the review negatively or positively . Its presence does not change how we classify this review as entirely negative . However , its absence might affect our classification; therefore we give it some weight . This implies that if more instances like this appear throughout a text , their impact will be less than if they were part of an entirely different kind of text . For example , "the film takes you inside" would have an opposite meaning than just saying "it takes you inside".) [1][3] )] )] ) ]]. * The phrase "You experience it as you watch" has an important score of 1 because It conveys positive emotion and could be interpreted as evidence that viewers will find watching this motion picture pleasurable and engaging .[2][4]) [5][6]; hence why we rank important words higher than unimportant phrases when deciding on their overall sentimental value within given texts). As such , we consider these particular elements crucial when evaluating whether something falls into one category versus another based solely upon how others might interpret them without additional context outside those given here.] Finally – although there may be other factors at play–by focusing solely on these particular pieces here allows us to better understand what makes certain sentences/phrases stand out amongst others!LLM models are trained using step-by-step thought data to ensure interoperability and correctness. For example, when asked to answer a math issue, models frequently provide comprehensive procedures, even when not explicitly taught to do so. Similarly, when asked to assess the sentiment of a movie or book review, LLMs frequently justify their conclusion with supporting evidence, such as emphasizing sentiment-laden terms or explaining sarcasm in the text.[15]

The two major findings of this study are[15]:

- Diversity in Explanations

- The study shows that we need new tools to identify better explanations, and that current evaluation methods may be insufficient to tell us which explanations are genuinely good or terrible.[15]

- The problem is measuring and evaluating explanations at scale; yet, breaking down responses for human examination remains useful.[15]

- The researchers examined several explanations and reasons generated by an LLM in both a one-shot and a few-shot setting.[15]

- When it comes to accuracy and faithfulness, no single explanation outperformed the rest.[15]

- Current methodologies may not be adequate, and the study proposes new approaches to discovering better explanations.[15]

2. Consistency in Model Predictions

- The model's values for its predictions, as well as the importance it attributed to specific terms.[15]

- The model produced values such as 0.25, 0.67, and 0.75, demonstrating no clear preference for certain numbers.[15]

- The closeness in explanation quality could be due to the model's lack of fine-grained variations in how confident it is in its predictions or the significance of particular phrases.[15]

- As a result, current evaluation criteria may be insufficient to discern between good and terrible explanations.[15]

- This is consistent with recent results that LLMs are becoming less opinionated and more neutral, hence striving to be less offending.[15]

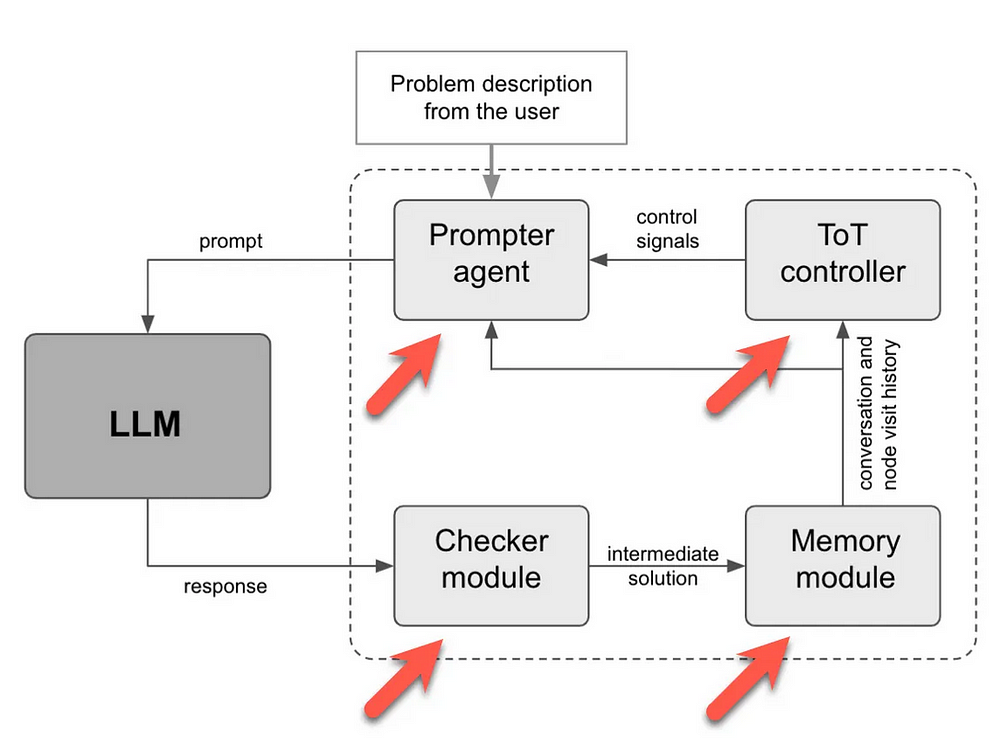

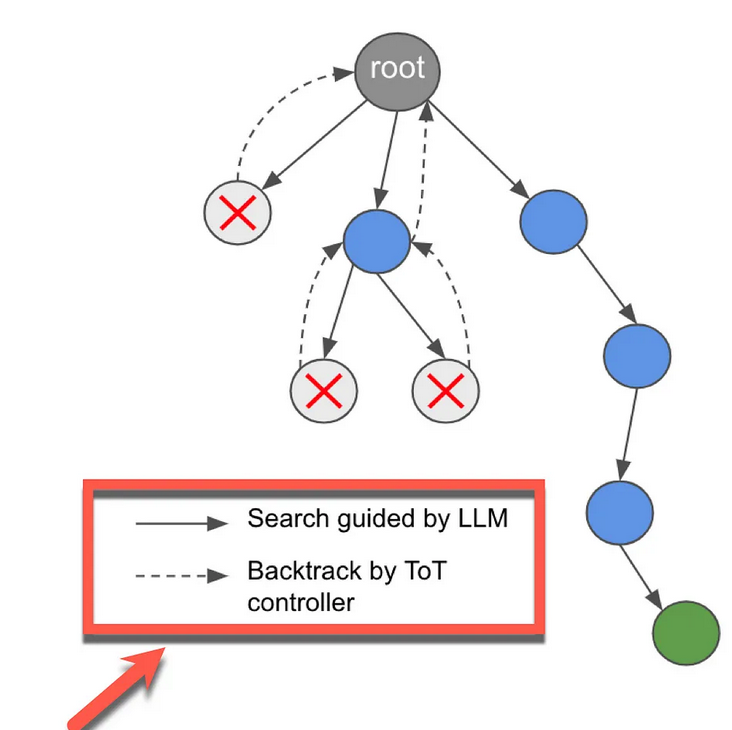

LLM Guided Tree-of-Thought

The Three-of-Thought (ToT) technique is inspired by the way human minds tackle hard reasoning tasks through trial and error. In this approach, the mind explores the solution space via a tree-like thought process, allowing for retracing as needed.[16]As shown in the image below. In the LLM model Guided Tree-of-Thought approach, the LLM remains the autonomous agent's backbone. However, the LLM is enhanced with the following components [16]:

When solving a problem, the modules have a multi-round conversation with the LLM. This is typical of LLM-based autonomous agents, which generate a chain on the fly and execute it sequentially while polling the LLM several times.[16]

The paper identifies a vulnerability in that LLM generation is predicated on the preceding sequence, with backward editing being missed. However, when we humans solve a problem, we most likely go back to prior iterations if the derived step is erroneous. This strategy of retracing eliminates the possibility of the LLM reaching an inconclusive or no-answer circumstance.[16]

This technique solves an issue using a multi-round interaction between the LLM and the prompter agent. [https://arxiv.org/pdf/2305.08291.pdf]

Example of ToT implementation in Langchain[16]:

from langchain_experimental.tot.base import ToTChain

class MyChecker(ToTChecker):

def evaluate(self, problem_description: str, thoughts: Tuple[str, ...] = ()) -> ThoughtValidity:

last_thought = thoughts[-1]

clean_solution = last_thought.replace(" ", "").replace('"', "")

regex_solution = clean_solution.replace("*", ".").replace("|", "\\|")

if sudoku_solution in clean_solution:

return ThoughtValidity.VALID_FINAL

elif re.search(regex_solution, sudoku_solution):

return ThoughtValidity.VALID_INTERMEDIATE

else:

return ThoughtValidity.INVALID

tot_chain = ToTChain(llm=llm, checker=MyChecker(), k=30, c=5, verbose=True, verbose_llm=False)

tot_chain.run(problem_description=problem_description)Resources

[1] Cobus Greyling, (8 February 2024), A Benchmark for Verifying Chain-Of-Thought:

A Chain-of-Thought is only as strong as its weakest link; a recent study from Google Research created a benchmark for…cobusgreyling.medium.com

[2] Cobus Greyling, (8 February 2024), Jan 24, 2024:

Traditional CoT comes at a cost of increased output token usage, CCoT prompting is a prompt-engineering technique which…cobusgreyling.medium.com

[3] Cobus Greyling, (Jan 8, 2024), Random Chain-Of-Thought For LLMs & Distilling Self-Evaluation Capability:

Here I discuss the five emerging architectural principles for LLM implementations & how curation & enrichment of…cobusgreyling.medium.com

[4] Cobus Greyling, (Feb 12, 2024), Beyond Chain-of-Thought LLM Reasoning:

This approach can be implemented on a prompt level and does not require any dedicated frameworks or pre-processing.cobusgreyling.medium.com

[5] Cobus Greyling, (Jan 29, 2024), Chain-of-Symbol Prompting (CoS) For Large Language Models:

LLMs need to understand a virtual spatial environment described through natural language while planning & achieving…cobusgreyling.medium.com

[6] Cobus Greyling, (Jan 12, 2024), Chain Of Natural Language Inference (CoNLI):

Hallucination is categorised into subcategories of Context-Free Hallucination, Ungrounded Hallucination &…cobusgreyling.medium.com

[7] Cobus Greyling, (Jan 5, 2024), Active Prompting with Chain-of-Thought for Large Language Models:

By using AI accelerated human annotation this framework removes uncertainty and introduces reliability via a…cobusgreyling.medium.com

[8] Cobus Greyling, (15 December 2023), Prompt Pipelines:

LLM-based applications can take the form of autonomous agents, prompt chaining or prompt pipelines. These approaches…cobusgreyling.medium.com

[9] Cobus Greyling, (18 December 2023), Intelligent & Programable Prompt Pipelines From Haystack:

Considering LLM-based Generative Apps architectures, the leap from prompt chaining to autonomous agents are too big…cobusgreyling.medium.com

[10] Cobus Greyling, (Jul 27, 2023), Least To Most Prompting:

Least To Most Prompting for Large Language Models (LLMs) enables the LLM to handle complex reasoning.cobusgreyling.medium.com

[11] Cobus Greyling, (Jun 1, 2023), Supervised Chain-Of-Thought Reasoning Mitigates LLM Hallucination:

Large Language Model (LLM) results significantly improved by implementing natural language reasoning.cobusgreyling.medium.com

[12] Cobus Greyling, (Dec 4, 2023), Self-Consistency For Chain-Of-Thought Prompting:

The paper on Self-Consistency Prompting was published 7 March 2023, as an improvement on Chain-Of-Thought Prompting. At…cobusgreyling.medium.com

[13] Cobus Greyling, (Oct 5, 2023), Chain-Of-Verification Reduces Hallucination in LLMs:

A recent study highlighted a new approach (CoVe) to address LLM hallucination via a novel implementation of prompt…cobusgreyling.medium.com

[14] Cobus Greyling, (Nov 22, 2023), Chain-Of-Knowledge Prompting

Chain-Of-Knowledge (CoK) is a new prompting technique which is well suited for complex reasoning tasks.cobusgreyling.medium.com

[15] Cobus Greyling, (Dec 21, 2023), LLM-Generated Self-Explanations:

The main contribution of this recently published study is an investigation into the strengths and weaknesses of LLM’s…cobusgreyling.medium.com

[16] Cobus Greyling, (Sep 13, 2023), LangChain, LangSmith & LLM Guided Tree-of-Thought:

The Three-of-Thought (ToT) technique takes inspiration from the way human minds solve complex reasoning tasks by trial…cobusgreyling.medium.com

0 Comments