https://cbarkinozer.medium.com/b%C3%BCy%C3%BCk-eylem-modelleri-lam-nedir-2abb6ce32674

Let’s learn about the latest generative AI trend: Large Action Models.

Action: A step, process, or function that an agent performs in a given state.

Function Calling: The process of matching the incoming input against the descriptions of the available functions, selecting the best match, and calling that function. It enables agents to perform actions beyond the capabilities of their underlying models.
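The selection step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular product does it: the tool names, descriptions, and the word-overlap (Jaccard) similarity are all hypothetical stand-ins. In practice, the model itself usually picks the function and produces structured arguments.

```python
import re
from typing import Callable


# Hypothetical tools; a real agent would call external APIs here.
def get_weather(city: str) -> str:
    return f"Weather report requested for {city}"


def send_email(recipient: str) -> str:
    return f"Email drafted for {recipient}"


# Hypothetical registry: tool name -> (natural-language description, function).
TOOLS: dict[str, tuple[str, Callable[[str], str]]] = {
    "get_weather": ("get the current weather forecast for a city", get_weather),
    "send_email": ("send an email message to a recipient", send_email),
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Word-overlap similarity, an assumed stand-in for a learned similarity."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def select_tool(user_input: str) -> str:
    """Return the name of the tool whose description best matches the input."""
    query = tokenize(user_input)
    return max(TOOLS, key=lambda name: jaccard(query, tokenize(TOOLS[name][0])))


def call_tool(user_input: str, argument: str) -> str:
    """Select the best-matching tool and call it with the given argument."""
    _, func = TOOLS[select_tool(user_input)]
    return func(argument)
```

For example, `select_tool("What is the weather forecast in Paris?")` picks `get_weather`, because that description shares the most words with the request.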

What is a Large Action Model?

The large action model (LAM) is a groundbreaking AI paradigm that enables systems to understand natural language and act on behalf of their users. It learns from human demonstrations by observing how we use various programs and interfaces, and then replicates those interactions consistently and quickly. [1]

The large action paradigm is appealing because it removes the need to download and use numerous apps on our devices; the model performs actions in those apps for us.[1]

A LAM might take the form of a full-fledged operating system that runs on a custom-built device and provides access to and control over any web app. It can also handle complex activities that span multiple stages and programs without asking you to define every detail or issue additional instructions. LAMs aim to be more proactive, intelligent, and efficient than existing voice assistants.[1]

LAMs act as independent ‘agents’ capable of performing tasks and making judgments. These models, created for specific applications and human behaviors, use neuro-symbolic programming to easily replicate a wide range of actions, eliminating the need for early demonstrations. LAMs interact with the real world by connecting to other systems, such as IoT devices, which allows them to carry out physical tasks, operate devices, retrieve data, and modify information. They can comprehend complex human goals stated in natural language, adapt to changing circumstances, and work with other LAMs. LAMs have important applications in healthcare, finance, and automotive, where they can help with diagnostics, risk measurement, and the development of autonomous vehicles.[2]

LAMs became a buzzword after Rabbit unveiled its LAM device, the R1, at CES 2024. The Rabbit R1 (priced at $199) is a small, palm-sized device that can execute basic activities better than a smartphone, hence improving the digital experience. The Rabbit R1, which runs Rabbit OS and has a LAM, serves as an AI assistant that captures photographs and videos and communicates with users intuitively. It can edit, save, and send spreadsheets to the recipients you designate. It can also manage your social media accounts by creating and posting the content you want across many networks. LAMs are poised to play an important role in the future of AI by converting language models into real-time action partners. Real-world applications like Rabbit demonstrate the power of LAMs to transform human interaction and affect the future of AI.[2]

LAM vs LLM

Pros of LAMs:

- Can interpret language and take action in the real world.[6]

- Physical interactions and experiences promote learning.[6]

- Applies to a variety of tasks that require both language and action.[6]

Cons of LAMs:

- More difficult to train than LLMs.[6]

- Training and deployment may demand additional computational resources.[6]

- Limited reasoning and planning abilities in complex situations.[6]

Task Execution

LLMs are typically designed to produce human-like text from input data. They excel in natural language comprehension and text generation but are incapable of carrying out tasks or actions. LAMs, on the other hand, are designed to go beyond text generation. They act as autonomous agents, able to complete tasks, make decisions, and interact with the physical world.[2]

Autonomy

LLMs generate text answers based on patterns learned during training, but they are unable to perform actions or make decisions beyond text production. LAMs are capable of independent behavior. They can communicate with other systems, control devices, access data, and modify information, allowing them to execute complex tasks without requiring human intervention.[2]

Integration with External Systems

LLMs typically operate within a closed system, with no direct integration with other systems or devices. LAMs interact with the physical world by establishing connections with other systems, such as IoT devices. This enables them to carry out physical actions and interact with their environment in ways that are not restricted to textual communication.[2]

Goal-Oriented Interaction

LLMs are primarily concerned with producing logical and contextually relevant text based on input cues, but they do not take a goal-oriented approach to tasks. LAMs are designed to interpret complex human goals expressed in natural language and translate them into executable tasks. They can respond in real time and adapt to changing situations.[2]
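To make "translating a goal into executable tasks" more concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the keyword-based `plan` function, the task names, and the placeholder flight code `FL-001` are hypothetical, and a real LAM would infer the plan with a model rather than with keyword rules.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Task:
    name: str
    run: Callable[[dict], None]  # mutates a shared state dictionary


def plan(goal: str) -> list[Task]:
    """Hypothetical keyword-based planner: map a goal to ordered tasks."""
    text = goal.lower()
    tasks: list[Task] = []
    if "flight" in text:
        # "FL-001" is placeholder data, not a real search result.
        tasks.append(Task("search_flights", lambda s: s.update(flight="FL-001")))
        tasks.append(Task("book_flight", lambda s: s.update(booked=s["flight"])))
    if "calendar" in text:
        tasks.append(
            Task(
                "add_calendar_entry",
                lambda s: s.update(calendar=f"Trip on {s.get('booked', 'unbooked')}"),
            )
        )
    return tasks


def execute(goal: str, state: Optional[dict] = None) -> tuple[dict, list[str]]:
    """Run each planned task in order and return the final state and a log."""
    state = {} if state is None else state
    log: list[str] = []
    for task in plan(goal):
        task.run(state)
        log.append(task.name)
    return state, log
```

Calling `execute("Book a flight to Berlin and add it to my calendar")` runs three tasks in order, and each later task reads what the earlier ones wrote into the shared state.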

Applications

LLMs are commonly used for natural language understanding, text generation, and other language-related activities. In contrast, LAMs are employed in a range of industries, including healthcare, finance, and automotive, where their capacity to complete tasks has practical consequences. LAMs, for example, can aid with diagnostics, risk assessment, and potentially the operation of autonomous vehicles.[2]

In short, while LLMs excel at language-related activities and text generation, LAMs go above and beyond by combining language competency with the ability to complete tasks and make autonomous judgments, resulting in a significant advancement in the field of artificial intelligence.[2]

Challenges and the Birth of LAMs

The transition to natural language-driven interfaces has been hampered by a shortage of application programming interfaces (APIs) from major service providers. To solve this problem, the rabbit inc. team used neuro-symbolic programming, which allowed them to model user interactions with programs directly. This approach removes the need for intermediate representations such as raw text, rasterized graphics, or tokenized sequences.[4]

Traditional neural language models struggle to grasp the structured nature of human-computer interactions in applications. The LAM technique, based on a hybrid neuro-symbolic model, solves this problem by combining the advantages of symbolic algorithms and neural networks.[4]

Learning Actions by Demonstration

The LAM method learns actions through imitation, also known as learning by demonstration. It observes human interactions with interfaces and mimics them consistently and quickly. Unlike black-box models, LAM’s “recipe” is visible, allowing technically skilled people to examine and understand its inner workings. LAM gradually accumulates knowledge from demonstrations, resulting in a conceptual blueprint of the underlying services that applications provide.[4]
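The idea of a visible "recipe" learned from a demonstration can be illustrated with a toy example. This is only a sketch under stated assumptions and does not reflect Rabbit's actual implementation: the `Step` structure, the UI element names, and the slot-substitution rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    action: str      # e.g. "click" or "type"
    target: str      # hypothetical UI element identifier
    value: str = ""  # text entered, if any


def learn_recipe(demo: list[Step], slots: dict[str, str]) -> list[Step]:
    """Turn one recorded demonstration into a reusable recipe.

    `slots` maps a concrete demonstrated value to a parameter name, so that
    value is replaced by a {placeholder} in the recipe.
    """
    recipe = []
    for step in demo:
        if step.value in slots:
            value = "{" + slots[step.value] + "}"
        else:
            value = step.value
        recipe.append(Step(step.action, step.target, value))
    return recipe


def replay(recipe: list[Step], **params: str) -> list[str]:
    """Fill in the placeholders and return the concrete actions to perform."""
    return [
        f"{s.action} {s.target} {s.value.format(**params)}".strip()
        for s in recipe
    ]


# A human searches for "Paris" once; the recipe then works for any destination.
demo = [
    Step("click", "search_box"),
    Step("type", "search_box", "Paris"),
    Step("click", "search_button"),
]
recipe = learn_recipe(demo, {"Paris": "destination"})
```

Because the recipe is just a list of steps, anyone can print and inspect it, and `replay(recipe, destination="Tokyo")` reuses it with a new value.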

Responsible and Reliable AI Execution

LAM does not work in isolation; it requires platforms to schedule and manage its routines effectively. Furthermore, ethical AI implementation requires that LAM interact with programs in a human-like and respectful manner. The Rabbit Research Team created a dedicated cluster of virtualized environments for testing and production that prioritizes security and scalability.[4]

Outlook and Future Prospects

The development of Large Action Models marks a significant step toward more intuitive and practical natural language-powered systems and gadgets. The Rabbit Research Team’s commitment to responsible deployment aims to ensure that LAM produces behaviors that are efficient and indistinguishable from human behavior, making it a promising tool for the future of human-machine interactions.[4]

Use Cases of LAMs

1. Healthcare:

- Diagnostics: LAMs can analyze medical data, such as imaging scans, to help diagnose diseases.[2]

- Treatment Strategy: LAMs can make individualized therapy recommendations based on patient data and medical knowledge.[2]

2. Finance:

- Risk Measurement: LAMs can identify and analyze financial risks, offering valuable information for investment decisions.[2]

- Fraud Detection: LAMs can detect patterns indicative of fraudulent activity in financial transactions.[2]

3. Automotive:

- Self-Driving Vehicles: LAMs can operate and navigate self-driving vehicles while making real-time judgments based on environmental data.[2]

- Vehicle Safety Systems: LAMs can improve safety features by processing sensor data and taking preventive measures.[2]

4. Education:

- Personalized Learning: LAMs can customize educational content and teaching strategies according to each student’s performance and needs.[2]

- Language Translation: LAMs can help translate educational materials into multiple languages.[2]

5. Customer Service:

- Automated Support: LAMs can offer automated customer service by interpreting and responding to user inquiries.[2]

- Issue Resolution: LAMs can diagnose issues and guide customers through the resolution process.[2]

6. Home Automation:

- Smart Home Control: LAMs can manage smart home equipment, adjusting temperature, lighting, and security systems in response to user preferences.[2]

- Virtual Assistants: LAMs can function as sophisticated virtual assistants by setting reminders, sending messages, and managing schedules.[2]

7. Manufacturing:

- Quality Control: LAMs can use visual data from production processes to detect flaws and ensure product quality.[2]

- Supply Chain Optimization: LAMs can optimize supply chain processes by evaluating data and recommending efficiency improvements.[2]

8. Research and Development:

- Data Analysis: LAMs can handle massive datasets, extract relevant insights, and help with research projects.[2]

- Innovation Support: LAMs can produce ideas and recommendations for innovation based on input parameters.[2]

9. Entertainment:

- Content Creation: LAMs can help you generate creative content such as writing, art, and music.[2]

- Interactive Storytelling: LAMs can provide dynamic and interactive storytelling experiences.[2]

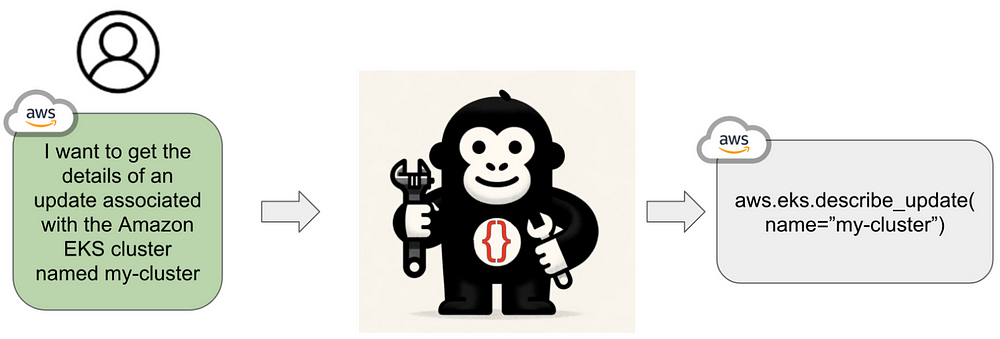

Open Source Large Action Models

- Gorilla: Large Language Model Connected with Massive APIs (Project, GitHub, Paper, HF Model v1) [6]

- ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs (Paper, GitHub, HF Model v1, HF Model v2) [6]

- Empowering LLM to use Smartphone for Intelligent Task Automation (Project, Paper, GitHub) [6]

- MetaTool Benchmark: Deciding Whether to Use Tools and Which to Use (Paper, GitHub) [6]

- T-Eval: Evaluating the Tool Utilization Capability Step by Step (Project, Paper, GitHub, Dataset, Leaderboard) [6]

The Road Ahead

There’s no doubt that LAMs will become incredibly adept at the kind of fluency and communication required in many of the examples above. However, it is not guaranteed that they will perform predictably, effectively, and consistently enough to be used in the real world.[7]

Trust is already a challenge for AI that generates language and visuals, but it becomes even harder when the AI takes action. The challenge of guaranteeing safety and reliability only increases when numerous LAMs collaborate. As a result, I feel it is crucial that, even when operating independently, LAMs keep people informed before taking critical actions. However far the technology advances, it should remain a tool under human control.[7]
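Keeping people informed before critical actions can be as simple as a confirmation gate in front of the action. The sketch below is a minimal illustration: the set of critical action names and the `confirm` callback are hypothetical, and a real system would need a far more careful definition of what counts as critical.

```python
from typing import Callable

# Hypothetical list of actions that must never run without approval.
CRITICAL_ACTIONS = {"send_payment", "delete_account"}


def run_action(
    name: str,
    perform: Callable[[], str],
    confirm: Callable[[str], bool],
) -> str:
    """Run `perform`, but ask the user first if the action is critical."""
    if name in CRITICAL_ACTIONS and not confirm(name):
        return f"{name}: cancelled by user"
    return perform()
```

In an interactive setting, `confirm` could be something like `lambda name: input(f"Allow {name}? [y/N] ").strip().lower() == "y"`, while non-critical actions such as setting a reminder run without a prompt.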

References

[1] Sarath Mohan, (18 January 2024), Large action model Rabbit R1:

www.linkedin.com

[2] Abhishek Singh, (24 January 2024), Large Language Models to Large Action Models — Step towards Artificial General Intelligence:

www.linkedin.com

[3] Rosemary J Thomas, PhD, (Jan 16, 2024), The Rise of Large Action Models, LAMs: How AI Can Understand and Execute Human Intentions?

medium.com

[4] Tod Kuntzelman, (12 January 2024), Beyond LLMs: The Rise of Large Action Models (LAMs) — A Paradigm Shift in Generative AI:

www.linkedin.com

[5] Ajay Verma, (Jan 15, 2024), LLM vs LAM: A Comparative Analysis:

ai.plainenglish.io

[6] tjtanaa /awesome-large-action-model , Awesome Large Action Model (LAM): Models that could help gets things done:

tjtanaa/awesome-large-action-model, github.com

[7] Silvio Savarese, Toward Actionable Generative AI:

blog.salesforceairesearch.com
