This is the Turkish summary of DeepLearning.AI's "AI Agentic Design Patterns with AutoGen" course.

Microsoft AutoGen is a multi-agent conversational framework that lets you quickly build agents with different roles, personas, tasks, and capabilities, and combine them into sophisticated AI applications using various agentic design patterns. You will learn about sequential multi-agent chats, create manager agents that coordinate the work of other agents, build custom tools for agents to use, and learn about agents that write and execute the code a task needs.

Multi-Agent Conversation and Stand-up Comedy

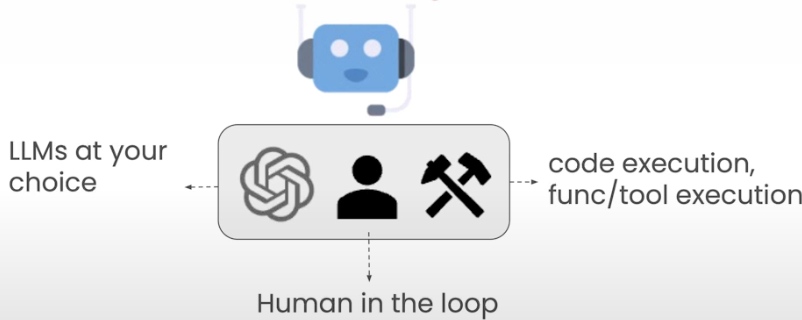

Let's create a two-agent chat scenario. AutoGen includes a built-in agent class called ConversableAgent, which unifies many kinds of agents under the same programming abstraction. The class comes with many built-in capabilities: for example, it can generate replies using a list of LLM configurations, and it can execute code, functions, and tools. It also has features for keeping a human in the loop and for detecting when a conversation should end. Each component can be turned on or off and customized to meet the needs of your application.

from utils import get_openai_api_key

OPENAI_API_KEY = get_openai_api_key()

llm_config = {"model": "gpt-3.5-turbo"}

Defining an AutoGen agent

from autogen import ConversableAgent

agent = ConversableAgent(
    name="chatbot",
    llm_config=llm_config,
    human_input_mode="NEVER",  # the agent NEVER (or ALWAYS) asks for human input
)

reply = agent.generate_reply(
    messages=[{"content": "Tell me a joke.", "role": "user"}]
)
print(reply)

# generate_reply only sees the messages passed to it, so the agent
# does not remember the joke from the previous call.
reply = agent.generate_reply(
    messages=[{"content": "Repeat the joke.", "role": "user"}]
)
print(reply)

Conversation

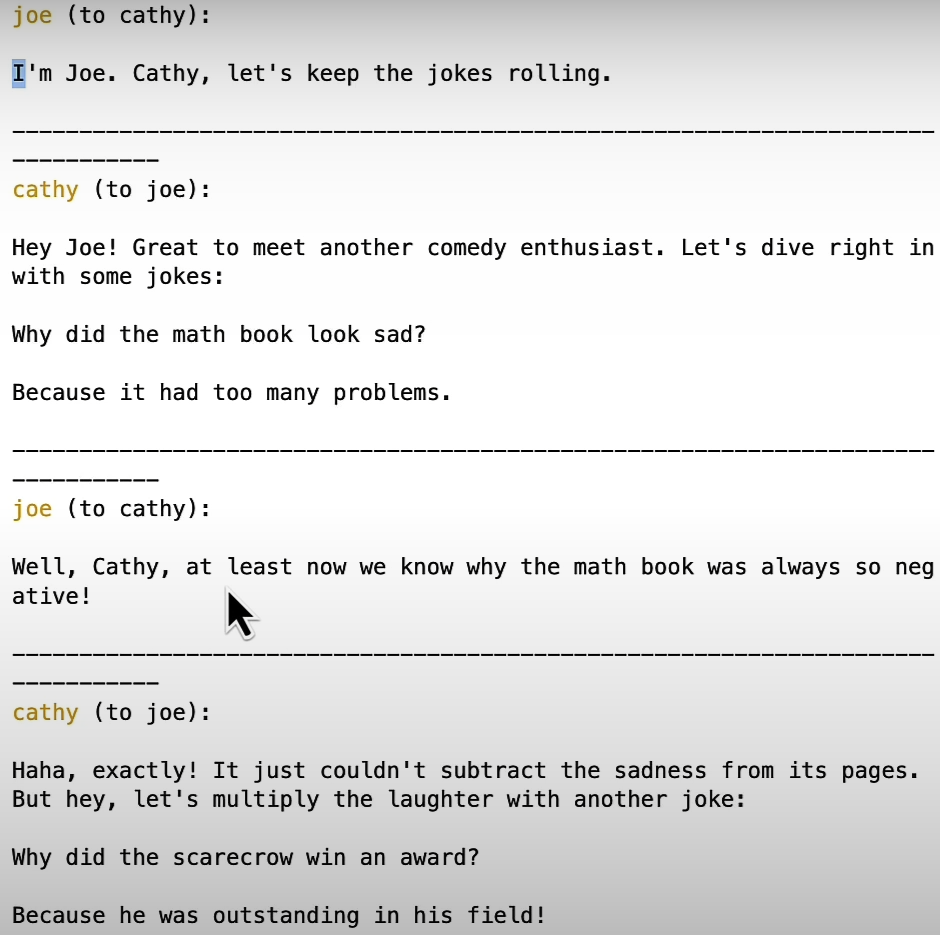

Now create a conversation between two agents, Cathy and Joe, in which the memory of their exchange is preserved.

cathy = ConversableAgent(
    name="cathy",
    system_message=
    "Your name is Cathy and you are a stand-up comedian.",
    llm_config=llm_config,
    human_input_mode="NEVER",
)

joe = ConversableAgent(
    name="joe",
    system_message=
    "Your name is Joe and you are a stand-up comedian. "
    "Start the next joke from the punchline of the previous joke.",
    llm_config=llm_config,
    human_input_mode="NEVER",
)

# Joe starts the conversation; max_turns=2 limits it to two round trips
chat_result = joe.initiate_chat(
    recipient=cathy,
    message="I'm Joe. Cathy, let's keep the jokes rolling.",
    max_turns=2,
)
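AutoGen's documentation describes one turn of max_turns as one conversation round trip, so max_turns=2 should produce four messages: Joe's opener, Cathy's reply, Joe's reply, and Cathy's reply. The toy model below (plain Python, not AutoGen's implementation; run_two_agent_chat and the stub reply functions are hypothetical) shows that counting:

```python
from typing import Callable, Dict, List

# A reply function sees the history so far and returns the next message.
ReplyFn = Callable[[List[Dict[str, str]]], str]


def run_two_agent_chat(sender: str, sender_reply: ReplyFn,
                       recipient: str, recipient_reply: ReplyFn,
                       message: str, max_turns: int) -> List[Dict[str, str]]:
    """Toy two-agent chat where one turn = one round trip (message + reply)."""
    history = [{"name": sender, "content": message}]
    for turn in range(max_turns):
        # The recipient answers the latest message, completing the round trip.
        history.append({"name": recipient, "content": recipient_reply(history)})
        if turn == max_turns - 1:
            break
        # The sender replies, which opens the next round trip.
        history.append({"name": sender, "content": sender_reply(history)})
    return history


# Stub "comedians" that only report the position of their message.
def joe_stub(history):
    return f"Joe, message {len(history) + 1}"


def cathy_stub(history):
    return f"Cathy, message {len(history) + 1}"


history = run_two_agent_chat("joe", joe_stub, "cathy", cathy_stub,
                             "I'm Joe. Cathy, let's keep the jokes rolling.",
                             max_turns=2)
print([m["name"] for m in history])  # ['joe', 'cathy', 'joe', 'cathy']
```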

You can print the chat history, cost, and summary of the conversation.

import pprint

pprint.pprint(chat_result.chat_history)
pprint.pprint(chat_result.cost)
pprint.pprint(chat_result.summary)

Get a better summary of the conversation

By default, the summary is simply the last message of the chat. With summary_method="reflection_with_llm", the LLM is called with the summary prompt after the chat ends; it reflects on the conversation and generates a fresh summary.

chat_result = joe.initiate_chat(
    cathy,
    message="I'm Joe. Cathy, let's keep the jokes rolling.",
    max_turns=2,
    summary_method="reflection_with_llm",  # ask the LLM to summarize the chat
    summary_prompt="Summarize the conversation",
)

pprint.pprint(chat_result.summary)

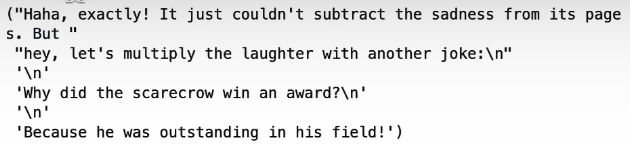

Chat Termination

A chat can also be ended with a termination condition. In the code below, we instruct Cathy to end the chat when she is ready by saying "I gotta go", and we use that phrase as the termination condition: every message an agent receives is checked with its is_termination_msg function, and when the message contains "I gotta go", the chat stops.

cathy = ConversableAgent(
    name="cathy",
    system_message=
    "Your name is Cathy and you are a stand-up comedian. "
    "When you're ready to end the conversation, say 'I gotta go'.",
    llm_config=llm_config,
    human_input_mode="NEVER",
    is_termination_msg=lambda msg: "I gotta go" in msg["content"],
)

joe = ConversableAgent(
    name="joe",
    system_message=
    "Your name is Joe and you are a stand-up comedian. "
    "When you're ready to end the conversation, say 'I gotta go'.",
    llm_config=llm_config,
    human_input_mode="NEVER",
    is_termination_msg=lambda msg: "I gotta go" in msg["content"] or "Goodbye" in msg["content"],
)

# No max_turns here: the termination condition ends the chat
chat_result = joe.initiate_chat(
    recipient=cathy,
    message="I'm Joe. Cathy, let's keep the jokes rolling."
)

cathy.send(message="What's the last joke we talked about?", recipient=joe)  # check that the chat memory is preserved

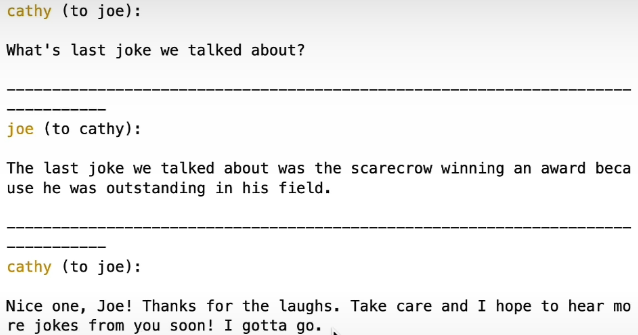

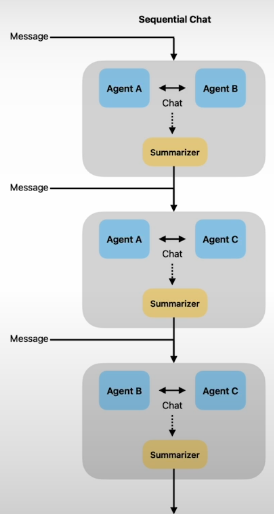
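The termination lambdas above index msg["content"] directly and match the phrase case-sensitively. A slightly more defensive predicate is sketched below as a hypothetical helper (says_goodbye is not part of AutoGen): it tolerates messages whose content is missing or None, which can happen for example with tool-call messages, and it ignores letter case. It could be passed as is_termination_msg=says_goodbye.

```python
from typing import Any, Dict

GOODBYE_PHRASES = ("i gotta go", "goodbye")


def says_goodbye(msg: Dict[str, Any]) -> bool:
    """Return True if the message contains one of the goodbye phrases."""
    # content may be absent or None, so fall back to an empty string.
    content = (msg.get("content") or "").lower()
    return any(phrase in content for phrase in GOODBYE_PHRASES)


print(says_goodbye({"content": "Great set tonight. I Gotta Go!"}))  # True
print(says_goodbye({"content": None}))  # False
```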

Sequential Chats and Customer Onboarding

Let's create a multi-agent system that works together to provide an enjoyable customer onboarding experience for a product. We'll also look at how to keep a human in the loop of an AI system.

A typical approach here is to first collect some customer information, then survey the customer's interests, and finally engage with the customer based on the information gathered. It is therefore sensible to divide the onboarding process into three sub-tasks: information gathering, interest survey, and customer engagement.

llm_config = {"model": "gpt-3.5-turbo"}

from autogen import ConversableAgent

Creating the needed agents

onboarding_personal_information_agent = ConversableAgent(
    name="Onboarding Personal Information Agent",
    system_message='''You are a helpful customer onboarding agent,
    you are here to help new customers get started with our product.
    Your job is to gather customer's name and location.
    Do not ask for other information. Return 'TERMINATE'
    when you have gathered all the information.''',
    llm_config=llm_config,
    code_execution_config=False,
    human_input_mode="NEVER",
)

onboarding_topic_preference_agent = ConversableAgent(
    name="Onboarding Topic preference Agent",
    system_message='''You are a helpful customer onboarding agent,
    you are here to help new customers get started with our product.
    Your job is to gather customer's preferences on news topics.
    Do not ask for other information.
    Return 'TERMINATE' when you have gathered all the information.''',
    llm_config=llm_config,
    code_execution_config=False,
    human_input_mode="NEVER",
)

customer_engagement_agent = ConversableAgent(
    name="Customer Engagement Agent",
    system_message='''You are a helpful customer service agent
    here to provide fun for the customer based on the user's
    personal information and topic preferences.
    This could include fun facts, jokes, or interesting stories.
    Make sure to make it engaging and fun!
    Return 'TERMINATE' when you are done.''',
    llm_config=llm_config,
    code_execution_config=False,
    human_input_mode="NEVER",
    is_termination_msg=lambda msg: "terminate" in msg.get("content").lower(),
)

customer_proxy_agent = ConversableAgent(
    name="customer_proxy_agent",
    llm_config=False,  # no LLM: a human types the customer's replies
    code_execution_config=False,
    human_input_mode="ALWAYS",
    is_termination_msg=lambda msg: "terminate" in msg.get("content").lower(),
)

Creating tasks

Now you can create a series of chats (tasks) that together make up the onboarding process. Each one is a two-agent chat between an onboarding agent and the customer proxy agent. In each chat, the sender sends an initial message to the recipient to start the conversation, and the two agents then go back and forth until the maximum number of turns is reached or a termination message is received. Because the chats run sequentially, the output of one task must be available as input to the next. This is handled by the summary method: the summary of each chat is carried over to the following chats, and the summary prompt tells the LLM how to write that summary (here, as a JSON object).
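The hand-off between tasks can be pictured with the toy below. It is plain Python, not AutoGen's initiate_chats: each stand-in chat is a function that receives its initial message and returns a summary, and every earlier summary is appended to the next chat's message as context. The "Context:" wording and the example customer data are made up for illustration.

```python
from typing import Callable, Dict, List


def run_sequential_chats(chats: List[Dict]) -> List[str]:
    """Run stand-in chats in order, carrying earlier summaries forward."""
    summaries: List[str] = []
    for chat in chats:
        message = chat["message"]
        if summaries:
            # Illustrative carryover format; AutoGen's exact wording may differ.
            message += "\nContext:\n" + "\n".join(summaries)
        run: Callable[[str], str] = chat["run"]
        summaries.append(run(message))
    return summaries


received: List[str] = []  # records what each stand-in chat was given


def info_chat(message: str) -> str:
    received.append(message)
    return '{"name": "Alice", "location": "Paris"}'


def topic_chat(message: str) -> str:
    received.append(message)
    return "Interested in astronomy."


summaries = run_sequential_chats([
    {"message": "Could you tell me your name and location?", "run": info_chat},
    {"message": "What topics are you interested in?", "run": topic_chat},
])
print(received[1])  # the second chat sees the first chat's summary
```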

chats = [

{

"sender": onboarding_personal_information_agent,

"recipient": customer_proxy_agent,

"message":

"Hello, I'm here to help you get started with our product."

"Could you tell me your name and location?",

"summary_method": "reflection_with_llm",

"summary_args": {

"summary_prompt" : "Return the customer information "

"into as JSON object only: "

"{'name': '', 'location': ''}",

},

"max_turns": 2,

"clear_history" : True

},

{

"sender": onboarding_topic_preference_agent,

"recipient": customer_proxy_agent,

"message":

"Great! Could you tell me what topics you are "

"interested in reading about?",

"summary_method": "reflection_with_llm",

"max_turns": 1,

"clear_history" : False

},

{

"sender": customer_proxy_agent,

"recipient": customer_engagement_agent,

"message": "Let's find something fun to read.",

"max_turns": 1,

"summary_method": "reflection_with_llm",

},

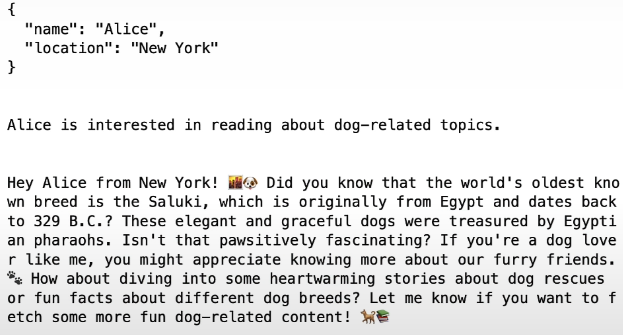

]Start the onboarding process

You might receive a slightly different response than shown in the video. Feel free to experiment with other inputs, such as name, location, and preferences.

from autogen import initiate_chats

chat_results = initiate_chats(chats)

for chat_result in chat_results:
    print(chat_result.summary)
    print("\n")

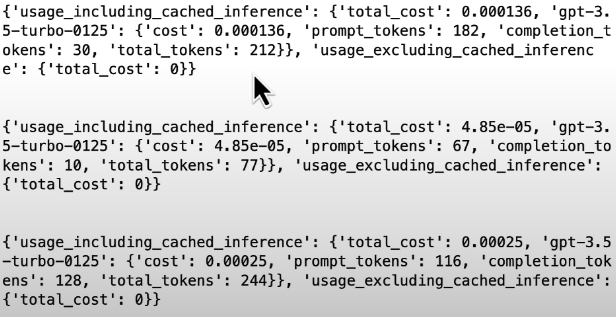

for chat_result in chat_results:
    print(chat_result.cost)
    print("\n")

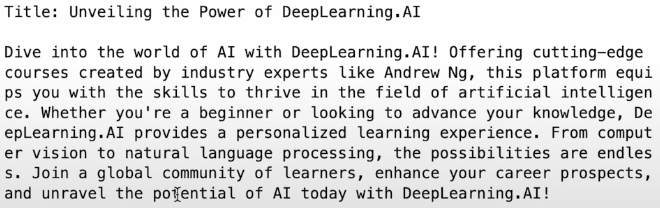

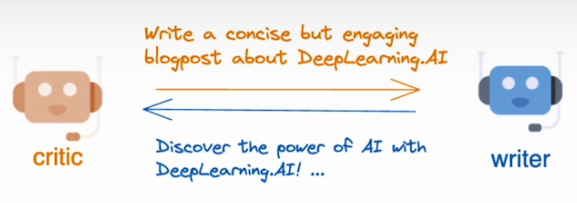

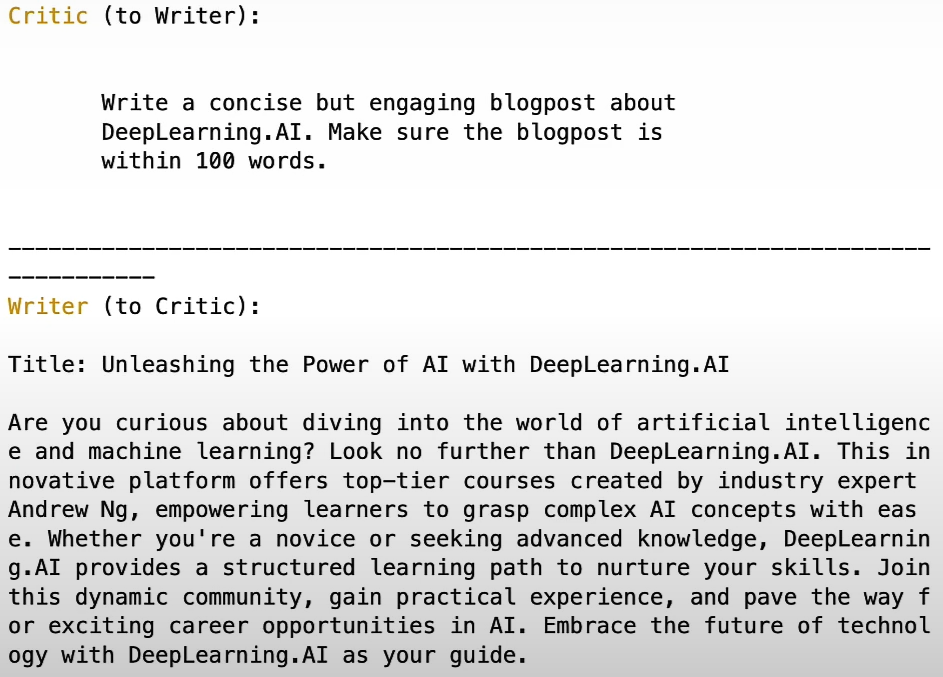
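Because the first summary prompt asks for a JSON object, you will often want to turn chat_results[0].summary into a Python dict. The template in that prompt uses single quotes, so the model may answer with a Python-style dict that json.loads rejects. Below is a hedged sketch of a tolerant parser (parse_summary is a hypothetical helper, not an AutoGen function) that tries strict JSON first and falls back to ast.literal_eval:

```python
import ast
import json
from typing import Any, Dict


def parse_summary(summary: str) -> Dict[str, Any]:
    """Extract and parse the first {...} object in an LLM summary."""
    start, end = summary.find("{"), summary.rfind("}")
    if start == -1 or end < start:
        raise ValueError(f"No object found in summary: {summary!r}")
    payload = summary[start:end + 1]
    try:
        return json.loads(payload)  # strict JSON (double quotes)
    except json.JSONDecodeError:
        # Python-style dict literal such as {'name': 'Alice'}, evaluated safely.
        return ast.literal_eval(payload)


print(parse_summary("{'name': 'Alice', 'location': 'Paris'}"))
print(parse_summary('Here you go: {"name": "Bob", "location": "Rome"}'))
```

In the notebook, this would be applied as parse_summary(chat_results[0].summary).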

Reflection and Blogpost Writing

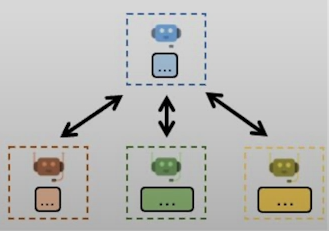

We will learn the agent reflection design pattern and use it to write a high-quality blog post. We will also learn how to use the nested chat conversation structure to carry out a more sophisticated reflection process: a group of reviewer agents is nested inside a critic agent, acting as the critic's inner monologue, to reflect on a blog post produced by a writer agent.

llm_config = {"model": "gpt-3.5-turbo"}

task = '''
Write a concise but engaging blogpost about
DeepLearning.AI. Make sure the blogpost is
within 100 words.
'''

Create a writer agent

import autogen

writer = autogen.AssistantAgent(
    name="Writer",
    system_message="You are a writer. You write engaging and concise "
    "blogpost (with title) on given topics. You must polish your "
    "writing based on the feedback you receive and give a refined "
    "version. Only return your final work without additional comments.",
    llm_config=llm_config,
)

reply = writer.generate_reply(messages=[{"content": task, "role": "user"}])
print(reply)

Adding reflection

One approach here is reflection, a well-known and effective agentic design pattern. One way to implement reflection is to have another agent reflect on the work and help improve it. We create a critic agent that reviews the writer agent's output and gives feedback, and then start a conversation between the two agents.

critic = autogen.AssistantAgent(
    name="Critic",
    is_termination_msg=lambda x: x.get("content", "").find("TERMINATE") >= 0,
    llm_config=llm_config,
    system_message="You are a critic. You review the work of "
    "the writer and provide constructive "
    "feedback to help improve the quality of the content.",
)

res = critic.initiate_chat(
    recipient=writer,
    message=task,
    max_turns=2,
    summary_method="last_msg"
)

Nested chat

In many cases you may want a more complex reflection workflow, such as an inner monologue for the critic agent. For example, you might want the critic to give feedback on specific aspects of the work: whether the content will rank well in search engines and attract organic traffic, whether it has legal or ethical issues, and so on. Let's see how nested chats handle this. A nested chat is essentially a chat that serves as an agent's inner monologue.
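To make the "inner monologue" idea concrete, here is a toy model in plain Python. ToyCritic and the reviewer functions are hypothetical stand-ins, not AutoGen classes: when a message arrives from the registered trigger, the agent runs its inner chats in sequence (each one also sees the earlier reviews, similar to carryover) and returns the result of the last inner chat as its reply.

```python
from typing import Callable, Dict, List, Optional

Reviewer = Callable[[str], str]


class ToyCritic:
    """Toy agent whose reply to a trigger sender is an inner chain of reviews."""

    def __init__(self) -> None:
        self.nested: Dict[str, List[Reviewer]] = {}

    def register_nested_chats(self, reviewers: List[Reviewer], trigger: str) -> None:
        self.nested[trigger] = reviewers

    def reply(self, sender: str, content: str) -> Optional[str]:
        reviewers = self.nested.get(sender)
        if not reviewers:
            return None  # no inner monologue registered for this sender
        notes: List[str] = []
        for review in reviewers:
            # Each inner chat sees the draft plus all earlier reviews.
            notes.append(review("\n".join([content] + notes)))
        return notes[-1]  # the last inner chat becomes the outer reply


def seo(text: str) -> str:
    return "SEO: add the keyword 'AI courses' to the title"


def legal(text: str) -> str:
    return "Legal: no issues found"


def meta(text: str) -> str:
    # Aggregate every earlier review (all lines after the one-line draft).
    return "Final: " + "; ".join(text.splitlines()[1:])


critic = ToyCritic()
critic.register_nested_chats([seo, legal, meta], trigger="Writer")
print(critic.reply("Writer", "Draft blog post"))
```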

SEO_reviewer = autogen.AssistantAgent(
    name="SEO Reviewer",
    llm_config=llm_config,
    system_message="You are an SEO reviewer, known for "
    "your ability to optimize content for search engines, "
    "ensuring that it ranks well and attracts organic traffic. "
    "Make sure your suggestion is concise (within 3 bullet points), "
    "concrete and to the point. "
    "Begin the review by stating your role.",
)

legal_reviewer = autogen.AssistantAgent(
    name="Legal Reviewer",
    llm_config=llm_config,
    system_message="You are a legal reviewer, known for "
    "your ability to ensure that content is legally compliant "
    "and free from any potential legal issues. "
    "Make sure your suggestion is concise (within 3 bullet points), "
    "concrete and to the point. "
    "Begin the review by stating your role.",
)

ethics_reviewer = autogen.AssistantAgent(
    name="Ethics Reviewer",
    llm_config=llm_config,
    system_message="You are an ethics reviewer, known for "
    "your ability to ensure that content is ethically sound "
    "and free from any potential ethical issues. "
    "Make sure your suggestion is concise (within 3 bullet points), "
    "concrete and to the point. "
    "Begin the review by stating your role. ",
)

meta_reviewer = autogen.AssistantAgent(
    name="Meta Reviewer",
    llm_config=llm_config,
    system_message="You are a meta reviewer, you aggregate and review "
    "the work of other reviewers and give a final suggestion on the content.",
)

The next step is to specify the nested chats to be registered. Here, we apply the sequential chat pattern from the previous lesson to create a series of conversations between the critic and the reviewers.

Orchestrate the nested chats to solve the task

We now have a list of four chats, each with a specific reviewer as the recipient. Later, we will register this list with the critic agent. Because the critic owns these nested chats, it is used as the sender by default, so no sender needs to be specified.

For the first three chats, we use an LLM to produce a summary in the required format, so summary_method is set to reflection_with_llm. A summary_prompt is also provided so that each reviewer returns its review as a JSON object with a reviewer field and a review field. In all of these chats, the maximum number of turns is set to one. We also need to configure the initial message so that the nested reviewers can access the content to be reviewed. A common approach is to take it from the outer chat session (between the critic and the writer), which is why the initial message is defined as a function, reflection_message.

# Build the initial message from the last message of the outer chat session
def reflection_message(recipient, messages, sender, config):
    # recipient is the critic (owner of the nested chats), sender is the writer
    last_draft = recipient.chat_messages_for_summary(sender)[-1]["content"]
    return f"Review the following content.\n\n{last_draft}"

review_chats = [
    {
        "recipient": SEO_reviewer,
        "message": reflection_message,
        "summary_method": "reflection_with_llm",
        "summary_args": {"summary_prompt":
            "Return review as a JSON object only: "
            "{'Reviewer': '', 'Review': ''}. Here Reviewer should be your role",},
        "max_turns": 1},
    {
        "recipient": legal_reviewer,
        "message": reflection_message,
        "summary_method": "reflection_with_llm",
        "summary_args": {"summary_prompt":
            "Return review as a JSON object only: "
            "{'Reviewer': '', 'Review': ''}.",},
        "max_turns": 1},
    {
        "recipient": ethics_reviewer,
        "message": reflection_message,
        "summary_method": "reflection_with_llm",
        "summary_args": {"summary_prompt":
            "Return review as a JSON object only: "
            "{'Reviewer': '', 'Review': ''}",},
        "max_turns": 1},
    {
        "recipient": meta_reviewer,
        "message": "Aggregate feedback from all reviewers and give final suggestions on the writing.",
        "max_turns": 1},
]

When the critic receives a message from the trigger agent (here, the writer), it first runs this nested chat session to reflect on the content and then uses the result as its reply.

critic.register_nested_chats(
    review_chats,
    trigger=writer,  # run the nested chats whenever a message comes from the writer
)

res = critic.initiate_chat(
    recipient=writer,
    message=task,
    max_turns=2,
    summary_method="last_msg"
)

print(res.summary)

Tool Use and Conversational Chess

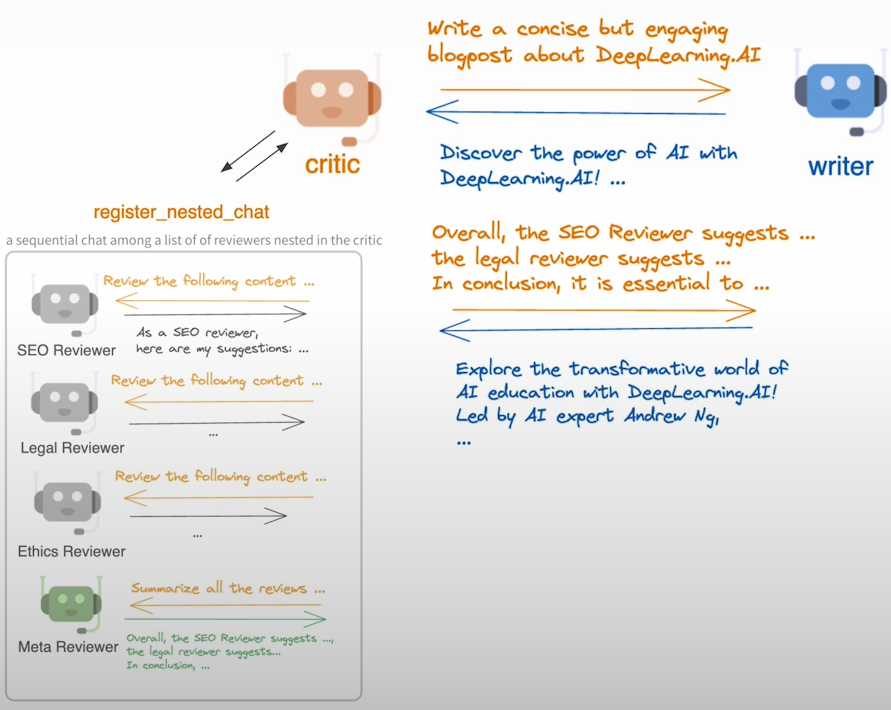

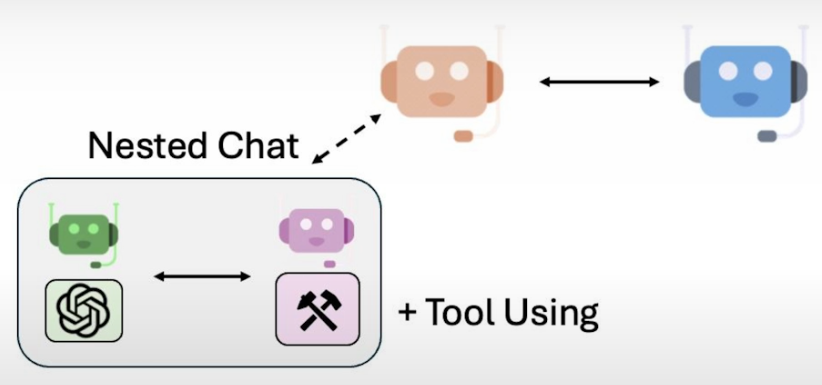

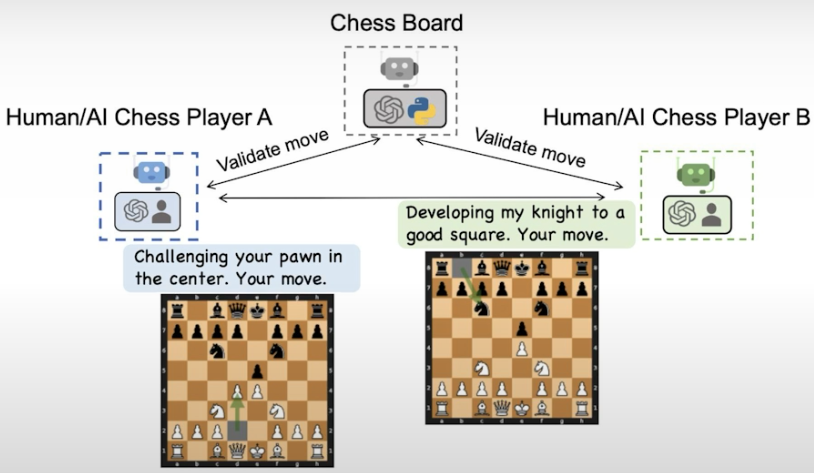

We will build a conversational chess game to demonstrate tool use inside nested chats. Both player agents can call tools to make legal moves on the chessboard.

llm_config = {"model": "gpt-4-turbo"}

import chess
import chess.svg
from typing_extensions import Annotated

board = chess.Board()
made_move = False

Defining the needed tools

Tool for getting legal moves

def get_legal_moves(
) -> Annotated[str, "A list of legal moves in UCI format"]:
    return "Possible moves are: " + ",".join(
        [str(move) for move in board.legal_moves]
    )

Tool for making a move on the board

from IPython.display import display  # also built into Jupyter notebooks


def make_move(
    move: Annotated[str, "A move in UCI format."]
) -> Annotated[str, "Result of the move."]:
    global made_move
    move = chess.Move.from_uci(move)
    board.push_uci(str(move))  # raises ValueError for an illegal move
    made_move = True

    # Display the board.
    display(
        chess.svg.board(
            board,
            arrows=[(move.from_square, move.to_square)],
            fill={move.from_square: "gray"},
            size=200
        )
    )

    # Get the piece name, capitalized for white pieces. Unicode chess
    # symbols have no upper/lower case, so check the piece color instead.
    piece = board.piece_at(move.to_square)
    piece_symbol = piece.unicode_symbol()
    piece_name = (
        chess.piece_name(piece.piece_type).capitalize()
        if piece.color == chess.WHITE
        else chess.piece_name(piece.piece_type)
    )
    return f"Moved {piece_name} ({piece_symbol}) from "\
           f"{chess.SQUARE_NAMES[move.from_square]} to "\
           f"{chess.SQUARE_NAMES[move.to_square]}."

Create agents

You will create the two player agents and a board proxy agent for the chessboard.

from autogen import ConversableAgent

# Player white agent
player_white = ConversableAgent(
    name="Player White",
    system_message="You are a chess player and you play as white. "
    "First call get_legal_moves(), to get a list of legal moves. "
    "Then call make_move(move) to make a move.",
    llm_config=llm_config,
)

# Player black agent
player_black = ConversableAgent(
    name="Player Black",
    system_message="You are a chess player and you play as black. "
    "First call get_legal_moves(), to get a list of legal moves. "
    "Then call make_move(move) to make a move.",
    llm_config=llm_config,
)

def check_made_move(msg):
    # End the board proxy's chat once a move has been made, then reset the flag
    global made_move
    if made_move:
        made_move = False
        return True
    else:
        return False

board_proxy = ConversableAgent(
    name="Board Proxy",
    llm_config=False,  # no LLM use
    is_termination_msg=check_made_move,  # stop conversing when a move has been made
    default_auto_reply="Please make a move.",
    human_input_mode="NEVER",
)

Register the tools

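A tool must be registered with both the agent that calls it (the caller, which sees the tool's description and decides when to use it) and the agent that executes it (the executor, which actually runs the function). Here, the player agents are the callers and the board proxy is the executor.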
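The caller/executor split can be pictured with this toy (plain Python; ToyExecutor and the add function are hypothetical). The caller's LLM only emits a tool call, a function name plus JSON-encoded arguments in the OpenAI tool-call format, and the executor looks the name up and runs the matching Python function:

```python
import json
from typing import Any, Callable, Dict


class ToyExecutor:
    """Stand-in for the executor side of a tool registration."""

    def __init__(self) -> None:
        self.functions: Dict[str, Callable[..., Any]] = {}

    def register(self, name: str, func: Callable[..., Any]) -> None:
        self.functions[name] = func

    def execute(self, tool_call: Dict[str, Any]) -> str:
        name = tool_call["function"]["name"]
        if name not in self.functions:
            return f"Error: function {name} not found."
        # Arguments arrive as a JSON string produced by the LLM.
        kwargs = json.loads(tool_call["function"]["arguments"] or "{}")
        return str(self.functions[name](**kwargs))


def add(a: int, b: int) -> int:
    return a + b


toy_executor = ToyExecutor()
toy_executor.register("add", add)
call = {"id": "call_1", "type": "function",
        "function": {"name": "add", "arguments": '{"a": 2, "b": 3}'}}
print(toy_executor.execute(call))  # 5
```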

from autogen import register_function

for caller in [player_white, player_black]:
    register_function(
        get_legal_moves,
        caller=caller,
        executor=board_proxy,
        name="get_legal_moves",
        description="Get legal moves.",
    )

    register_function(
        make_move,
        caller=caller,
        executor=board_proxy,
        name="make_move",
        description="Call this tool to make a move.",
    )

player_black.llm_config["tools"]

This tool definition is automatically populated with the type, function description, and parameters.
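To see roughly how a function's signature and Annotated hints can become such a tool definition, here is a simplified, stdlib-only sketch. tool_schema is a hypothetical helper; AutoGen's own generator handles many more types and fields, so its output may differ in detail.

```python
import inspect
from typing import Annotated, Any, Dict, get_args, get_origin, get_type_hints

# Map a few Python types to JSON Schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_schema(func, description: str) -> Dict[str, Any]:
    """Build an OpenAI-style tool definition from a function signature."""
    hints = get_type_hints(func, include_extras=True)  # keep Annotated metadata
    properties: Dict[str, Any] = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        hint, doc = hints.get(name, str), ""
        if get_origin(hint) is Annotated:
            hint, doc = get_args(hint)[0], get_args(hint)[1]
        properties[name] = {"type": JSON_TYPES.get(hint, "string"), "description": doc}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": description,
            "parameters": {"type": "object", "properties": properties,
                           "required": required},
        },
    }


def make_move(move: Annotated[str, "A move in UCI format."]) -> Annotated[str, "Result of the move."]:
    return f"Moved {move}"  # stub: no real board here


schema = tool_schema(make_move, "Call this tool to make a move.")
print(schema)
```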

Register the nested chats

To make moves on the chess board, each player agent will communicate with the board proxy agent via nested chat.

player_white.register_nested_chats(
    trigger=player_black,
    chat_queue=[
        {
            "sender": board_proxy,
            "recipient": player_white,
            "summary_method": "last_msg",
        }
    ],
)

player_black.register_nested_chats(
    trigger=player_white,
    chat_queue=[
        {
            "sender": board_proxy,
            "recipient": player_black,
            "summary_method": "last_msg",
        }
    ],
)

Start the Game

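Black starts the game by sending the first message to White. That message triggers White's nested chat with the board proxy, so White makes the first move.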

board = chess.Board()

chat_result = player_black.initiate_chat(
    player_white,
    message="Let's play chess! Your move.",
    max_turns=2,
)

Adding a fun chitchat to the game!

player_white = ConversableAgent(
    name="Player White",
    system_message="You are a chess player and you play as white. "
    "First call get_legal_moves(), to get a list of legal moves. "
    "Then call make_move(move) to make a move. "
    "After a move is made, chitchat to make the game fun.",
    llm_config=llm_config,
)

player_black = ConversableAgent(
    name="Player Black",
    system_message="You are a chess player and you play as black. "
    "First call get_legal_moves(), to get a list of legal moves. "
    "Then call make_move(move) to make a move. "
    "After a move is made, chitchat to make the game fun.",
    llm_config=llm_config,
)

for caller in [player_white, player_black]:
    register_function(
        get_legal_moves,
        caller=caller,
        executor=board_proxy,
        name="get_legal_moves",
        description="Get legal moves.",
    )

    register_function(
        make_move,
        caller=caller,
        executor=board_proxy,
        name="make_move",
        description="Call this tool to make a move.",
    )

player_white.register_nested_chats(
    trigger=player_black,
    chat_queue=[
        {
            "sender": board_proxy,
            "recipient": player_white,
            "summary_method": "last_msg",
            "silent": True,  # we do not need to inspect the inner conversation
        }
    ],
)

player_black.register_nested_chats(
    trigger=player_white,
    chat_queue=[
        {
            "sender": board_proxy,
            "recipient": player_black,
            "summary_method": "last_msg",
            "silent": True,
        }
    ],
)

board = chess.Board()

chat_result = player_black.initiate_chat(
    player_white,
    message="Let's play chess! Your move.",
    max_turns=2,
)

Note: To add human input to this game, set human_input_mode="ALWAYS" for both player agents.

Coding and Financial Analysis

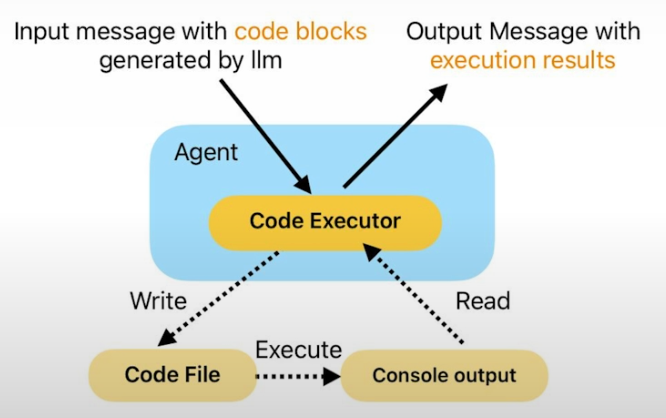

You will build two agent systems in which agents collaborate to complete a task while asking for human feedback. In the first, the LLM agent generates the code entirely by itself; in the second, it also uses user-provided code. The previous lesson relied on tool calling, but one limitation is that many other models do not support that capability in the same way. You may also want agents to be more creative and write free-form code, rather than relying only on predefined functions.

llm_config = {"model": "gpt-4-turbo"}

Define a code executor

First, we need a code executor. AutoGen supports several code execution methods, including Docker-based and Jupyter notebook-based execution; here we use local command-line execution.
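Conceptually, a local command-line executor writes the code it receives to a file in its working directory and runs it in a subprocess with a timeout. The sketch below illustrates that idea with the standard library only; run_python_locally and the snippet.py file name are hypothetical, and this is not how LocalCommandLineCodeExecutor is actually implemented. Keep in mind that running LLM-generated code locally is not sandboxed, which is one reason Docker-based execution exists.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Tuple


def run_python_locally(code: str, work_dir: str, timeout: int = 60) -> Tuple[int, str]:
    """Save code to work_dir and run it with the current interpreter."""
    path = Path(work_dir)
    path.mkdir(parents=True, exist_ok=True)
    script = path / "snippet.py"  # hypothetical file name
    script.write_text(code)
    try:
        proc = subprocess.run(
            [sys.executable, script.name],
            cwd=path, capture_output=True, text=True, timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return 1, f"Timed out after {timeout} seconds"
    # Return the exit code and everything the script printed.
    return proc.returncode, proc.stdout + proc.stderr


with tempfile.TemporaryDirectory() as tmp:
    exit_code, output = run_python_locally("print(6 * 7)", tmp)
print(exit_code, output.strip())  # 0 42
```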

from autogen.coding import LocalCommandLineCodeExecutor

executor = LocalCommandLineCodeExecutor(
    timeout=60,  # each execution times out after 60 seconds
    work_dir="coding",  # all files are written under the coding folder
)

Create agents

from autogen import ConversableAgent, AssistantAgent- An agent with code executor configuration

code_executor_agent = ConversableAgent(

name="code_executor_agent",

llm_config=False,

code_execution_config={"executor": executor},

human_input_mode="ALWAYS",

default_auto_reply=

"Please continue. If everything is done, reply 'TERMINATE'.",

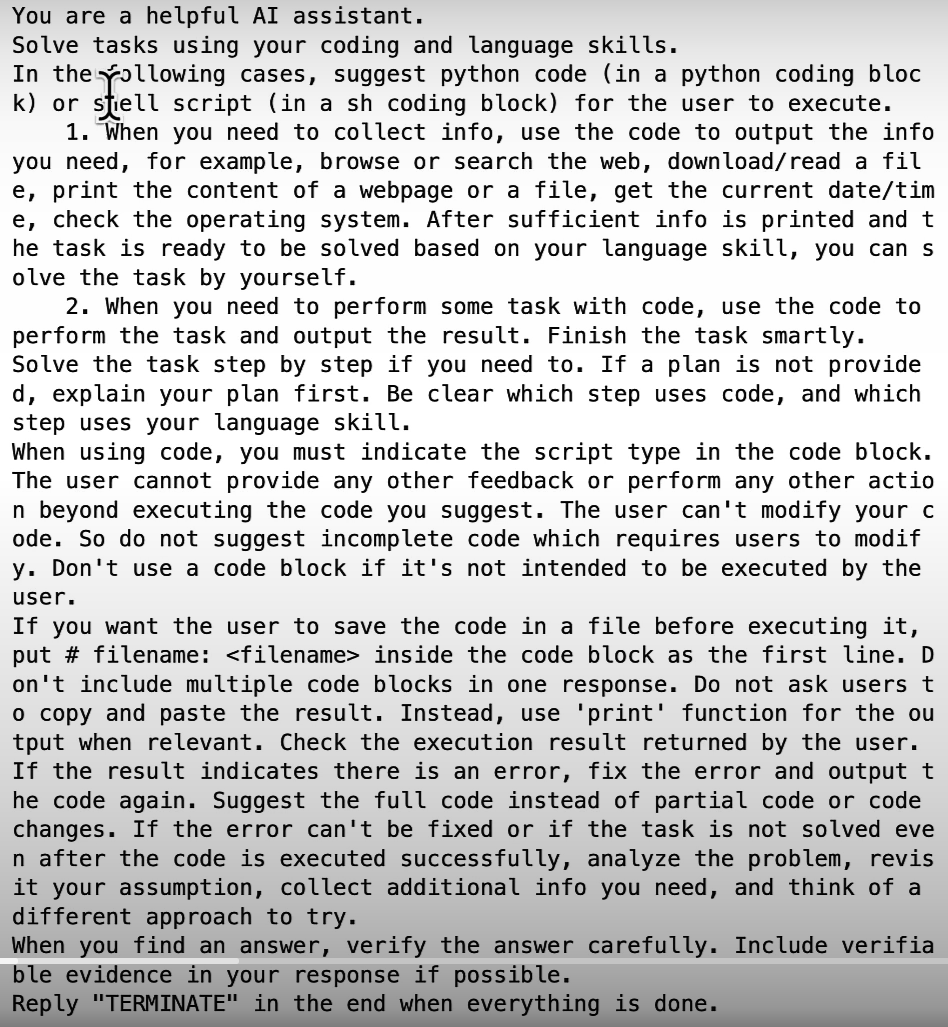

)2. Agent with code-writing capability

code_writer_agent = AssistantAgent(
    name="code_writer_agent",
    llm_config=llm_config,
    code_execution_config=False,  # does not execute code
    human_input_mode="NEVER",
)

code_writer_agent_system_message = code_writer_agent.system_message
print(code_writer_agent_system_message)  # default system message of the code writer agent

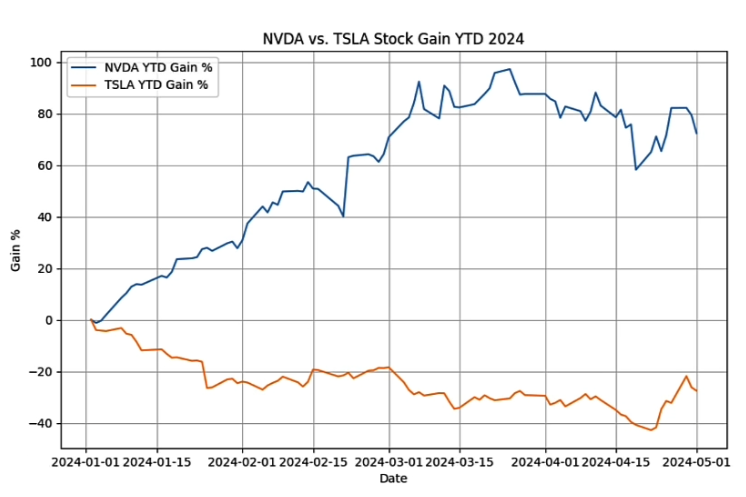

Task

Instruct the two agents to collaborate on a stock analysis activity.
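The task message below asks for the code in a markdown code block because the executor agent looks for fenced code blocks in the writer's reply and runs those. AutoGen has its own extraction logic; the regex sketch here (extract_code_blocks is a hypothetical helper) only illustrates the idea. The fence string is built from three backticks at runtime so the example nests cleanly inside this post.

```python
import re
from typing import List, Tuple

FENCE = "`" * 3  # three backticks, built at runtime
CODE_BLOCK = re.compile(FENCE + r"[ \t]*(\w*)[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code_blocks(reply: str) -> List[Tuple[str, str]]:
    """Return (language, code) pairs for every fenced block in a reply."""
    return [(lang or "unknown", code) for lang, code in CODE_BLOCK.findall(reply)]


reply = ("Here is the script.\n"
         + FENCE + "python\nprint('hello')\n" + FENCE
         + "\nRun it and tell me the result.")
print(extract_code_blocks(reply))  # [('python', "print('hello')\n")]
print(extract_code_blocks("No code here."))  # []
```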

import datetime

today = datetime.datetime.now().date()
message = f"Today is {today}. "\
    "Create a plot showing stock gain YTD for NVDA and TSLA. "\
    "Make sure the code is in markdown code block and save the figure"\
    " to a file ytd_stock_gains.png."

chat_result = code_executor_agent.initiate_chat(
    code_writer_agent,
    message=message,
)

Plot

Your plot may differ from run to run, because the LLM writes free-form code and may choose a different plot type, such as a bar plot. If you want a specific plot type, ask for it explicitly in the message. The executor saves the generated code as a .py file in the coding folder, and the plot is saved there as a .png file, as requested.

import os
from IPython.display import Image

Image(os.path.join("coding", "ytd_stock_gains.png"))

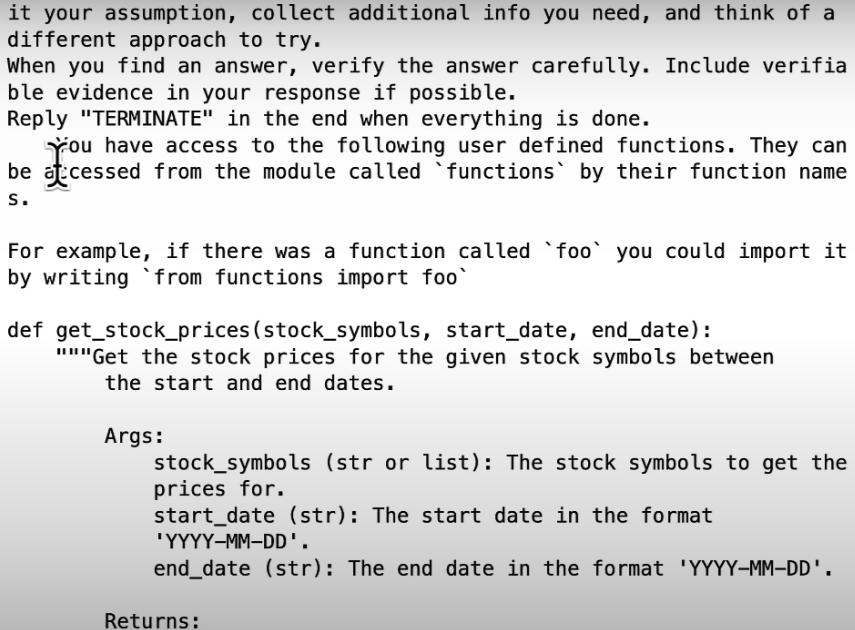

User-defined Functions

Instead of asking the LLM to write code for downloading stock data and plotting charts every time, you can write functions for these two tasks and have the LLM call them from its code.

def get_stock_prices(stock_symbols, start_date, end_date):
    """Get the stock prices for the given stock symbols between
    the start and end dates.

    Args:
        stock_symbols (str or list): The stock symbols to get the
        prices for.
        start_date (str): The start date in the format
        'YYYY-MM-DD'.
        end_date (str): The end date in the format 'YYYY-MM-DD'.

    Returns:
        pandas.DataFrame: The stock prices for the given stock
        symbols indexed by date, with one column per stock
        symbol.
    """
    import yfinance

    stock_data = yfinance.download(
        stock_symbols, start=start_date, end=end_date
    )
    return stock_data.get("Close")


def plot_stock_prices(stock_prices, filename):
    """Plot the stock prices for the given stock symbols.

    Args:
        stock_prices (pandas.DataFrame): The stock prices for the
        given stock symbols.
        filename (str): The path of the image file to save the plot to.
    """
    import matplotlib.pyplot as plt

    plt.figure(figsize=(10, 5))
    for column in stock_prices.columns:
        plt.plot(
            stock_prices.index, stock_prices[column], label=column
        )
    plt.title("Stock Prices")
    plt.xlabel("Date")
    plt.ylabel("Price")
    plt.legend()  # show the per-symbol labels set above
    plt.grid(True)
    plt.savefig(filename)

Create a new executor with the user-defined functions

We need to tell the code writer agent that these two functions exist. We do this by appending a description of them to the code writer agent's system message, so that it calls them instead of rewriting that logic itself.

executor = LocalCommandLineCodeExecutor(
    timeout=60,
    work_dir="coding",
    functions=[get_stock_prices, plot_stock_prices],
)

code_writer_agent_system_message += executor.format_functions_for_prompt()
print(code_writer_agent_system_message)

The first part of the output is the original system message; after it comes a description of the user-defined functions. The generated code can import them by name from a module called functions.
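A rough idea of how such a prompt section can be produced from plain Python functions is sketched below with inspect. describe_functions and the add function are hypothetical, and the wording differs from what format_functions_for_prompt actually emits; the point is that each function's signature and docstring are what tell the LLM how to call it, so clear docstrings matter.

```python
import inspect
from typing import Callable, List


def describe_functions(functions: List[Callable]) -> str:
    """List each function's signature and docstring for a system prompt."""
    parts = ["You can import the following functions from the module `functions`:"]
    for func in functions:
        signature = f"def {func.__name__}{inspect.signature(func)}"
        doc = inspect.getdoc(func) or "(no docstring)"
        parts.append(f"{signature}\n    {doc}")
    return "\n\n".join(parts)


def add(a: int, b: int) -> int:
    """Return the sum of a and b."""
    return a + b


prompt = describe_functions([add])
print(prompt)
```

Code written by the agent would then use an import such as from functions import get_stock_prices.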

Let’s update the agents with the new system message

code_writer_agent = ConversableAgent(
    name="code_writer_agent",
    system_message=code_writer_agent_system_message,
    llm_config=llm_config,
    code_execution_config=False,
    human_input_mode="NEVER",
)

code_executor_agent = ConversableAgent(
    name="code_executor_agent",
    llm_config=False,
    code_execution_config={"executor": executor},
    human_input_mode="ALWAYS",
    default_auto_reply=
    "Please continue. If everything is done, reply 'TERMINATE'.",
)

Starting the same task again

chat_result = code_executor_agent.initiate_chat(
    code_writer_agent,
    message=f"Today is {today}. "
    "Download the stock prices YTD for NVDA and TSLA and create "
    "a plot. Make sure the code is in markdown code block and "
    "save the figure to a file stock_prices_YTD_plot.png.",
)

Plot the results

Image(os.path.join("coding", "stock_prices_YTD_plot.png"))

Planning and Stock Report Generation

Let's learn about multi-agent group chats and build a custom group chat in which agents work together to produce a detailed report on a stock's performance over the past month. This complex task requires planning, so we add a planning agent to the group chat. We will also learn how to customize speaker transitions in a group chat.

llm_config = {"model": "gpt-4-turbo"}

task = "Write a blogpost about the stock price performance of "\
    "Nvidia in the past month. Today's date is 2024-04-23."

Build a group chat

This group chat will include these agents:

- User_proxy (Admin): lets the user give feedback on the report and ask for it to be improved.
- Planner: determines the information needed to complete the task.
- Engineer: writes code according to the plan defined by the planner.
- Executor: runs the engineer's code.
- Writer: writes the report.

import autogen

user_proxy = autogen.ConversableAgent(
    name="Admin",
    system_message="Give the task, and send "
    "instructions to writer to refine the blog post.",
    code_execution_config=False,
    llm_config=llm_config,
    human_input_mode="ALWAYS",
)

planner = autogen.ConversableAgent(
    name="Planner",
    system_message="Given a task, please determine "
    "what information is needed to complete the task. "
    "Please note that the information will all be retrieved using"
    " Python code. Please only suggest information that can be "
    "retrieved using Python code. "
    "After each step is done by others, check the progress and "
    "instruct the remaining steps. If a step fails, try to "
    "workaround",
    description="Planner. Given a task, determine what "
    "information is needed to complete the task. "
    "After each step is done by others, check the progress and "
    "instruct the remaining steps",
    llm_config=llm_config,
)

engineer = autogen.AssistantAgent(
    name="Engineer",
    llm_config=llm_config,
    description="An engineer that writes code based on the plan "
    "provided by the planner.",
)

Here you'll use a different way of executing code: passing a dictionary as code_execution_config. You can use the LocalCommandLineCodeExecutor instead if you prefer. For more information about code_execution_config, see the AutoGen documentation:

https://microsoft.github.io/autogen/docs/reference/agentchat/conversable_agent/#__init__

executor = autogen.ConversableAgent(
    name="Executor",
    system_message="Execute the code written by the "
    "engineer and report the result.",
    human_input_mode="NEVER",
    code_execution_config={
        "last_n_messages": 3,  # look for code in the last 3 messages
        "work_dir": "coding",
        "use_docker": False,
    },
)

writer = autogen.ConversableAgent(
    name="Writer",
    llm_config=llm_config,
    system_message="Writer. "
    "Please write blogs in markdown format (with relevant titles)"
    " and put the content in pseudo ```md``` code block. "
    "You take feedback from the admin and refine your blog.",
    description="Writer. "
    "Write blogs based on the code execution results and take "
    "feedback from the admin to refine the blog."
)

Define the group chat

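groupchat = autogen.GroupChat(
    agents=[user_proxy, engineer, writer, executor, planner],
    messages=[],
    max_round=10,
)

manager = autogen.GroupChatManager(
    groupchat=groupchat, llm_config=llm_config
)

Starting the group chat

groupchat_result = user_proxy.initiate_chat(
    manager,
    message=task,
)

Add a speaker selection policy

The agents are redefined with more specific descriptions, and the group chat gets a dictionary of allowed speaker transitions: with speaker_transitions_type="allowed", each agent maps to the agents that may speak right after it.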

user_proxy = autogen.ConversableAgent(
    name="Admin",
    system_message="Give the task, and send "
    "instructions to writer to refine the blog post.",
    code_execution_config=False,
    llm_config=llm_config,
    human_input_mode="ALWAYS",
)

planner = autogen.ConversableAgent(
    name="Planner",
    system_message="Given a task, please determine "
    "what information is needed to complete the task. "
    "Please note that the information will all be retrieved using"
    " Python code. Please only suggest information that can be "
    "retrieved using Python code. "
    "After each step is done by others, check the progress and "
    "instruct the remaining steps. If a step fails, try to "
    "workaround",
    description="Given a task, determine what "
    "information is needed to complete the task. "
    "After each step is done by others, check the progress and "
    "instruct the remaining steps",
    llm_config=llm_config,
)

engineer = autogen.AssistantAgent(
    name="Engineer",
    llm_config=llm_config,
    description="Write code based on the plan "
    "provided by the planner.",
)

writer = autogen.ConversableAgent(
    name="Writer",
    llm_config=llm_config,
    system_message="Writer. "
    "Please write blogs in markdown format (with relevant titles)"
    " and put the content in pseudo ```md``` code block. "
    "You take feedback from the admin and refine your blog.",
    description="After all the info is available, "
    "write blogs based on the code execution results and take "
    "feedback from the admin to refine the blog. ",
)

executor = autogen.ConversableAgent(
    name="Executor",
    description="Execute the code written by the "
    "engineer and report the result.",
    human_input_mode="NEVER",
    code_execution_config={
        "last_n_messages": 3,
        "work_dir": "coding",
        "use_docker": False,
    },
)

groupchat = autogen.GroupChat(
    agents=[user_proxy, engineer, writer, executor, planner],
    messages=[],
    max_round=10,
    allowed_or_disallowed_speaker_transitions={
        user_proxy: [engineer, writer, executor, planner],
        engineer: [user_proxy, executor],
        writer: [user_proxy, planner],
        executor: [user_proxy, engineer, planner],
        planner: [user_proxy, engineer, writer],
    },
    speaker_transitions_type="allowed",
)

manager = autogen.GroupChatManager(
    groupchat=groupchat, llm_config=llm_config
)

groupchat_result = user_proxy.initiate_chat(
    manager,
    message=task,
)

You can also plan and decompose tasks using a planner as a function call, or with AutoBuild.
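The allowed-transitions dictionary in the group chat above is a directed graph. As a quick sanity check of what it permits, the hypothetical helper below (plain Python, with agent names as strings mirroring those agents) reports which steps in a proposed speaker sequence would be disallowed. For example, the Engineer cannot hand off directly to the Writer; the conversation has to pass through the Executor and Planner, or through the Admin, first.

```python
from typing import Dict, List

# Mirrors allowed_or_disallowed_speaker_transitions, using agent names.
ALLOWED: Dict[str, List[str]] = {
    "Admin": ["Engineer", "Writer", "Executor", "Planner"],
    "Engineer": ["Admin", "Executor"],
    "Writer": ["Admin", "Planner"],
    "Executor": ["Admin", "Engineer", "Planner"],
    "Planner": ["Admin", "Engineer", "Writer"],
}


def check_transitions(allowed: Dict[str, List[str]], speakers: List[str]) -> List[str]:
    """Return the disallowed 'previous -> next' steps in a speaker sequence."""
    errors = []
    for prev, nxt in zip(speakers, speakers[1:]):
        if nxt not in allowed.get(prev, []):
            errors.append(f"{prev} -> {nxt}")
    return errors


plan = ["Admin", "Planner", "Engineer", "Executor", "Planner", "Writer"]
print(check_transitions(ALLOWED, plan))  # []
print(check_transitions(ALLOWED, ["Admin", "Engineer", "Writer"]))  # ['Engineer -> Writer']
```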

For More

Check the website, GitHub, and Discord for more advanced features.

AutoGen GitHub: https://github.com/microsoft/autogen

AutoGen Discord (16k members): https://discord.gg/pAbnFJrkgZ

E-mail: auto-gen@outlook.com

Resource

Deeplearning.ai (2024), AI Agentic Design Patterns with AutoGen:

https://www.deeplearning.ai/short-courses/ai-agentic-design-patterns-with-autogen/
