This is the Turkish translation of the “Getting Started with Mistral” course on Deeplearning.ai.

Because this course was published last year, its comparisons are made against Llama 2. The models are updated frequently, but the core concepts remain the same, so I have not included up-to-date information about the individual models.

Introduction

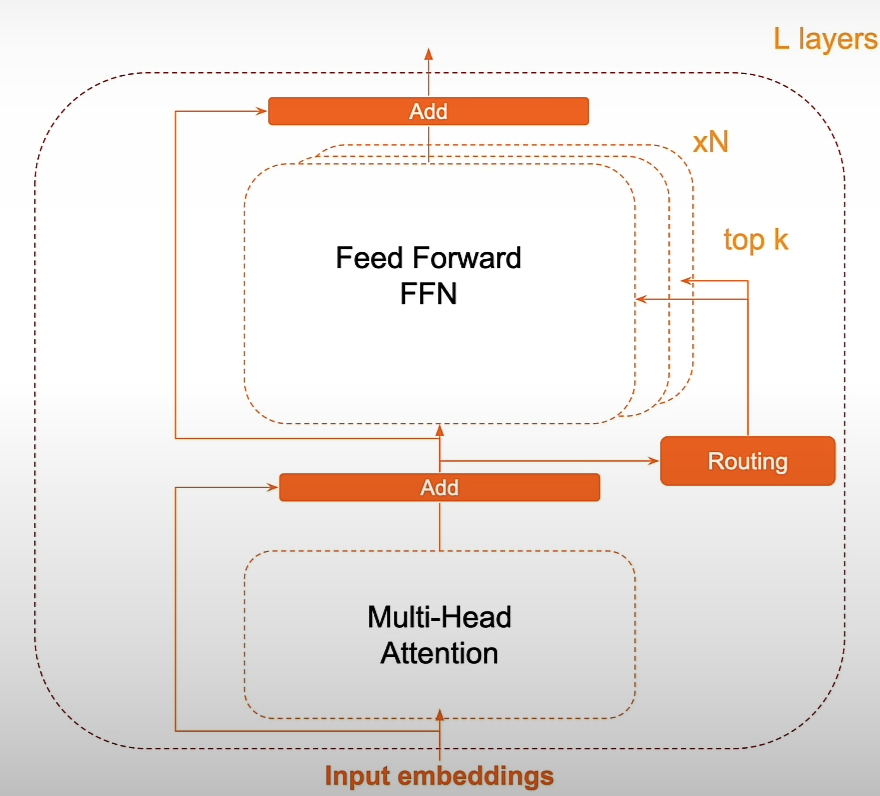

Many popular and effective LLMs are based on the standard transformer architecture. Mistral's recent open-source model, Mixtral 8x7B, modifies this architecture with a mixture of experts (MoE).

This means that each layer contains eight independent feedforward neural networks, known as experts. At inference time, a separate gating network (the router) selects two of these eight experts for each token, and the weighted average of the two experts' outputs is used to predict the next token. This mixture-of-experts design lets Mixtral achieve the performance of a larger model while keeping inference costs comparable to those of a smaller one: although Mixtral has 46.7 billion parameters in total, it uses only 12.9 billion of them per token at inference time.
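The routing step described above can be sketched in a few lines of NumPy. This is a toy illustration under stated assumptions, not Mixtral's implementation: the sizes are tiny and random, each expert is a two-layer ReLU network (Mixtral's experts use SwiGLU), and only a single token is routed. The gate applies a softmax over the logits of the two selected experts only, which follows the formulation in the Mixtral paper.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_ff, n_experts, top_k = 8, 16, 8, 2  # toy sizes, not Mixtral's

# Each toy expert is a two-layer feedforward network (ReLU here; Mixtral uses SwiGLU).
experts = [
    (rng.standard_normal((d_model, d_ff)), rng.standard_normal((d_ff, d_model)))
    for _ in range(n_experts)
]
gate_w = rng.standard_normal((d_model, n_experts))  # router (gating) weights


def expert_forward(x, params):
    w_in, w_out = params
    return np.maximum(x @ w_in, 0.0) @ w_out


def moe_layer(x):
    """Route one token vector x through its top-k experts."""
    logits = x @ gate_w                      # one routing score per expert
    chosen = np.argsort(logits)[-top_k:]     # indices of the k highest-scoring experts
    scores = logits[chosen]
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()                 # softmax over the selected experts only
    # Weighted sum of the selected experts' outputs; the other experts never run.
    out = sum(w * expert_forward(x, experts[i]) for w, i in zip(weights, chosen))
    return out, chosen, weights


token = rng.standard_normal(d_model)
out, chosen, weights = moe_layer(token)
print("experts used:", chosen, "weights:", weights.round(3), "output shape:", out.shape)
```

Only two of the eight expert networks are evaluated for the token, which is why compute per token scales with the active parameters rather than the total.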

In this course, we will learn how to use function calling with Mistral's API. Function calling lets you instruct the model to call a user-defined Python function, such as one that performs a web search or retrieves text from a database, so that it can obtain the information it needs to answer a user's request. With function calling, the LLM can hand off tasks that code handles better, such as looking up information in a large database or performing complex calculations, and carry them out safely and efficiently.

Overview

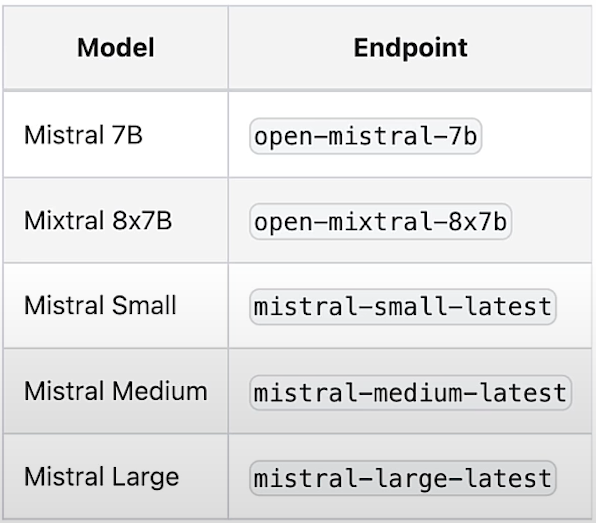

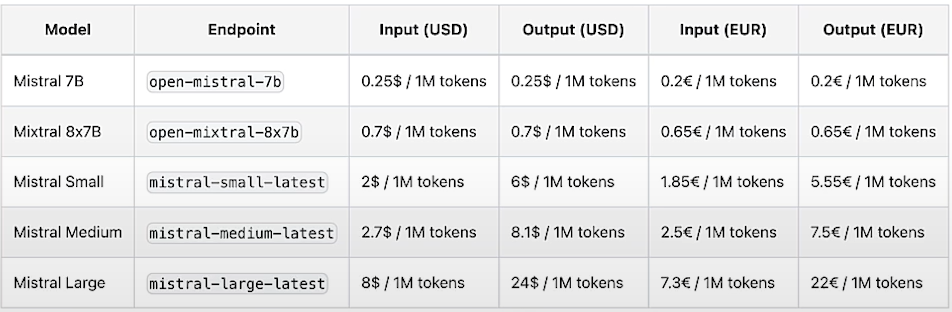

Let's look at the various Mistral models. Mistral aims to build the best foundation models, and it currently offers six models to cover a wide range of use cases and enterprise needs.

We evaluate performance on a variety of tasks, such as reasoning benchmarks including HellaSwag, ARC-Easy, ARC-Challenge, and others. At a high level, imagine you have eight experts available to help you with your text. Instead of consulting all of them, the model picks the most suitable experts for each token.

In more detail, the basic building block of the model is a transformer block made up of two layers: a feedforward layer and a multi-head attention layer. Every input token passes through the same layers. How can we increase the model's capacity? We duplicate the feedforward layer n times. But how do we decide which input tokens are sent to which feedforward layer? We use a router that sends each token to the top-K feedforward layers (experts) and ignores the rest. As a result, although Mixtral has 46.7 billion parameters in total, it uses only 12.9 billion parameters per token, which gives strong performance with fast inference. It outperforms Llama 2 70B on most benchmarks with six times faster inference, and it matches or outperforms GPT-3.5 on most standard benchmarks.
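The 46.7B total and 12.9B active figures can be reproduced with a back-of-the-envelope count. The hyperparameters below are assumptions taken from Mixtral 8x7B's publicly released model configuration (they are not stated in the course), and the count assumes no bias terms. Only the expert term changes between the two totals: all eight experts are stored, but only two run per token.

```python
# Assumed Mixtral 8x7B hyperparameters (from its published model config).
hidden = 4096            # model dimension
ffn = 14336              # inner dimension of each expert
layers = 32
n_experts, top_k = 8, 2
n_heads, n_kv_heads = 32, 8
head_dim = hidden // n_heads  # 128
vocab = 32000

# One SwiGLU expert has three matrices: gate and up (hidden x ffn), down (ffn x hidden).
expert = 3 * hidden * ffn
# Grouped-query attention: full-size Q and O projections, smaller K and V projections.
attention = 2 * hidden * hidden + 2 * hidden * n_kv_heads * head_dim
router = hidden * n_experts
norms = 2 * hidden                         # two RMSNorm weight vectors per layer
outside_layers = 2 * vocab * hidden + hidden  # input embeddings, output head, final norm

total = layers * (n_experts * expert + attention + router + norms) + outside_layers
active = layers * (top_k * expert + attention + router + norms) + outside_layers

print(f"total:  {total / 1e9:.1f}B")   # total:  46.7B
print(f"active: {active / 1e9:.1f}B")  # active: 12.9B
```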

If you want to run these models locally, you can use the Hugging Face transformers library, llama.cpp, or Ollama. Depending on your hardware, you may need to quantize the model to run it, and a quantized model may not perform quite as well as the original.

Prompting

!pip install "mistralai<1.0"  # the code in this article uses the pre-1.0 MistralClient interface

from helper import load_mistral_api_key
load_mistral_api_key()

from helper import mistral
mistral("hello, what can you do?")

# CLASSIFICATION
prompt = """
You are a bank customer service bot.
Your task is to assess customer intent and categorize customer
inquiry after <<<>>> into one of the following predefined categories:

card arrival
change pin
exchange rate
country support
cancel transfer
charge dispute

If the text doesn't fit into any of the above categories,
classify it as:
customer service

You will only respond with the predefined category.
Do not provide explanations or notes.

###
Here are some examples:

Inquiry: How do I know if I will get my card, or if it is lost? I am concerned about the delivery process and would like to ensure that I will receive my card as expected. Could you please provide information about the tracking process for my card, or confirm if there are any indicators to identify if the card has been lost during delivery?
Category: card arrival
Inquiry: I am planning an international trip to Paris and would like to inquire about the current exchange rates for Euros as well as any associated fees for foreign transactions.
Category: exchange rate
Inquiry: What countries are getting support? I will be traveling and living abroad for an extended period of time, specifically in France and Germany, and would appreciate any information regarding compatibility and functionality in these regions.
Category: country support
Inquiry: Can I get help starting my computer? I am having difficulty starting my computer, and would appreciate your expertise in helping me troubleshoot the issue.
Category: customer service
###

<<<
Inquiry: {inquiry}
>>>
Category:
"""

# Asking Mistral to check the spelling and grammar of your prompt
response = mistral(f"Please correct the spelling and grammar of \
this prompt and return a text that is the same prompt, \
with the spelling and grammar fixed: {prompt}")
print(response)

# Trying out the model
# response holds the corrected prompt; .format() fills in its {inquiry} placeholder
mistral(
    response.format(
        inquiry="I am inquiring about the availability of your cards in the EU"
    )
)
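The prompt tells the model to reply with the category only, but a reply can still come back with different capitalization, a trailing period, or a "Category:" prefix. A small post-processing step makes the label safe to use downstream. The helper below, `normalize_category`, is a hypothetical sketch (it is not part of the course or the Mistral client); it maps a raw reply onto one of the predefined categories and falls back to "customer service", mirroring the fallback rule in the prompt.

```python
CATEGORIES = [
    "card arrival", "change pin", "exchange rate", "country support",
    "cancel transfer", "charge dispute", "customer service",
]


def normalize_category(reply: str, categories=CATEGORIES, fallback="customer service") -> str:
    """Return the predefined category contained in a model reply, or the fallback."""
    cleaned = reply.strip().lower().rstrip(".")
    if cleaned.startswith("category:"):
        cleaned = cleaned[len("category:"):].strip()
    if cleaned in categories:
        return cleaned
    # Otherwise accept the reply only if exactly one category name appears in it.
    matches = [c for c in categories if c in cleaned]
    return matches[0] if len(matches) == 1 else fallback


print(normalize_category("Country support"))
print(normalize_category("Category: card arrival."))
print(normalize_category("I think this is about the weather"))
```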

# Information Extraction with JSON Mode
medical_notes = """
A 60-year-old male patient, Mr. Johnson, presented with symptoms
of increased thirst, frequent urination, fatigue, and unexplained
weight loss. Upon evaluation, he was diagnosed with diabetes,
confirmed by elevated blood sugar levels. Mr. Johnson's weight
is 210 lbs. He has been prescribed Metformin to be taken twice daily
with meals. It was noted during the consultation that the patient is
a current smoker.
"""

prompt = f"""
Extract information from the following medical notes:
{medical_notes}

Return json format with the following JSON schema:

{{
    "age": {{
        "type": "integer"
    }},
    "gender": {{
        "type": "string",
        "enum": ["male", "female", "other"]
    }},
    "diagnosis": {{
        "type": "string",
        "enum": ["migraine", "diabetes", "arthritis", "acne"]
    }},
    "weight": {{
        "type": "integer"
    }},
    "smoking": {{
        "type": "string",
        "enum": ["yes", "no"]
    }}
}}
"""

response = mistral(prompt, is_json=True)
print(response)
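JSON mode makes the reply parseable, but it does not by itself guarantee that every field matches the schema written into the prompt. A minimal validation sketch, assuming you want to reject replies with wrong types or out-of-range enum values: `SCHEMA` restates the prompt's schema as Python types, `validate_extraction` is a hypothetical helper, and the sample string is an illustrative reply written for this example, not real model output.

```python
import json

# The schema from the prompt above, restated as (Python type, allowed values).
SCHEMA = {
    "age": (int, None),
    "gender": (str, {"male", "female", "other"}),
    "diagnosis": (str, {"migraine", "diabetes", "arthritis", "acne"}),
    "weight": (int, None),
    "smoking": (str, {"yes", "no"}),
}


def validate_extraction(raw: str) -> dict:
    """Parse the model's JSON reply and list any fields that break the schema."""
    data = json.loads(raw)
    problems = []
    for field, (expected_type, allowed) in SCHEMA.items():
        value = data.get(field)
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(value, expected_type) or isinstance(value, bool):
            problems.append(f"{field}: expected {expected_type.__name__}, got {value!r}")
        elif allowed is not None and value not in allowed:
            problems.append(f"{field}: {value!r} not in {sorted(allowed)}")
    return {"data": data, "problems": problems}


# Illustrative reply (not real model output).
sample = '{"age": 60, "gender": "male", "diagnosis": "diabetes", "weight": 210, "smoking": "yes"}'
print(validate_extraction(sample))
```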

# Personalization
email = """
Dear mortgage lender,

What's your 30-year fixed-rate APR, how is it compared to the 15-year
fixed rate?

Regards,
Anna
"""

prompt = f"""
You are a mortgage lender customer service bot, and your task is to
create personalized email responses to address customer questions.
Answer the customer's inquiry using the provided facts below. Ensure
that your response is clear, concise, and directly addresses the
customer's question. Address the customer in a friendly and
professional manner. Sign the email with "Lender Customer Support."

# Facts
30-year fixed-rate: interest rate 6.403%, APR 6.484%
20-year fixed-rate: interest rate 6.329%, APR 6.429%
15-year fixed-rate: interest rate 5.705%, APR 5.848%
10-year fixed-rate: interest rate 5.500%, APR 5.720%
7-year ARM: interest rate 7.011%, APR 7.660%
5-year ARM: interest rate 6.880%, APR 7.754%
3-year ARM: interest rate 6.125%, APR 7.204%
30-year fixed-rate FHA: interest rate 5.527%, APR 6.316%
30-year fixed-rate VA: interest rate 5.684%, APR 6.062%

# Email
{email}
"""

response = mistral(prompt)
print(response)
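Anna's email asks how the 30-year APR compares with the 15-year APR, and the facts in the prompt are enough to work this out. A quick calculation gives a concrete value to look for when you check the generated reply. The figures are copied from the "# Facts" section above; the comparison itself is just arithmetic, not something the course computes.

```python
# APRs (in percent) copied from the "# Facts" section of the prompt above.
apr = {"30-year fixed-rate": 6.484, "15-year fixed-rate": 5.848}

difference = round(apr["30-year fixed-rate"] - apr["15-year fixed-rate"], 3)
print(f"The 30-year APR is {difference} percentage points higher than the 15-year APR.")
```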

# Summarization

newsletter = """

European AI champion Mistral AI unveiled new large language models and formed an alliance with Microsoft.

What’s new: Mistral AI introduced two closed models, Mistral Large and Mistral Small (joining Mistral Medium, which debuted quietly late last year). Microsoft invested $16.3 million in the French startup, and it agreed to distribute Mistral Large on its Azure platform and let Mistral AI use Azure computing infrastructure. Mistral AI makes the new models available to try for free here and to use on its La Plateforme and via custom deployments.

Model specs: The new models’ parameter counts, architectures, and training methods are undisclosed. Like the earlier, open source Mistral 7B and Mixtral 8x7B, they can process 32,000 tokens of input context.

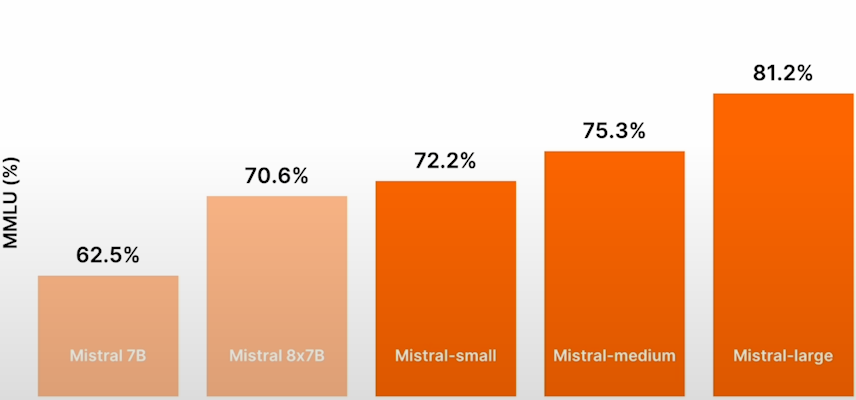

Mistral Large achieved 81.2 percent on the MMLU benchmark, outperforming Anthropic’s Claude 2, Google’s Gemini Pro, and Meta’s Llama 2 70B, though falling short of GPT-4. Mistral Small, which is optimized for latency and cost, achieved 72.2 percent on MMLU.

Both models are fluent in French, German, Spanish, and Italian. They’re trained for function calling and JSON-format output.

Microsoft’s investment in Mistral AI is significant but tiny compared to its $13 billion stake in OpenAI and Google and Amazon’s investments in Anthropic, which amount to $2 billion and $4 billion respectively.

Mistral AI and Microsoft will collaborate to train bespoke models for customers including European governments.

Behind the news: Mistral AI was founded in early 2023 by engineers from Google and Meta. The French government has touted the company as a home-grown competitor to U.S.-based leaders like OpenAI. France’s representatives in the European Commission argued on Mistral’s behalf to loosen the European Union’s AI Act oversight on powerful AI models.

Yes, but: Mistral AI’s partnership with Microsoft has divided European lawmakers and regulators. The European Commission, which already was investigating Microsoft’s agreement with OpenAI for potential breaches of antitrust law, plans to investigate the new partnership as well. Members of President Emmanuel Macron’s Renaissance party criticized the deal’s potential to give a U.S. company access to European users’ data. However, other French lawmakers support the relationship.

Why it matters: The partnership between Mistral AI and Microsoft gives the startup crucial processing power for training large models and greater access to potential customers around the world. It gives the tech giant greater access to the European market. And it gives Azure customers access to a high-performance model that’s tailored to Europe’s unique regulatory environment.

We’re thinking: Mistral AI has made impressive progress in a short time, especially relative to the resources at its disposal as a startup. Its partnership with a leading hyperscaler is a sign of the tremendous processing and distribution power that remains concentrated in the large, U.S.-headquartered cloud companies.

"""

prompt = f"""
You are a commentator. Your task is to write a report on a newsletter.
When presented with the newsletter, come up with interesting questions to ask,
and answer each question.
Afterward, combine all the information and write a report in the markdown
format.

# Newsletter:
{newsletter}

# Instructions:
## Summarize:
In clear and concise language, summarize the key points and themes
presented in the newsletter.

## Interesting Questions:
Generate three distinct and thought-provoking questions that can be
asked about the content of the newsletter. For each question:
- After "Q: ", describe the problem
- After "A: ", provide a detailed explanation of the problem addressed
in the question.
- Enclose the ultimate answer in <>.

## Write an analysis report
Using the summary and the answers to the interesting questions,
create a comprehensive report in Markdown format.
"""

response = mistral(prompt)
print(response)

Mistral Python Client

The helper function that you imported from helper.py and used earlier in this notebook is shown below. For more information, see the Mistral AI API documentation. To get your own Mistral AI API key for use outside this classroom, create an account and go to the console to subscribe and generate an API key.

import os

from mistralai.client import MistralClient
from mistralai.models.chat_completion import ChatMessage

def mistral(user_message,
            model="mistral-small-latest",
            is_json=False):
    # Reads the API key from the MISTRAL_API_KEY environment variable
    client = MistralClient(api_key=os.getenv("MISTRAL_API_KEY"))
    messages = [ChatMessage(role="user", content=user_message)]

    if is_json:
        # JSON mode: ask the API to return a valid JSON object
        chat_response = client.chat(
            model=model,
            messages=messages,
            response_format={"type": "json_object"})
    else:
        chat_response = client.chat(
            model=model,
            messages=messages)

    return chat_response.choices[0].message.content

Model Selection

- mistral-small-latest: Simple tasks that can be done in bulk (classification, customer service, or text generation).

- mistral-medium-latest: Intermediate tasks that require moderate reasoning.

- mistral-large-latest: Complex tasks that require advanced reasoning capabilities or are highly specialized (synthetic text generation, code generation, RAG, or agents).

from helper import load_mistral_api_key
api_key, dlai_endpoint = load_mistral_api_key(ret_key=True)

import os
from mistralai.client import MistralClient
from mistralai.models.chat_completion import ChatMessage

def mistral(user_message, model="mistral-small-latest", is_json=False):
    client = MistralClient(api_key=api_key, endpoint=dlai_endpoint)
    messages = [ChatMessage(role="user", content=user_message)]

    if is_json:
        chat_response = client.chat(
            model=model, messages=messages, response_format={"type": "json_object"}
        )
    else:
        chat_response = client.chat(model=model, messages=messages)

    return chat_response.choices[0].message.content

# Mistral Small: Good for simple tasks, with fast inference and lower cost. For example, below is a classification task:
prompt = """
Classify the following email to determine if it is spam or not.
Only respond with the exact text "Spam" or "Not Spam".

# Email:
🎉 Urgent! You've Won a $1,000,000 Cash Prize!
💰 To claim your prize, please click on the link below:
https://bit.ly/claim-your-prize
"""

mistral(prompt, model="mistral-small-latest")

# Mistral Medium: Good for intermediate tasks such as language transformation. Below, it composes text based on provided context: a customer service email based on purchase information.
prompt = """
Compose a welcome email for new customers who have just made
their first purchase with your product.
Start by expressing your gratitude for their business,
and then convey your excitement for having them as a customer.
Include relevant details about their recent order.
Sign the email with "The Fun Shop Team".

Order details:
- Customer name: Anna
- Product: hat
- Estimate date of delivery: Feb. 25, 2024
- Return policy: 30 days
"""

response_medium = mistral(prompt, model="mistral-medium-latest")
print(response_medium)

# Mistral Large: Good for complex tasks that require advanced reasoning. For example, the math and reasoning task below:
prompt = """
Calculate the difference in payment dates between the two \
customers whose payment amounts are closest to each other \
in the following dataset. Do not write code.

# dataset:
'{
  "transaction_id":{"0":"T1001","1":"T1002","2":"T1003","3":"T1004","4":"T1005"},
  "customer_id":{"0":"C001","1":"C002","2":"C003","3":"C002","4":"C001"},
  "payment_amount":{"0":125.5,"1":89.99,"2":120.0,"3":54.3,"4":210.2},
  "payment_date":{"0":"2021-10-05","1":"2021-10-06","2":"2021-10-07","3":"2021-10-05","4":"2021-10-08"},
  "payment_status":{"0":"Paid","1":"Unpaid","2":"Paid","3":"Paid","4":"Pending"}
}'
"""

response_small = mistral(prompt, model="mistral-small-latest")
print(response_small)

response_large = mistral(prompt, model="mistral-large-latest")
print(response_large)
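To judge which model got the reasoning task right, it helps to know the expected answer. The prompt tells the model not to write code, but nothing stops us from computing the ground truth ourselves. This plain-Python check is not part of the course; the five transactions are copied from the dataset in the prompt. It finds the pair of payments with the smallest difference in amount and then counts the days between their dates.

```python
import itertools
from datetime import date

# The five transactions from the dataset in the prompt: id -> (amount, payment date).
payments = {
    "T1001": (125.50, "2021-10-05"),
    "T1002": (89.99, "2021-10-06"),
    "T1003": (120.00, "2021-10-07"),
    "T1004": (54.30, "2021-10-05"),
    "T1005": (210.20, "2021-10-08"),
}

# Pick the pair of transactions whose payment amounts are closest to each other.
(id_a, (amt_a, date_a)), (id_b, (amt_b, date_b)) = min(
    itertools.combinations(payments.items(), 2),
    key=lambda pair: abs(pair[0][1][0] - pair[1][1][0]),
)
days_apart = abs((date.fromisoformat(date_a) - date.fromisoformat(date_b)).days)
print(id_a, id_b, days_apart)
```

The closest amounts are 125.50 (T1001, customer C001) and 120.00 (T1003, customer C003), paid two days apart, so a correct reply should arrive at 2 days.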

# Expense reporting task
transactions = """
McDonald's: 8.40
Safeway: 10.30
Carrefour: 15.00
Toys R Us: 20.50
Panda Express: 10.20
Beanie Baby Outlet: 25.60
World Food Wraps: 22.70
Stuffed Animals Shop: 45.10
Sanrio Store: 85.70
"""

prompt = f"""
Given the purchase details, how much did I spend on each category:
1) restaurants
2) groceries
3) stuffed animals and props
{transactions}
"""

response_small = mistral(prompt, model="mistral-small-latest")
print(response_small)

response_large = mistral(prompt, model="mistral-large-latest")
print(response_large)
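The expense task requires two things: assigning each merchant to a category, then summing the amounts. The assignment is a judgment call, so the mapping below is my own reading of the merchant names. It is an assumption, not something given in the course. For example, Toys R Us and Sanrio Store are both counted under "stuffed animals and props". With that mapping fixed, the totals can be checked exactly against what each model reports.

```python
# Hypothetical merchant -> category mapping (my own reading of the merchant names).
CATEGORY_OF = {
    "McDonald's": "restaurants",
    "Panda Express": "restaurants",
    "World Food Wraps": "restaurants",
    "Safeway": "groceries",
    "Carrefour": "groceries",
    "Toys R Us": "stuffed animals and props",
    "Beanie Baby Outlet": "stuffed animals and props",
    "Stuffed Animals Shop": "stuffed animals and props",
    "Sanrio Store": "stuffed animals and props",
}

transactions = """
McDonald's: 8.40
Safeway: 10.30
Carrefour: 15.00
Toys R Us: 20.50
Panda Express: 10.20
Beanie Baby Outlet: 25.60
World Food Wraps: 22.70
Stuffed Animals Shop: 45.10
Sanrio Store: 85.70
"""

totals = {}
for line in transactions.strip().splitlines():
    merchant, amount = line.rsplit(":", 1)  # split on the last colon only
    category = CATEGORY_OF[merchant.strip()]
    totals[category] = round(totals.get(category, 0.0) + float(amount), 2)
print(totals)
```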

# Writing and checking code
user_message = """
Given an array of integers nums and an integer target, return indices of the two numbers such that they add up to target.

You may assume that each input would have exactly one solution, and you may not use the same element twice.

You can return the answer in any order.

Your code should pass these tests:

assert twoSum([2,7,11,15], 9) == [0,1]
assert twoSum([3,2,4], 6) == [1,2]
assert twoSum([3,3], 6) == [0,1]
"""

print(mistral(user_message, model="mistral-large-latest"))

Function Calling

!pip install pandas "mistralai>=0.1.2,<1.0"

from helper import load_mistral_api_key
api_key, dlai_endpoint = load_mistral_api_key(ret_key=True)

import pandas as pd

data = {
    "transaction_id": ["T1001", "T1002", "T1003", "T1004", "T1005"],
    "customer_id": ["C001", "C002", "C003", "C002", "C001"],
    "payment_amount": [125.50, 89.99, 120.00, 54.30, 210.20],
    "payment_date": [
        "2021-10-05",
        "2021-10-06",
        "2021-10-07",
        "2021-10-05",
        "2021-10-08",
    ],
    "payment_status": ["Paid", "Unpaid", "Paid", "Paid", "Pending"],
}

df = pd.DataFrame(data)
print(df)

# How you might answer data questions without function calling: not recommended, but shown here as a contrast to function calling.
data = """
{
    "transaction_id": ["T1001", "T1002", "T1003", "T1004", "T1005"],
    "customer_id": ["C001", "C002", "C003", "C002", "C001"],
    "payment_amount": [125.50, 89.99, 120.00, 54.30, 210.20],
    "payment_date": [
        "2021-10-05",
        "2021-10-06",
        "2021-10-07",
        "2021-10-05",
        "2021-10-08",
    ],
    "payment_status": ["Paid", "Unpaid", "Paid", "Paid", "Pending"],
}
"""

transaction_id = "T1001"

prompt = f"""
Given the following data, what is the payment status for \
transaction_id={transaction_id}?

data:
{data}
"""

import os
from mistralai.client import MistralClient
from mistralai.models.chat_completion import ChatMessage

def mistral(user_message, model="mistral-small-latest", is_json=False):
    client = MistralClient(api_key=api_key, endpoint=dlai_endpoint)
    messages = [ChatMessage(role="user", content=user_message)]

    if is_json:
        chat_response = client.chat(
            model=model, messages=messages, response_format={"type": "json_object"}
        )
    else:
        chat_response = client.chat(model=model, messages=messages)

    return chat_response.choices[0].message.content

response = mistral(prompt)
print(response)

# Step 1. User: specify tools and query

# Tools: You can define all tools that you might want the model to call
import json

def retrieve_payment_status(df: pd.DataFrame, transaction_id: str) -> str:
    # Return the status as a JSON string so it can be passed back to the model
    if transaction_id in df.transaction_id.values:
        return json.dumps(
            {"status": df[df.transaction_id == transaction_id].payment_status.item()}
        )
    return json.dumps({"error": "transaction id not found."})

status = retrieve_payment_status(df, transaction_id="T1001")
print(status)
type(status)

def retrieve_payment_date(df: pd.DataFrame, transaction_id: str) -> str:
    if transaction_id in df.transaction_id.values:
        return json.dumps(
            {"date": df[df.transaction_id == transaction_id].payment_date.item()}
        )
    return json.dumps({"error": "transaction id not found."})

date = retrieve_payment_date(df, transaction_id="T1002")
print(date)

# You can outline the function specifications with a JSON schema.
tool_payment_status = {
    "type": "function",
    "function": {
        "name": "retrieve_payment_status",
        "description": "Get payment status of a transaction",
        "parameters": {
            "type": "object",
            "properties": {
                "transaction_id": {
                    "type": "string",
                    "description": "The transaction id.",
                }
            },
            "required": ["transaction_id"],
        },
    },
}

type(tool_payment_status)

tool_payment_date = {
    "type": "function",
    "function": {
        "name": "retrieve_payment_date",
        "description": "Get payment date of a transaction",
        "parameters": {
            "type": "object",
            "properties": {
                "transaction_id": {
                    "type": "string",
                    "description": "The transaction id.",
                }
            },
            "required": ["transaction_id"],
        },
    },
}

type(tool_payment_date)

tools = [tool_payment_status, tool_payment_date]
type(tools)
print(tools)

# functools: map each tool name to its Python function, with df already bound
import functools

names_to_functions = {
    "retrieve_payment_status": functools.partial(retrieve_payment_status, df=df),
    "retrieve_payment_date": functools.partial(retrieve_payment_date, df=df),
}

names_to_functions["retrieve_payment_status"](transaction_id="T1001")

print(tools)

# User query example: "What's the status of my transaction?"
from mistralai.models.chat_completion import ChatMessage

chat_history = [
    ChatMessage(role="user", content="What's the status of my transaction?")
]

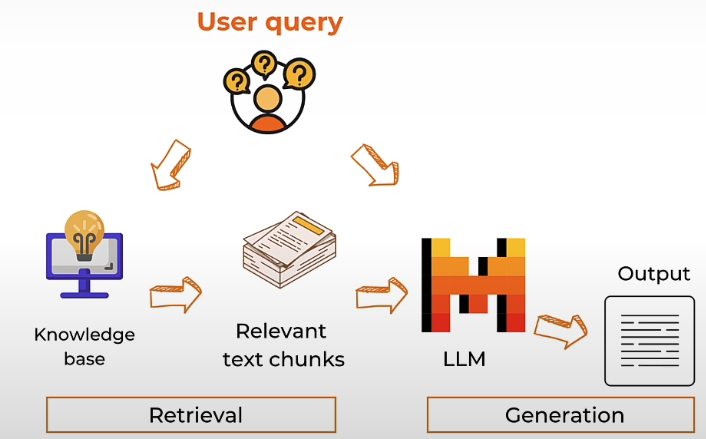
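The walkthrough above stops after Step 1. In the remaining steps, the model answers with a tool call (a function name plus JSON-encoded arguments, as in the `tool_calls[0].function` object used later in the RAG + Function Calling section). Your code then runs the matching Python function, and the result is sent back to the model as a tool message so that it can write the final answer. The sketch below covers only the local part, running the requested function. `execute_tool_call` is a hypothetical helper, and the tool call in the example is written by hand rather than returned by the API, so the sketch runs offline.

```python
import functools
import json

import pandas as pd

df = pd.DataFrame({
    "transaction_id": ["T1001", "T1002", "T1003", "T1004", "T1005"],
    "payment_date": ["2021-10-05", "2021-10-06", "2021-10-07", "2021-10-05", "2021-10-08"],
    "payment_status": ["Paid", "Unpaid", "Paid", "Paid", "Pending"],
})


def retrieve_payment_status(df: pd.DataFrame, transaction_id: str) -> str:
    if transaction_id in df.transaction_id.values:
        return json.dumps({"status": df[df.transaction_id == transaction_id].payment_status.item()})
    return json.dumps({"error": "transaction id not found."})


def retrieve_payment_date(df: pd.DataFrame, transaction_id: str) -> str:
    if transaction_id in df.transaction_id.values:
        return json.dumps({"date": df[df.transaction_id == transaction_id].payment_date.item()})
    return json.dumps({"error": "transaction id not found."})


names_to_functions = {
    "retrieve_payment_status": functools.partial(retrieve_payment_status, df=df),
    "retrieve_payment_date": functools.partial(retrieve_payment_date, df=df),
}


def execute_tool_call(name: str, arguments: str) -> str:
    """Run the tool the model asked for; `arguments` is the JSON string from the tool call."""
    if name not in names_to_functions:
        return json.dumps({"error": f"unknown tool: {name}"})
    kwargs = json.loads(arguments)
    return names_to_functions[name](**kwargs)


# A hand-written tool call of the kind the model would produce for "T1001".
print(execute_tool_call("retrieve_payment_status", '{"transaction_id": "T1001"}'))
```

Returning an error as JSON, instead of raising, gives the model something it can explain to the user when it asks for a tool or transaction that does not exist.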

RAG from Scratch

!pip install faiss-cpu "mistralai>=0.1.2,<1.0"

from helper import load_mistral_api_key
api_key, dlai_endpoint = load_mistral_api_key(ret_key=True)

# Parse the article with BeautifulSoup
import requests
from bs4 import BeautifulSoup
import re

response = requests.get(
    "https://www.deeplearning.ai/the-batch/a-roadmap-explores-how-ai-can-detect-and-mitigate-greenhouse-gases/"
)
html_doc = response.text
soup = BeautifulSoup(html_doc, "html.parser")
tag = soup.find("div", re.compile("^prose--styled"))
text = tag.text
print(text)

# Optionally, save the text into a text file
file_name = "AI_greenhouse_gas.txt"
with open(file_name, 'w') as file:
    file.write(text)

# Chunking
chunk_size = 512
chunks = [text[i : i + chunk_size] for i in range(0, len(text), chunk_size)]
len(chunks)
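The chunking above splits by character count, not by tokens or sentences. Every chunk except possibly the last is exactly `chunk_size` characters long, a chunk can end mid-word, and the number of chunks is the ceiling of `len(text) / chunk_size`. The small sketch below wraps the same list comprehension in a function, `chunk_text` (an illustrative name, not from the course), and applies it to a synthetic string so these properties are visible.

```python
def chunk_text(text: str, chunk_size: int = 512) -> list:
    """Split text into consecutive, non-overlapping chunks of at most chunk_size characters."""
    return [text[i : i + chunk_size] for i in range(0, len(text), chunk_size)]


sample = "a" * 1200
pieces = chunk_text(sample, chunk_size=512)
print([len(p) for p in pieces])  # [512, 512, 176]
```

Because the chunks do not overlap, joining them gives back the original text. A sentence that straddles a boundary is split across two chunks, however, which is one motivation for the more advanced chunking methods listed under "More about RAG".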

# Get embeddings of the chunks
import os
from mistralai.client import MistralClient

def get_text_embedding(txt):
    client = MistralClient(api_key=api_key, endpoint=dlai_endpoint)
    embeddings_batch_response = client.embeddings(model="mistral-embed", input=txt)
    return embeddings_batch_response.data[0].embedding

import numpy as np

text_embeddings = np.array([get_text_embedding(chunk) for chunk in chunks])
text_embeddings

len(text_embeddings[0])

# Store in a vector database
import faiss

d = text_embeddings.shape[1]
index = faiss.IndexFlatL2(d)
index.add(text_embeddings)

# Embed the user query
question = "What are the ways that AI can reduce emissions in Agriculture?"
question_embeddings = np.array([get_text_embedding(question)])
question_embeddings

# Search for chunks that are similar to the query
D, I = index.search(question_embeddings, k=2)
print(I)

retrieved_chunk = [chunks[i] for i in I.tolist()[0]]
print(retrieved_chunk)
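`faiss.IndexFlatL2` performs exact (brute-force) nearest-neighbour search using squared Euclidean distance. `index.search` returns two arrays: `D` holds the distances and `I` holds the row indices of the closest stored vectors, one row per query. The NumPy sketch below computes the same thing for small inputs so that the meaning of `D` and `I` is concrete. `l2_search` is an illustrative helper, not part of faiss, and faiss is far faster at scale.

```python
import numpy as np


def l2_search(index_vectors: np.ndarray, queries: np.ndarray, k: int = 2):
    """Exact k-nearest-neighbour search by squared L2 distance, shaped like faiss output."""
    # Pairwise squared distances, shape (n_queries, n_vectors).
    dists = ((queries[:, None, :] - index_vectors[None, :, :]) ** 2).sum(axis=-1)
    ids = np.argsort(dists, axis=1)[:, :k]            # k closest vectors per query
    return np.take_along_axis(dists, ids, axis=1), ids


vectors = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0], [0.9, 0.1]])
query = np.array([[1.0, 0.0]])
D, I = l2_search(vectors, query, k=2)
print(I)  # [[1 3]]
```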

prompt = f"""
Context information is below.
---------------------
{retrieved_chunk}
---------------------
Given the context information and not prior knowledge, answer the query.
Query: {question}
Answer:
"""

from mistralai.models.chat_completion import ChatMessage

def mistral(user_message, model="mistral-small-latest", is_json=False):
    client = MistralClient(api_key=api_key, endpoint=dlai_endpoint)
    messages = [ChatMessage(role="user", content=user_message)]

    if is_json:
        chat_response = client.chat(
            model=model, messages=messages, response_format={"type": "json_object"}
        )
    else:
        chat_response = client.chat(model=model, messages=messages)

    return chat_response.choices[0].message.content

response = mistral(prompt)
print(response)

RAG + Function Calling

def qa_with_context(text, question, chunk_size=512):
    # split document into chunks
    chunks = [text[i : i + chunk_size] for i in range(0, len(text), chunk_size)]
    # load into a vector database
    text_embeddings = np.array([get_text_embedding(chunk) for chunk in chunks])
    d = text_embeddings.shape[1]
    index = faiss.IndexFlatL2(d)
    index.add(text_embeddings)
    # create embeddings for a question
    question_embeddings = np.array([get_text_embedding(question)])
    # retrieve similar chunks from the vector database
    D, I = index.search(question_embeddings, k=2)
    retrieved_chunk = [chunks[i] for i in I.tolist()[0]]
    # generate a response based on the retrieved text chunks
    prompt = f"""
    Context information is below.
    ---------------------
    {retrieved_chunk}
    ---------------------
    Given the context information and not prior knowledge, answer the query.
    Query: {question}
    Answer:
    """
    response = mistral(prompt)
    return response

I.tolist()
I.tolist()[0]

import functools

names_to_functions = {"qa_with_context": functools.partial(qa_with_context, text=text)}

tools = [
    {
        "type": "function",
        "function": {
            "name": "qa_with_context",
            "description": "Answer user question by retrieving relevant context",
            "parameters": {
                "type": "object",
                "properties": {
                    "question": {
                        "type": "string",
                        "description": "user question",
                    }
                },
                "required": ["question"],
            },
        },
    },
]

question = """
What are the ways AI can mitigate climate change in transportation?
"""

client = MistralClient(api_key=api_key, endpoint=dlai_endpoint)

response = client.chat(
    model="mistral-large-latest",
    messages=[ChatMessage(role="user", content=question)],
    tools=tools,
    tool_choice="any",  # force the model to call one of the tools
)
response

# The tool call contains the function name and its arguments as a JSON string
tool_function = response.choices[0].message.tool_calls[0].function
tool_function
tool_function.name

import json

args = json.loads(tool_function.arguments)
args

function_result = names_to_functions[tool_function.name](**args)
function_result

More about RAG

To learn about more advanced chunking and retrieval methods, you can check out:

- Advanced Retrieval for AI with Chroma

- Sentence window retrieval

- Auto-merge retrieval

- Building and Evaluating Advanced RAG Applications

- Query Expansion

- Cross-encoder reranking

- Training and utilizing Embedding Adapters

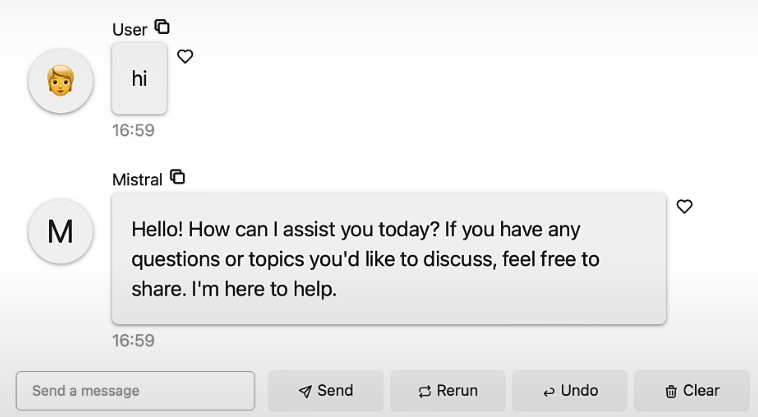

Chat Interface

from helper import load_mistral_api_key
import os
from mistralai.models.chat_completion import ChatMessage
from mistralai.client import MistralClient

api_key, dlai_endpoint = load_mistral_api_key(ret_key=True)

# Panel: Panel is an open-source Python library that you can use to create dashboards and apps
import panel as pn
pn.extension()

# Basic Chat UI
def run_mistral(contents, user, chat_interface):
    client = MistralClient(api_key=api_key, endpoint=dlai_endpoint)
    messages = [ChatMessage(role="user", content=contents)]
    chat_response = client.chat(
        model="mistral-large-latest",
        messages=messages)
    return chat_response.choices[0].message.content

chat_interface = pn.chat.ChatInterface(
    callback=run_mistral,
    callback_user="Mistral"
)

chat_interface

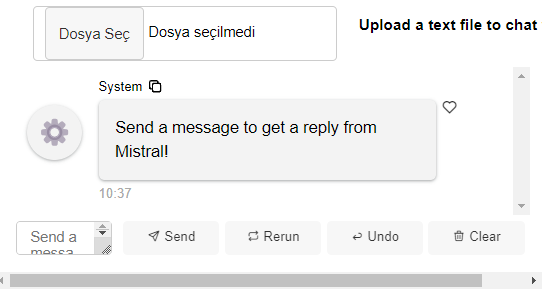

# Connecting the Chat interface with your user-defined function
file_input = pn.widgets.FileInput(accept=".txt", value="", height=50)

# answer_question is a user-defined callback with the signature (contents, user, instance) -> str
chat_interface = pn.chat.ChatInterface(
    callback=answer_question,
    callback_user="Mistral",
    header=pn.Row(file_input, "### Upload a text file to chat with it!"),
)
chat_interface.send(
    "Send a message to get a reply from Mistral!",
    user="System",
    respond=False
)
chat_interface
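The interface above uses `answer_question` as its callback, but its definition does not appear in this article. Below is a minimal sketch of one way to write it, assuming it applies the same retrieve-then-generate steps as `qa_with_context` to the uploaded file. `make_answer_question` and its parameters are hypothetical. The embedding function, the generation function, and the file reader are passed in so the sketch can be tested without an API key, and a NumPy brute-force squared-L2 search stands in for `faiss.IndexFlatL2`. The default chunk size is an arbitrary choice.

```python
import numpy as np


def make_answer_question(get_file_bytes, embed, generate, chunk_size=2048, k=2):
    """Build a ChatInterface-style callback (contents, user, instance) -> str.

    get_file_bytes: returns the uploaded file's raw bytes
    embed: maps a string to a 1-D embedding vector
    generate: maps a prompt string to the model's reply
    """
    def answer_question(contents, user, instance):
        text = get_file_bytes().decode("utf-8")
        chunks = [text[i : i + chunk_size] for i in range(0, len(text), chunk_size)]
        chunk_vecs = np.array([embed(c) for c in chunks], dtype=float)
        query_vec = np.array(embed(contents), dtype=float)
        # Exact squared-L2 search, standing in for faiss.IndexFlatL2.
        dists = ((chunk_vecs - query_vec) ** 2).sum(axis=1)
        retrieved_chunk = [chunks[i] for i in np.argsort(dists)[:k]]
        prompt = (
            "Context information is below.\n"
            "---------------------\n"
            f"{retrieved_chunk}\n"
            "---------------------\n"
            "Given the context information and not prior knowledge, answer the query.\n"
            f"Query: {contents}\n"
            "Answer:"
        )
        return generate(prompt)

    return answer_question


# Offline example: a toy word-count "embedding" and a generator that echoes the prompt.
toy_embed = lambda s: [s.count("cat"), s.count("dog")]
echo = lambda prompt: prompt
callback = make_answer_question(lambda: b"cat cat cat|dog dog dog", toy_embed, echo, chunk_size=12, k=1)
print(callback("dog?", "User", None))
```

In the notebook, this might be wired up as `answer_question = make_answer_question(lambda: file_input.value, get_text_embedding, mistral)` before the `ChatInterface` is created. Panel's `FileInput.value` holds the uploaded file as bytes.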

Reference

[1] Deeplearning.ai (2023). Getting Started with Mistral. https://learn.deeplearning.ai/courses/getting-started-with-mistral/
